Install PVE/PBS from ISO on Hetzner without KVM (Tutorial)

- Thread starter MaLe


yep - would be interested in your setup...

This is btw one piece of a complete cloud workplace solution for small business companies, based on Proxmox and the power of several other Linux products. In my case, it needs only one commercial license, for a Windows terminal server that runs the applications. All the other stuff, like the domain controller, file server, firewall and VPN, is built on open source and easy to administer. So if anyone needs to build something similar, don't hesitate to get in touch with me. I would be happy to share my work.

Yes, but with "Installimage" you have to use their prepared disk setup. RAID is possible, but as far as I know only with Linux RAID. If you want to use ZFS on your (boot) disks, you have to install via ISO. Also, Hetzner has three Proxmox versions in their installimage tool.

Kinda gave up using the ISO installer. The installation worked flawlessly on my dedicated AMD server from the auction, but after rebooting, the server was just not reachable.


Even without an additional private network set up, the server still wouldn't respond. I couldn't even ping it.

I had this set up:

```
auto enp35s0
iface enp35s0 inet static
    address mainip
    netmask 255.255.255.192
    gateway gateway
    up route add -net mainip netmask 255.255.255.192 gw gateway dev enp35s0
```


Maybe the server needs EFI boot. You could try the EFI setup explained earlier in this thread:

```bash
# Download the Proxmox VE installer ISO (8.x-x stands for the current version)
wget http://download.proxmox.com/iso/proxmox-ve_8.x-x.iso
# Download the UEFI firmware archive and unpack it into /root (provides uefi.bin)
wget -qO- http://www.danpros.com/content/files/uefi.tar.gz | tar -xvz -C /root
# Boot the ISO once in QEMU with both NVMe disks attached as raw virtio drives,
# using the UEFI firmware; the VNC console listens on 127.0.0.1:5901 (display :1)
qemu-system-x86_64 -enable-kvm -smp 4 -m 4096 -boot once=d \
  -cdrom ./proxmox-ve_8.x-x.iso \
  -drive file=/dev/nvme0n1,format=raw,media=disk,if=virtio \
  -drive file=/dev/nvme1n1,format=raw,media=disk,if=virtio \
  -vnc 127.0.0.1:1 -bios /root/uefi.bin
```

OK, I haven't heard about such issues. I have always used ZFS RAID1 to set up many servers with two disks, and I've never experienced any issues. On the Hetzner rescue console, did you double-check the interface name of the network card? enp35s0 sounds a bit unusual; most of the AX series use enp7s0 or enp8s0.

I'm pretty sure:

```
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether ipv6
    altname enp35s0
    inet mainip/26 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 ipv6/64 scope global
       valid_lft forever preferred_lft forever
    inet6 ipv6/64 scope link
       valid_lft forever preferred_lft forever
```

According to the rescue system, I don't have EFI: "EFI variables are not supported on this system."

But I've tried both with and without EFI. Without a KVM console, I'm not 100% sure what's going on.
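Since the EFI and non-EFI attempts differ only in whether QEMU loads the UEFI firmware, a small wrapper can print the matching install command for either mode. This is a POSIX shell sketch that reuses the flags and the `/root/uefi.bin` path from the EFI setup earlier in the thread; the function name `qemu_install_cmd` and its argument order are hypothetical, and it only prints the command instead of running it.

```sh
# Hypothetical helper: print (not run) the QEMU command that installs
# PVE/PBS from ISO onto the physical disks, in UEFI or legacy mode.
# Usage: qemu_install_cmd ISO MODE DISK...   (MODE is "uefi" or "legacy")
qemu_install_cmd() {
  iso="$1"
  mode="$2"
  shift 2
  cmd="qemu-system-x86_64 -enable-kvm -smp 4 -m 4096 -boot once=d -cdrom $iso"
  # Pass every physical disk straight through as a raw virtio drive.
  for disk in "$@"; do
    cmd="$cmd -drive file=$disk,format=raw,media=disk,if=virtio"
  done
  # VNC bound to localhost only (display :1 = TCP port 5901).
  cmd="$cmd -vnc 127.0.0.1:1"
  # Only the UEFI variant loads the firmware unpacked to /root/uefi.bin;
  # without -bios, QEMU uses its default legacy BIOS.
  if [ "$mode" = "uefi" ]; then
    cmd="$cmd -bios /root/uefi.bin"
  fi
  printf '%s\n' "$cmd"
}
```

For example, `qemu_install_cmd ./proxmox-ve_8.x-x.iso legacy /dev/nvme0n1 /dev/nvme1n1` prints the legacy-BIOS variant for the two-NVMe setup above. Printing first lets you review the disk paths before handing them to QEMU.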


Sounds strange. I would recommend opening a ticket via Hetzner Robot and ordering a KVM console, which is free. That way you can check whether the server has trouble with the boot process or whether there is some other issue.

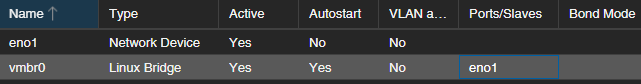

Well, through KVM it works fine:

Interface settings I use atm:

Using this due to: https://github.com/ariadata/proxmox-hetzner

I used to have enp35s0 set to static with the address / gateway there:

```
auto lo
iface lo inet loopback
iface lo inet6 loopback

auto enp35s0
iface enp35s0 inet static
    address xxxx
    netmask xxxx
    gateway xxxx
    # route xxxx via xxxx
    up route add -net xxxx netmask xxxx gw xxxx dev enp35s0

iface enp35s0 inet6 static
    address xxxxx
    netmask 64
    gateway xxxxx
```

Got this info straight from a Debian 12 installation with Proxmox on top of it. I used the exact same settings on a Proxmox OS installation after editing the interfaces file, but even then the server appears to be dead (network related, I would assume).

So network related.

Might as well give up on using the Proxmox OS and simply use a clean Debian 12 and install Proxmox on top of it...


Don't give up. I didn't, and after some failures I was able to install PVE 7 from ISO and just dist-upgrade to 8. All fine now. I can look up the network name tomorrow, because I had the same issues, and the problem is the interface name: it's not `enp**`.
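If a wrong interface name in the installed network config is the cause, one way to catch it before rebooting out of the rescue system is to compare the NIC names referenced in the installed `/etc/network/interfaces` with the names the real hardware gets (for example from `udevadm test-builtin net_id`, as shown later in this thread). A minimal POSIX shell sketch; the function names are hypothetical, and it assumes ifupdown-style `iface` and `bridge-ports` lines such as the Proxmox installer writes.

```sh
# Hypothetical helpers for checking NIC names in an ifupdown config.

# Print every NIC name referenced by iface stanzas and bridge-ports lists,
# skipping loopback, Proxmox bridges (vmbrN) and the keyword "none".
config_nic_names() {
  awk '
    $1 == "iface" { print $2 }
    $1 == "bridge-ports" || $1 == "bridge_ports" { for (i = 2; i <= NF; i++) print $i }
  ' "$1" | grep -v -E '^(lo|vmbr[0-9]+|none)$' | sort -u
}

# check_config_names CONFIG NAME...
# Report each name from CONFIG that is not among the given real NIC names;
# returns 1 if any name is missing, 0 otherwise.
check_config_names() {
  cfg="$1"
  shift
  status=0
  for want in $(config_nic_names "$cfg"); do
    found=0
    for have in "$@"; do
      if [ "$want" = "$have" ]; then found=1; fi
    done
    if [ "$found" -eq 0 ]; then
      echo "not present on this machine: $want"
      status=1
    fi
  done
  return "$status"
}
```

For example, with the installed root file system mounted at `/mnt` (an assumption; a ZFS root pool has to be imported first), `check_config_names /mnt/etc/network/interfaces enp35s0` lists every name in the config that does not match the real NIC.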


That's interesting. Then why would the interface be set to enp upon installation from, e.g., Debian?
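The `enp` names come from systemd's predictable interface naming. The path-based name (`ID_NET_NAME_PATH`) encodes the NIC's PCI location: `en` for Ethernet, then `p<bus>s<slot>`, with bus and slot converted from hex to decimal. The udevadm output later in this thread shows exactly this: PCI device `0000:23:00.0` becomes `p35s0` because 0x23 = 35, while the Hetzner rescue system keeps the classic `eth0` name and lists `enp35s0` only as an `altname`. The POSIX shell sketch below reproduces the mapping; the function name is hypothetical, and it is simplified (assumption): it appends `f<function>` only for a non-zero function (systemd may also append it for function 0 of a multi-function device) and ignores `d<dev_port>`/`n<port>` suffixes and naming-scheme differences between systemd versions.

```sh
# Hypothetical helper: derive the path-based predictable name from a PCI
# address "domain:bus:slot.function" (all fields in hex).
pci_to_ifname() {
  old_ifs=$IFS
  IFS=':.'
  set -- $1          # split on ':' and '.' into domain, bus, slot, function
  IFS=$old_ifs
  domain=$(printf '%d' "0x$1")
  bus=$(printf '%d' "0x$2")
  slot=$(printf '%d' "0x$3")
  func=$(printf '%d' "0x$4")
  name="en"
  # Non-zero PCI domains get a P<domain> prefix.
  if [ "$domain" -ne 0 ]; then name="${name}P$domain"; fi
  name="${name}p${bus}s${slot}"
  # Simplification: function suffix only when the function is non-zero.
  if [ "$func" -ne 0 ]; then name="${name}f$func"; fi
  printf '%s\n' "$name"
}
```

On the rescue system, the PCI address of `eth0` can be read from sysfs, e.g. `pci_to_ifname "$(basename "$(readlink -f /sys/class/net/eth0/device)")"`. `udevadm test-builtin net_id` remains the authoritative answer.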


Sory for late responseGot it to (semi) work.. finally..

My command

Bash:

udevadm test-builtin net_id /sys/class/net/eth0 | grep '^ID_NET_NAME_PATH'enp0s31f6

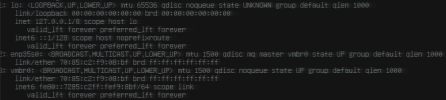

But in reality, as you see below, i-face name was realy far from that result...

This is the interface name, that was able to acces the server...

Thanks for the reply.Sory for late response

My command

Returned meBash:udevadm test-builtin net_id /sys/class/net/eth0 | grep '^ID_NET_NAME_PATH'

enp0s31f6

But in reality, as you see below, i-face name was realy far from that result...

View attachment 62361

This is the interface name, that was able to acces the server...

Code:

Loading kernel module index.

Found cgroup2 on /sys/fs/cgroup/, full unified hierarchy

Found container virtualization none.

Using default interface naming scheme 'v252'.

Parsed configuration file "/usr/lib/systemd/network/99-default.link"

Parsed configuration file "/usr/lib/systemd/network/73-usb-net-by-mac.link"

Created link configuration context.

enp35s0: MAC address identifier: hw_addr=xxxxx → xxxxxx

sd-device: Failed to chase symlinks in "/sys/devices/pci0000:00/0000:00:01.3/0000:03:00.2/0000:20:01.0/0000:23:00.0/of_node".

sd-device: Failed to chase symlinks in "/sys/devices/pci0000:00/0000:00:01.3/0000:03:00.2/0000:20:01.0/0000:23:00.0/physfn".

enp35s0: Parsing slot information from PCI device sysname "0000:23:00.0": success

enp35s0: dev_port=0

enp35s0: PCI path identifier: domain=0 bus=35 slot=0 func=0 phys_port= dev_port=0 → p35s0

Unload kernel module index.

Unloaded link configuration context.

ID_NET_NAME_PATH=enp35s0As my post above. I do have access to proxmox GUI now through 8006, but it has no internet connection for some reason? Yet I can connect to it?

EDIT:

Works now. DNS was set to a private IP range. I changed that through Proxmox, and it now fully works.
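A private DNS server after an ISO install run inside QEMU may come from QEMU's user-mode networking, whose built-in DNS forwarder is 10.0.2.3; that the installer picked it up here is an assumption, not something confirmed in this thread. A quick check for private (RFC 1918) nameservers in a resolv.conf-style file, as a POSIX shell sketch with a hypothetical function name:

```sh
# Hypothetical helper: print nameserver entries from a resolv.conf-style
# file that lie in the RFC 1918 private ranges (10/8, 172.16/12, 192.168/16).
private_nameservers() {
  awk '$1 == "nameserver" { print $2 }' "${1:-/etc/resolv.conf}" |
    grep -E '^(10\.|192\.168\.|172\.(1[6-9]|2[0-9]|3[01])\.)' || true
}
```

If it prints anything on the installed system, replace those entries, either in the GUI under the node's System → DNS (as done here) or directly in `/etc/resolv.conf`.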


Hello, I must have tried to install Proxmox 10 times today. I don't know what I'm doing wrong, but as soon as I leave rescue mode, I can neither ping the server nor reach it in any other way.

I have also tried this script. It ran through without errors, and at the end it says that after the restart the server is reachable under xxx, but after the restart I have no connection.


Hi. I don't know this script, but for the way I suggested, I can provide an updated procedure that works for all current servers at Hetzner. To do that, please figure out which boot process your machine uses. All current servers usually work with EFI boot, but some of the older ones from the Server Auction may use legacy boot.


```
root@rescue ~ # efibootmgr
EFI variables are not supported on this system.
```

I have tried your instructions and 4-5 others including the script.


OK, it seems your system is using legacy boot. You can verify this by booting into the rescue console and logging in to the system via SSH. After login, a welcome message with various information about the system appears; the boot type is reported at the top of it.
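Besides the rescue welcome message and `efibootmgr`, a common way to tell the boot mode of a running Linux system is whether the kernel exposes `/sys/firmware/efi`: the directory exists only when the system was booted via UEFI. A minimal POSIX shell sketch; the function name and the optional directory argument (added only so it can be tested) are hypothetical.

```sh
# Hypothetical helper: print "uefi" if the running system was booted via
# UEFI (the kernel then provides /sys/firmware/efi), otherwise "legacy".
boot_mode() {
  if [ -d "${1:-/sys/firmware/efi}" ]; then
    echo uefi
  else
    echo legacy
  fi
}
```

Run `boot_mode` in the rescue system: for `legacy`, the QEMU install command should be used without the `-bios /root/uefi.bin` option; for `uefi`, with it.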

I will have a new script available to you later today.