Insane high IO delay without reason

- Thread starter spleenftw

Consumer SSDs that can't cache sync writes of a DB because of missing power-loss protection? At least when it comes to small random sync writes, a consumer SSD isn't much faster than an HDD (for example, 400 IOPS vs 100 IOPS).
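To put the 400 vs 100 IOPS example into time per write, here is a small arithmetic sketch. It assumes the sync writes are issued one after another (queue depth 1), which is roughly how a database waiting on each commit behaves; the function names and the 10,000-write figure are illustrative, not from the thread.

```python
def sync_write_latency_ms(iops: float) -> float:
    """Average time per write when writes are issued strictly one after another."""
    return 1000.0 / iops


def seconds_for_writes(n_writes: int, iops: float) -> float:
    """Total time to complete n_writes sync writes at a given IOPS rate."""
    return n_writes / iops


if __name__ == "__main__":
    # IOPS figures taken from the example above; 10,000 writes is a hypothetical workload.
    for name, iops in [("consumer SSD", 400), ("HDD", 100)]:
        print(
            f"{name}: {sync_write_latency_ms(iops):.1f} ms per sync write, "
            f"{seconds_for_writes(10_000, iops):.0f} s for 10,000 sync writes"
        )
```

At 400 IOPS each sync write costs about 2.5 ms, so a few VMs committing small writes concurrently can saturate the drive quickly.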


So what do I do? What can I do?

Reduce the I/O load or buy new SSDs.

I mean, I'm trying to reduce the I/O load, but I kinda don't have any other solution than stopping some VMs?

No, you have to reduce them, e.g. by optimizing their I/O pattern.

For example, InfluxDB flushes its writes, and you need to increase the interval between such flushes so that more writes are combined into one, reducing the IOPS from a lot of small writes to fewer, bigger ones. This is true for any flushing/syncing workload. Every database I know has dedicated chapters on tuning in its documentation, so you need to find out which machine is the worst and optimize it first ... or buy SSDs that are built for such workloads (enterprise SSDs).

As @Dunuin already pointed out, consumer and prosumer SSDs are not good for such workloads, despite what the vendors claim. They are fine as long as you don't have a mixed read/write workload; once they hit that wall, their performance worsens tremendously. In the enterprise field, you normally have guaranteed response times, which are ensured by special firmware so that you can plan with your IOPS; consumer/prosumer hardware is still optimized for other workloads.
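The effect of a longer flush interval can be sketched in a few lines. This is a minimal illustration, not how InfluxDB or any particular database implements it: the hypothetical `write_records` helper appends records to a file and calls `os.fsync()` once per `batch_size` records, so a larger batch means fewer sync writes hitting the SSD for the same data.

```python
import os
import tempfile


def write_records(path: str, records: list, batch_size: int) -> int:
    """Append records to `path`, fsyncing once every `batch_size` records
    (plus once for any remainder). Returns the number of fsync calls.

    batch_size=1 mimics a workload that syncs every single write; larger
    values mimic a longer flush interval that groups writes together.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    fsyncs = 0
    pending = 0
    with open(path, "ab") as f:
        for rec in records:
            f.write(rec)
            pending += 1
            if pending == batch_size:
                f.flush()             # hand Python's buffer to the kernel
                os.fsync(f.fileno())  # ask the kernel to persist it to the device
                fsyncs += 1
                pending = 0
        if pending:  # flush whatever is left over at the end
            f.flush()
            os.fsync(f.fileno())
            fsyncs += 1
    return fsyncs


if __name__ == "__main__":
    records = [b"x" * 128 for _ in range(500)]
    with tempfile.TemporaryDirectory() as d:
        for batch in (1, 10, 100):
            n = write_records(os.path.join(d, f"batch{batch}.log"), records, batch)
            print(f"batch_size={batch:>3}: {n} fsyncs for {len(records)} records")
```

The same 500 records cost 500, 50 or 5 sync writes depending on the batch size; on a drive that manages only a few hundred sync IOPS, that difference dominates.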

@spleenftw Have you made any other progress on this? We are seeing a very similar situation on two of our cluster nodes: high I/O wait and system load. It doesn't seem to matter which VMs are running on those nodes; the more VMs, the higher the load, but the load is MUCH higher than it was about 2 months ago, with no changes to our VM workloads. We noticed it starting around August 18/19, which could have coincided with a Proxmox node update.

The dashboard shows the spike we saw around that time (no changes to the VMs and no ongoing backups/syncs/scrubs/etc.):

The load spike only ended after we migrated the VMs to other nodes. Each time we try to migrate the VMs back, the load spikes again. `smartctl` doesn't show any issues on the drives (which are Samsung 870 QVO SSDs; not the greatest drives, but they had been performing just fine prior to the issue). The drives are only about 60% full. Since we are seeing this on two separate nodes, it does not seem to be a drive issue. Hopefully some of this anecdotal evidence helps you out (assuming we are having similar issues).


@Dunuin No. We have 3 nodes: two with SSDs that are showing the issue, and the other with a 10k HDD that is not showing it.

Maybe because such an HDD actually has better write performance than the QLC SSDs in specific workloads like sequential writes? The smaller QVOs cap at 40MB/s.


That is probably the case. What I don't understand is why performance changed so much on the SSD machines. The average load went from 1-2 up to 7-8. Even light workloads now seem to cause high load. File transfers from the VMs often drop to just a few MB/s (under 10MB/s) without any heavy processes showing in `top`, `iotop`, or `htop` during those times.

Hey, I literally got the same issue after a Proxmox update on my node.

I haven't made any progress, since I'm not sure what I can do.


Thanks for following up. If you haven't come across this, it may be worth a try for you:

https://www.reddit.com/r/Proxmox/comments/dufmli/terrible_disk_write_speed_on_zfs_mirrored_ssds/

Unfortunately, I didn't have any BIOS options to enable/disable the write cache on the controller on my system.


I do not have any ZFS mirror. I'm using mdadm on my two consumer SSDs, but I'll try to check (even though I moved and left my servers at home with no screens lmao).


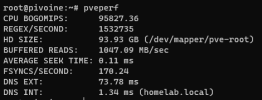

@spleenftw could you report what `pveperf` shows?

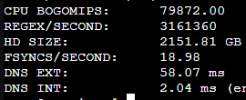

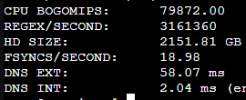

For me, `Fsyncs` is consistently under 20 (even slower than my old spinning-rust disks).
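For a rough cross-check outside of `pveperf`, the sketch below counts how many small write + `fsync` round trips a directory sustains per second. It is an approximation under my own assumptions (4 KiB writes, the same block overwritten each time), not `pveperf`'s actual method, so the numbers will not match it exactly. Point it at a directory on the storage you want to test; note that `/tmp` is often a tmpfs, where `fsync` is effectively free.

```python
import os
import sys
import tempfile
import time


def fsyncs_per_second(directory: str, duration: float = 1.0, block: int = 4096) -> float:
    """Approximate fsync rate: overwrite one `block`-sized chunk at offset 0 and
    fsync it, as many times as possible within `duration` seconds."""
    path = os.path.join(directory, "fsync_probe.tmp")  # hypothetical probe file name
    payload = os.urandom(block)
    count = 0
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        start = time.monotonic()
        deadline = start + duration
        while time.monotonic() < deadline:
            os.lseek(fd, 0, os.SEEK_SET)  # reuse the same block so the file stays small
            os.write(fd, payload)
            os.fsync(fd)                  # wait until the device reports the data as durable
            count += 1
        elapsed = time.monotonic() - start
    finally:
        os.close(fd)
        os.unlink(path)                   # remove the probe file
    return count / elapsed


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else tempfile.gettempdir()
    print(f"{fsyncs_per_second(target):.2f} fsyncs/second in {target}")
```

Overwriting one block keeps the probe file at 4 KiB even on very fast storage; appending instead would also exercise metadata updates, which may be closer to some real workloads.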


I just wanted to follow up on this, since I was able to pinpoint the issue to slow sync writes and IOPS on my Samsung 870 QVO drives (as was predicted by others earlier in this and other posts). I'm still not sure why the load averages started increasing significantly a few months ago, but if I run `zfs set sync=disabled rpool`, the `fsyncs` metric is in the thousands and `fio` tests are comparable to the ones in the Proxmox ZFS benchmark paper.

Now it's time to decide whether we upgrade our drives or risk leaving sync disabled. (For anyone else considering leaving sync disabled, be sure you have good battery backups and data backups, since data loss and corruption are significantly more likely.)

For anyone interested in the fio tests with sync enabled vs disabled:

**WARNING: Do not run the commands in the screenshot above if you have data on your disks.**


To compare, my Intel S3610 enterprise SATA SSDs (pretty cheap these days) show the following fsync value (running a ZFS mirrored pool):

`FSYNCS/SECOND: 6488.14`

Compared to the result of that QVO 870, it's only 300 times faster.

Using enterprise SSDs is really worth it, even if you use desktop components for everything else (CPU, RAM, network).

18 times *

What do you have? I'm kinda interested.

18 times faster than yours, yes, but meichtys has fsyncs of 20 or less, and there it's 300 times faster.
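The two ratios in this exchange follow from simple division of the reported `FSYNCS/SECOND` values. Since the QVO figure is an upper bound ("under 20"), the S3610 ratio against it is a lower bound. The second value is only implied: it assumes the "18 times" correction is exact, which the thread does not state.

```python
S3610_FSYNCS = 6488.14  # FSYNCS/SECOND reported for the Intel S3610 ZFS mirror
QVO_FSYNCS_MAX = 20.0   # "consistently under 20" on the 870 QVO (upper bound)


def speedup(fast: float, slow: float) -> float:
    """How many times more fsyncs/second `fast` achieves than `slow`."""
    if slow <= 0:
        raise ValueError("slow must be positive")
    return fast / slow


# Lower bound, because the QVO value is at most 20.
min_ratio_vs_qvo = speedup(S3610_FSYNCS, QVO_FSYNCS_MAX)
# Implied fsync rate of the other system, assuming "18 times" is exact.
implied_other = S3610_FSYNCS / 18

print(f"S3610 vs 870 QVO: at least {min_ratio_vs_qvo:.0f}x")
print(f"'18 times' implies about {implied_other:.0f} fsyncs/second")
```

So "300 times" is, if anything, a slight understatement for the QVO comparison.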


The SSDs I use are simple Intel S3610 enterprise SATA SSDs (I run them as a ZFS mirror on the onboard SATA connectors).

I usually buy mine off AliExpress (https://www.aliexpress.com/item/1005004979930308.html, those are the ones I'm running).

They were brand new, with 0 hours and no data written to them.

When I bought them, they were 100 euros each.

The drives I'm using also have 10,700 TBW vs. 720 TBW for the 2TB 870 QVO, so about 15 times more write endurance.
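The endurance comparison is again plain division. The sketch below reproduces the "about 15 times" figure and, purely as an illustration, converts TBW into years of service at a hypothetical 0.2 TB written per day (that rate is my assumption, not a number from the thread, and it ignores extra write amplification from ZFS or sync-heavy workloads).

```python
S3610_TBW = 10_700     # rated endurance quoted for the Intel S3610 drives (TB written)
QVO_2TB_TBW = 720      # rated endurance quoted for the 2TB 870 QVO (TB written)
DAILY_WRITES_TB = 0.2  # hypothetical host write volume per day


def endurance_ratio(tbw_a: float, tbw_b: float) -> float:
    """How many times more data drive A is rated to absorb than drive B."""
    return tbw_a / tbw_b


def years_until_tbw(tbw: float, tb_written_per_day: float) -> float:
    """Years until the rated TBW is reached at a constant daily write volume."""
    return tbw / tb_written_per_day / 365


print(f"Endurance ratio: {endurance_ratio(S3610_TBW, QVO_2TB_TBW):.1f}x")
for name, tbw in [("Intel S3610", S3610_TBW), ("870 QVO 2TB", QVO_2TB_TBW)]:
    print(f"{name}: about {years_until_tbw(tbw, DAILY_WRITES_TB):.1f} years at "
          f"{DAILY_WRITES_TB} TB/day")
```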