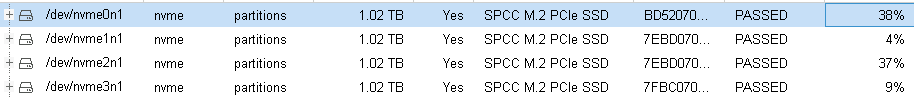

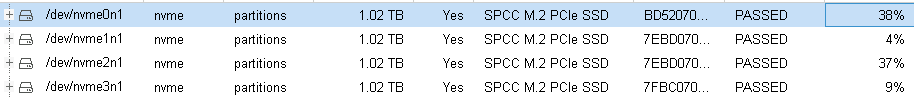

I recently installed four NVMe SSDs in a Proxmox 6 server as a RAIDZ array, only to discover that, according to the web interface, two of the drives exhibit huge wearout after only a few weeks of use.

Since these are among the highest-endurance consumer SSDs, with a 1665 TBW warranty at one terabyte of capacity, I was curious to see the raw data: it looked very strange that two (but only two) of these drives would have written over 600 TB of data.

Fortunately, smartctl paints an entirely different picture, which is more in line with what was happening on this server:

Bash:

    root@proxmox:~# smartctl -x /dev/nvme0n1 |grep Units
    Data Units Read: 27,648,484 [14.1 TB]
    Data Units Written: 41,208,339 [21.0 TB]
    root@proxmox:~# smartctl -x /dev/nvme1n1 |grep Units
    Data Units Read: 22,518,995 [11.5 TB]
    Data Units Written: 39,442,838 [20.1 TB]
    root@proxmox:~# smartctl -x /dev/nvme2n1 |grep Units
    Data Units Read: 27,764,384 [14.2 TB]
    Data Units Written: 41,089,460 [21.0 TB]
    root@proxmox:~# smartctl -x /dev/nvme3n1 |grep Units
    Data Units Read: 22,794,746 [11.6 TB]
    Data Units Written: 39,431,075 [20.1 TB]

Any idea what could be the cause of this discrepancy, and especially why two of the drives show wildly different wearout levels?
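For reference, the bracketed terabyte figures can be cross-checked by hand from the raw counters. The sketch below assumes the NVMe convention that one data unit is 1000 × 512 bytes (512,000 bytes). The helper names `units_to_tb` and `tbw_percent` are made up for this example and are not part of smartctl, and results are rounded, so they can differ from smartctl's bracketed value in the last digit.

```shell
#!/bin/sh
# Convert an NVMe "Data Units" counter to decimal terabytes.
# Assumption: one data unit = 1000 * 512 bytes = 512,000 bytes.
units_to_tb() {
    # Strip thousands separators such as "41,208,339" before doing arithmetic.
    units=$(printf '%s' "$1" | tr -d ',')
    awk -v u="$units" 'BEGIN { printf "%.2f\n", u * 512000 / 1e12 }'
}

# Express a written-TB figure as a percentage of a rated TBW value.
tbw_percent() {
    awk -v tb="$1" -v tbw="$2" 'BEGIN { printf "%.2f\n", tb / tbw * 100 }'
}

# Raw counter reported above for /dev/nvme0n1, checked against the 1665 TBW rating.
written=$(units_to_tb "41,208,339")
echo "written: ${written} TB"
echo "share of 1665 TBW: $(tbw_percent "$written" 1665) %"
```

For the counters above this works out to roughly 21 TB written per drive, a little over 1% of the rated 1665 TBW, which is nowhere near the 600+ TB the web interface seems to imply for two of the drives.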
