Hi

I had issues upgrading my Proxmox from v7 to v8.

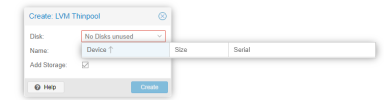

I have now backed up all of the container's disks to external storage and want to import them. But when I try to copy a disk, it tells me that the disk is full and there is no space left, even though there is nothing on it at all except Proxmox itself. I'm a bit confused about why there is no space left. Does Proxmox reserve all the space on the hard disk?

Also, I cannot see any resource that I could assign new containers or VMs to.

Help please, I'm really confused and am just about to install v7 again and try it with that one.

kind regards

mcflux
