Good day, I was wondering how to get rid of this error.

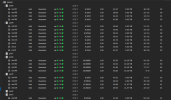

Currently everything is operating as normal.

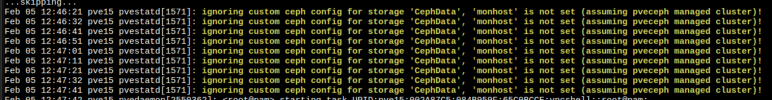

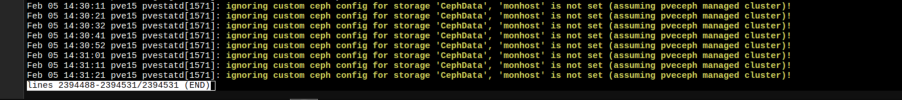

```
Jun 28 14:37:01 pve13 pvestatd[1495]: ignoring custom ceph config for storage 'CephData', 'monhost' is not set (assuming pveceph managed cluster)!
Jun 28 14:37:12 pve13 pvestatd[1495]: ignoring custom ceph config for storage 'CephData', 'monhost' is not set (assuming pveceph managed cluster)!
Jun 28 14:37:21 pve13 pvestatd[1495]: ignoring custom ceph config for storage 'CephData', 'monhost' is not set (assuming pveceph managed cluster)!
Jun 28 14:37:31 pve13 pvestatd[1495]: ignoring custom ceph config for storage 'CephData', 'monhost' is not set (assuming pveceph managed cluster)!
Jun 28 14:37:41 pve13 pvestatd[1495]: ignoring custom ceph config for storage 'CephData', 'monhost' is not set (assuming pveceph managed cluster)!
Jun 28 14:37:51 pve13 pvestatd[1495]: ignoring custom ceph config for storage 'CephData', 'monhost' is not set (assuming pveceph managed cluster)!
Jun 28 14:38:01 pve13 pvestatd[1495]: ignoring custom ceph config for storage 'CephData', 'monhost' is not set (assuming pveceph managed cluster)!
Jun 28 14:38:11 pve13 pvestatd[1495]: ignoring custom ceph config for storage 'CephData', 'monhost' is not set (assuming pveceph managed cluster)!
Jun 28 14:38:21 pve13 pvestatd[1495]: ignoring custom ceph config for storage 'CephData', 'monhost' is not set (assuming pveceph managed cluster)!
Jun 28 14:38:31 pve13 pvestatd[1495]: ignoring custom ceph config for storage 'CephData', 'monhost' is not set (assuming pveceph managed cluster)!
Jun 28 14:38:41 pve13 pvestatd[1495]: ignoring custom ceph config for storage 'CephData', 'monhost' is not set (assuming pveceph managed cluster)!
```

Currently everything is operating as normal.