I've been chasing a ghost since upgrading to Proxmox 8.3 (correlation is not causation, so I can't point to 8.3 just yet). Random LXC containers start to exhibit I/O errors. This is only noticed when the nightly backup runs, because it fails on those specific LXCs. The storage is local, on ZFS. ZFS scrubs are clean, with no checksum or I/O errors. The backup tells me which file is giving the I/O error, and if I go into the container and try to read that file, I get the same I/O error.

Initially I thought it was a disk error, but ZFS is not complaining and SMART status is fine, with no media or read errors. I restored the container from backup and didn't dive deeper. The next day another LXC container started to have the same issue, and then another one. I am now on my 3rd LXC container with this issue. I finally started to dive a bit deeper, doing scrubs, etc., and could not find anything.

Since LXC containers on ZFS are just ZFS datasets (subvols), I can go on the host and navigate around in them. The same file that gives the I/O error from inside the LXC container reads just fine from the host. The issue seems to be isolated to the Proxmox services and the processes inside the LXC container itself. On my other containers that had this issue, random files started to give I/O errors too.

I know this looks like a disk error, but the fact that the file can be read from the host, and that there are no ZFS errors or SMART errors, leads me to believe that something else is going on. QEMU VMs on the same storage are not impacted. Has anyone seen this?

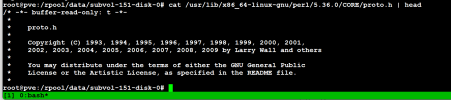

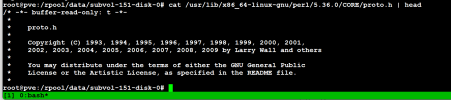

I/O error

The file is actually readable from the host. Reading any of the data that errors inside the LXC, or that the backup complains about, produces no I/O errors on the host. If I try reading the same file from inside the LXC container, I get an I/O error.
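
To make the host-versus-container comparison repeatable, here is a minimal sketch of a read check that walks a file chunk by chunk and records which offsets fail and with which errno. The function name `find_unreadable_ranges` and the 1 MiB chunk size are my own choices for illustration, not anything Proxmox or ZFS provides, and it only reports what `read` returns; it does not explain why the two views differ.

```python
import errno
import os
import sys


def find_unreadable_ranges(path, chunk_size=1024 * 1024):
    """Read `path` chunk by chunk; return (offset, length, errno_name) per failed read.

    Hypothetical helper for illustration. An empty list means every chunk was readable.
    """
    failures = []
    fd = os.open(path, os.O_RDONLY)
    try:
        size = os.fstat(fd).st_size
        offset = 0
        while offset < size:
            length = min(chunk_size, size - offset)
            try:
                # pread reads at an explicit offset, so one failed chunk
                # does not stop us from testing the chunks after it.
                os.pread(fd, length, offset)
            except OSError as exc:
                name = errno.errorcode.get(exc.errno, str(exc.errno))
                failures.append((offset, length, name))
            offset += length
    finally:
        os.close(fd)
    return failures


if __name__ == "__main__":
    for target in sys.argv[1:]:
        try:
            bad = find_unreadable_ranges(target)
        except OSError as exc:
            # The open or stat itself failed (for example EIO or ENOENT).
            print(f"{target}: cannot open: {exc}")
            continue
        if not bad:
            print(f"{target}: all chunks readable")
        for offset, length, name in bad:
            print(f"{target}: {name} at offset {offset} (length {length})")
```

Saved under a name of your choosing, the script can be run once inside the container against the failing file and once on the host against the same file as seen through the container's dataset mount. If the container run lists failed offsets while the host run reports all chunks readable, that matches what I am seeing.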

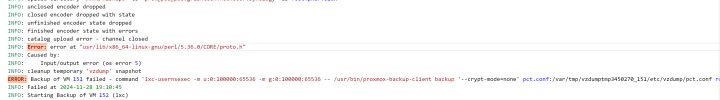

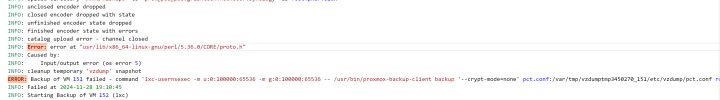
