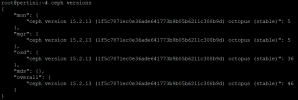

Hi! Last night we upgraded our production 9-node cluster from PVE 5.4 to PVE 6.4, and Ceph from 12 (Luminous) to 14 (Nautilus) and then to 15 (Octopus), following the official guides. Everything went smoothly and all running VMs stayed online during the upgrade, so we're very happy with how it went. Now both the cluster and Ceph report HEALTH_OK and are stable, with no rebalancing or recovery in progress.

But our monitoring system (Zabbix-based) reports that the OSDs (all NVMe SSDs, 4 on each of the 9 nodes, 36 in total) frequently spike to 100% I/O utilisation. Analysing the data and comparing it with the data from a few days ago, we realised that although the read/write bandwidth and IOPS from the VMs to Ceph are similar (the VMs have the same load they had a few days ago), the individual NVMe SSDs perform far more reads and writes: roughly 50 times as many!
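To put a number on that factor of 50 independently of Zabbix, per-device rates can be computed from two snapshots of the Linux `/proc/diskstats` counters and compared with the client write rate shown in the `io:` section of `ceph -s`. The following is only a minimal Python sketch, assuming the standard kernel diskstats field layout; the function names are hypothetical helpers, not part of Ceph or Proxmox.

```python
import re

# Field indices (0-based) in a /proc/diskstats line:
# 2 = device name, 3 = reads completed, 5 = sectors read,
# 7 = writes completed, 9 = sectors written (sectors are always 512 bytes).
SECTOR_BYTES = 512
NVME_NAMESPACE = re.compile(r"nvme\d+n\d+")  # whole NVMe devices, not partitions


def parse_diskstats(text):
    """Map device name -> (reads, writes, sectors_read, sectors_written)."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 14:
            continue  # skip malformed or truncated lines
        stats[fields[2]] = (int(fields[3]), int(fields[7]),
                            int(fields[5]), int(fields[9]))
    return stats


def snapshot(path="/proc/diskstats"):
    """Read the current cumulative counters for all block devices."""
    with open(path) as f:
        return parse_diskstats(f.read())


def nvme_rates(before, after, interval_s):
    """Per-NVMe-device IOPS and MB/s between two snapshots."""
    rates = {}
    for dev, now in after.items():
        if not NVME_NAMESPACE.fullmatch(dev) or dev not in before:
            continue
        reads, writes, s_read, s_written = (
            n - b for n, b in zip(now, before[dev]))
        rates[dev] = {
            "read_iops": reads / interval_s,
            "write_iops": writes / interval_s,
            "read_mbps": s_read * SECTOR_BYTES / 1e6 / interval_s,
            "write_mbps": s_written * SECTOR_BYTES / 1e6 / interval_s,
        }
    return rates


def write_ratio(rates, client_write_iops):
    """Total device write IOPS divided by client write IOPS (from ceph -s)."""
    total = sum(r["write_iops"] for r in rates.values())
    return total / client_write_iops if client_write_iops else float("inf")
```

For example: take `before = snapshot()`, wait 60 seconds, take `after = snapshot()`, then pass `nvme_rates(before, after, 60)` together with the `op/s wr` figure from `ceph -s` to `write_ratio`. With replicated pools each client write already lands on several OSDs, so a ratio above 1 is expected; what matters is how it compares with the same measurement from before the upgrade. The counters are per node, so either run it on all nine nodes and add up the totals, or compare one node against its own history.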

I'm afraid this will greatly accelerate SSD wear and, under high VM load, also degrade performance (for now client-side performance remains good, but August is not a busy month and the VMs are very underutilised).

Curiously, traffic on the 10 Gb/s network dedicated to Ceph has not increased at all. So what data is Ceph continuously reading and writing on the OSDs? Is it only moving data between the OSDs within each node? Or is it perhaps doing some kind of internal format conversion?

Do you have any ideas? Thank you very much!