Hi @bbgeek17, I followed the blog on creating an OCFS2 file system, but it's not letting me create VMs anymore. I have three PVE nodes. I think I created the cluster file system correctly, but I'm not sure what I missed. Can you please provide more guidance?

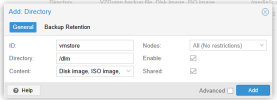


Here is my cluster configuration, which I created with the o2cb tool:

Code:

    root@pve-node1:/etc/ocfs2# cat /etc/ocfs2/cluster.conf
    cluster:
        name = CSTLab
        heartbeat_mode = local
        node_count = 3

    node:
        cluster = CSTLab
        number = 0
        ip_port = 7777
        ip_address = 10.xx.xx.xx
        name = pve-node1

    node:
        cluster = CSTLab
        number = 1
        ip_port = 7777
        ip_address = 10.xx.xx.xx
        name = pve-node2

    node:
        cluster = CSTLab
        number = 2
        ip_port = 7777
        ip_address = 10.xx.xx.xx
        name = pve-node3

And here is my multipath setup:

Code:

    root@pve-node1:/etc/ocfs2# multipath -ll
    mpath0 (3600c0ff000f823c079d2346701000000) dm-5 HPE,MSA 2060 FC
    size=8.7T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    |-+- policy='service-time 0' prio=50 status=active
    | |- 1:0:0:1 sdb 8:16 active ready running
    | `- 2:0:1:1 sde 8:64 active ready running
    `-+- policy='service-time 0' prio=10 status=enabled
      |- 2:0:0:1 sdd 8:48 active ready running
      `- 1:0:1:1 sdc 8:32 active ready running
    root@pve-node1:/etc/ocfs2#

It looks like the cluster filesystem is seen by all three nodes:

Code:

    root@pve-node1:/etc/ocfs2# mounted.ocfs2 -d
    Device              Stack  Cluster  F  UUID                              Label
    /dev/sdb            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/sdc            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/mapper/mpath0  o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/sdd            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/sde            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve

Code:

    root@pve-node2:~# mounted.ocfs2 -d
    Device              Stack  Cluster  F  UUID                              Label
    /dev/sdb            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/sdc            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/mapper/mpath0  o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/sdd            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/sde            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    root@pve-node2:~#

Code:

    root@pve-node3:/etc/ocfs2# mounted.ocfs2 -d
    Device              Stack  Cluster  F  UUID                              Label
    /dev/sdb            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/sdc            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/mapper/mpath0  o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/sdd            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve
    /dev/sde            o2cb               429616C8E41F452AB59C6B4B1381539B  vmstore-lve

I created the OCFS2 file system from node1, and I am trying to create a Directory storage at the path /media/vmstore, so I edited the /etc/fstab file on node2 and node3 like this:

Code:

    root@pve-node2:~# cat /etc/fstab
    # <file system> <mount point> <type> <options> <dump> <pass>
    /dev/pve/root / ext4 errors=remount-ro 0 1
    UUID=4235-CD21 /boot/efi vfat defaults 0 1
    /dev/pve/swap none swap sw 0 0
    proc /proc proc defaults 0 0
    /dev/mapper/mpath0 /media/vmstore ocfs2 _netdev,nointr 0 0
    root@pve-node2:~#

Code:

    root@pve-node3:/etc/ocfs2# cat /etc/fstab
    # <file system> <mount point> <type> <options> <dump> <pass>
    /dev/pve/root / ext4 errors=remount-ro 0 1
    UUID=C5DE-8982 /boot/efi vfat defaults 0 1
    /dev/pve/swap none swap sw 0 0
    proc /proc proc defaults 0 0
    /dev/mapper/mpath0 /media/vmstore ocfs2 _netdev,nointr 0 0
    root@pve-node3:/etc/ocfs2#

However, when I try to create a VM, I get an error saying that my storage cannot be activated because it is not mounted.
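To narrow down the "not mounted" part, here is a minimal sketch (not taken from the blog) that lists the ocfs2 mount points declared in an fstab file and reports whether each one shows up as an ocfs2 mount in the mount table. The function names `fstab_ocfs2_mountpoints` and `report_unmounted` are made up for illustration, and it assumes the mount table is readable at /proc/mounts and that mount points contain no spaces.

```shell
# Print the mount point (field 2) of every non-comment fstab line whose
# filesystem type (field 3) is exactly "ocfs2".
fstab_ocfs2_mountpoints() {
    awk '$1 !~ /^#/ && $3 == "ocfs2" { print $2 }' "${1:-/etc/fstab}"
}

# For each ocfs2 mount point in the fstab file ($1, default /etc/fstab),
# report whether it is listed with type ocfs2 in the mount table
# ($2, default /proc/mounts).
report_unmounted() {
    fstab="${1:-/etc/fstab}"
    mounts="${2:-/proc/mounts}"
    fstab_ocfs2_mountpoints "$fstab" | while read -r mp; do
        if awk -v mp="$mp" '$2 == mp && $3 == "ocfs2" { found = 1 } END { exit !found }' "$mounts"; then
            echo "$mp: mounted"
        else
            echo "$mp: NOT mounted"
        fi
    done
}
```

Running `report_unmounted` with no arguments on a node would read that node's /etc/fstab and /proc/mounts. An fstab entry only describes a mount; it does not by itself mean the file system is currently mounted.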

On node1, the OCFS2 file system seems to be mounted at /dlm:

Code:

    root@pve-node1:/etc/ocfs2# mount -l | grep ocfs2
    ocfs2_dlmfs on /dlm type ocfs2_dlmfs (rw,relatime)

So does this mean I need to create the Directory storage at the path /dlm, and not at just any path I want, as I tried to do with /media/vmstore? What do you think?
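One caveat with the command above is that `grep ocfs2` matches any line containing that string, including the `ocfs2_dlmfs` type, which as far as I understand is the lock manager's pseudo-filesystem and not the data volume. A minimal sketch of a stricter filter follows. The function name `ocfs2_data_mounts` is hypothetical, and it assumes the usual `<device> on <mountpoint> type <fstype> (<options>)` layout with no spaces in the paths.

```shell
# Read `mount`-style lines from stdin and print "<device> <mountpoint>"
# only when the filesystem type is exactly "ocfs2", so that entries of
# type ocfs2_dlmfs are skipped.
ocfs2_data_mounts() {
    awk '$2 == "on" && $4 == "type" && $5 == "ocfs2" { print $1, $3 }'
}
```

It could be used as `mount -l | ocfs2_data_mounts`. For the node1 output shown above it would print nothing, since the only matching line has type `ocfs2_dlmfs`. Running `mount -t ocfs2` should give a similar view without any helper.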

I will give it another try, but I wanted to run it by you first and see if anything looks out of place in my configuration. Thanks.
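As one small sanity check on the cluster configuration, here is a rough sketch that compares the `node_count` value in a cluster.conf file with the number of `node:` stanzas it contains. The function name `check_node_count` is hypothetical, and the parsing assumes the simple `key = value` layout shown in my cluster.conf. It does not validate anything else about the file.

```shell
# Compare the declared node_count with the number of "node:" stanzas.
# Usage: check_node_count /etc/ocfs2/cluster.conf
check_node_count() {
    conf="$1"
    # Count lines that consist only of the stanza header "node:".
    stanzas=$(grep -c '^[[:space:]]*node:[[:space:]]*$' "$conf")
    # Extract N from the "node_count = N" line, stripping whitespace.
    declared=$(awk -F= '/^[[:space:]]*node_count[[:space:]]*=/ { gsub(/[[:space:]]/, "", $2); print $2 }' "$conf")
    if [ "$stanzas" = "$declared" ]; then
        echo "ok: $stanzas nodes"
    else
        echo "mismatch: node_count=$declared but $stanzas node stanzas"
        return 1
    fi
}
```

With the three-node file from this post, it should report that the count and the stanzas agree.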
