Anyone have specific instructions on how to move the ceph-mon directory to a new partition after a default Proxmox install?

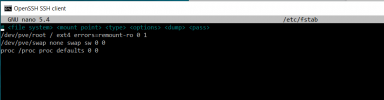

I have an 80GB SSD for the OS and PM, and it keeps filling up with routine OS and PM updates. That pushes Ceph into warn/critical and shuts it down even though there is still space left on the drive, because of some underlying "safety" config defaults that shut down the Ceph mon (and with it the pool) once free space drops below a certain percentage of the partition it lives on, regardless of how many MB or GB are actually left... seems no bueno... Anyhow, I have a pile of spare 80GB SSDs from server pulls and would like to add another drive to each failing node and migrate only the Ceph services to a partition on that new SSD/HDD, since the main OS drive is running low.
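For reference, the "safety" defaults in question are most likely the monitor options `mon_data_avail_warn` (default 30, raises the `MON_DISK_LOW` health warning) and `mon_data_avail_crit` (default 5, the mon shuts down). Both are percentages of the filesystem holding the mon data directory, which is why the GB left doesn't matter. Below is a minimal Python sketch that only mimics that comparison (it is not Ceph's code), assuming the Proxmox layout where mon data lives under `/var/lib/ceph/mon/ceph-<nodename>`; the function names are made up for illustration.

```python
import shutil

# Ceph monitor defaults, as a percent of the filesystem holding the mon data dir.
MON_DATA_AVAIL_WARN = 30  # at or below this: MON_DISK_LOW health warning
MON_DATA_AVAIL_CRIT = 5   # at or below this: the monitor shuts down


def mon_space_status(total_bytes: int, avail_bytes: int,
                     warn_pct: float = MON_DATA_AVAIL_WARN,
                     crit_pct: float = MON_DATA_AVAIL_CRIT) -> str:
    """Classify free space by percentage, the way the mon thresholds do (not by GB)."""
    avail_pct = 100 * avail_bytes / total_bytes
    if avail_pct <= crit_pct:
        return "CRIT"
    if avail_pct <= warn_pct:
        return "WARN"
    return "OK"


def check_path(path: str) -> str:
    """Check the filesystem containing `path`, e.g. the (assumed) mon data directory."""
    usage = shutil.disk_usage(path)  # .free excludes blocks reserved for root
    return mon_space_status(usage.total, usage.free)


if __name__ == "__main__":
    GB = 10**9
    # 80 GB root with 20 GB left: plenty in absolute terms, but only 25% free.
    print(mon_space_status(80 * GB, 20 * GB))  # WARN
    print(check_path("/"))
```

On a real node you would point `check_path` at the mon directory instead of `/`; either way it reports on the whole partition, which is exactly the problem when that partition is shared with the OS.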

It seems that anything on the PVE root partition, like logs and other services, can fill the drive up to that level, especially distribution updates and PM update packages, which wreck my pool every time...
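To see what is actually eating the root partition before moving anything, something like the following Python sketch can help. The `CANDIDATES` list is a hypothetical set of usual suspects on a PVE root (logs, the apt package cache, the default `local` storage at `/var/lib/vz`, Ceph's own state); adjust it to your node. It sums apparent file sizes and does not descend into other mounted filesystems.

```python
import os
import stat

# Hypothetical list of usual suspects on a PVE root filesystem; adjust as needed.
CANDIDATES = ["/var/log", "/var/cache/apt/archives", "/var/lib/vz", "/var/lib/ceph", "/boot"]


def dir_size(path: str) -> int:
    """Sum apparent sizes of regular files under `path`, staying on one filesystem."""
    try:
        root_dev = os.lstat(path).st_dev
    except OSError:
        return 0  # missing or unreadable path
    total = 0
    for root, dirs, files in os.walk(path, onerror=lambda err: None):
        # Prune subdirectories that are mount points of other filesystems.
        kept = []
        for d in dirs:
            try:
                if os.lstat(os.path.join(root, d)).st_dev == root_dev:
                    kept.append(d)
            except OSError:
                pass
        dirs[:] = kept
        for name in files:
            try:
                st = os.lstat(os.path.join(root, name))
            except OSError:
                continue  # file vanished or is unreadable
            if stat.S_ISREG(st.st_mode):  # skip symlinks, sockets, etc.
                total += st.st_size
    return total


def report(paths):
    """Return (path, bytes) pairs sorted largest first."""
    return sorted(((p, dir_size(p)) for p in paths), key=lambda t: t[1], reverse=True)


if __name__ == "__main__":
    for path, size in report(CANDIDATES):
        print(f"{size / 2**30:8.2f} GiB  {path}")
```

Run it as root so unreadable directories under `/var/log` are not silently skipped.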

CEPH man suggested 60GB dedicated mon partition per mon per node...
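Assuming the default thresholds (30% for the warning, 5% for the shutdown), here is what they mean in absolute terms for the shared 80 GB root versus a dedicated 60 GB mon partition. On a dedicated partition only the mon store itself consumes that headroom, so OS updates and logs can no longer push it over the line:

$$
\begin{aligned}
\text{80 GB shared root:}\quad & \text{warn when free} \le 0.30 \times 80\ \text{GB} = 24\ \text{GB}, \quad \text{shutdown when free} \le 0.05 \times 80\ \text{GB} = 4\ \text{GB} \\
\text{60 GB dedicated mon:}\quad & \text{warn when free} \le 0.30 \times 60\ \text{GB} = 18\ \text{GB}, \quad \text{shutdown when free} \le 0.05 \times 60\ \text{GB} = 3\ \text{GB}
\end{aligned}
$$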

So, Q: What is the best way to add a new drive and dedicate it to Ceph?