Backup Size - Size of the backup ignoring dedup: sum of all the chunks in use by the backup. This would be a more appropriate size to be returned to Proxmox VE, rather than a useless Virtual HD size definition.

I agree that the VHD size is not really useful, but this metric is hard to gather due to the deduplication. PVE / proxmox-backup-client would need to measure how much (non-zero) data was actually read and provide this metric to PBS, because PBS is unable(?) to calculate this on its own as the deduplicated pool is shared across backups.

And what about dirty-bitmaps? That'd ruin this metric instantly, because the backup client does not need to read everything.

Maybe some tricky math could still lead to a useful value, but that would make things even more complicated.

Delta Size - Size of the delta at the time of the backup: new chunks written.

Assuming there is an existing backup, this would be rather easy to calculate then.

But counting the number of new chunks written by PBS would be yet another metric, because PBS only writes a new chunk if the pool does not already have it stored - which means no other backup has already created that chunk.

That also makes this metric kinda useless, because other backups could reference the same chunks in the future and would then report far fewer "new chunks written" in their backup jobs, which makes the "new chunks written" value heavily misleading imho.

I'd prefer something like the cumulative size of all chunks referenced by a backup as an additional "size" metric - this could provide a potentially more accurate number, and on top of that, PBS can calculate *and update* this value on its own and does not rely on valid input data from backup clients to maintain those metrics.
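To illustrate why "new chunks written" is misleading, here is a minimal sketch that replays two backups against an empty shared pool in both orders. The chunk digests (`c1`, `c2`, ...) and the function name are made up for illustration and are not PBS internals; the only assumption taken from above is that a chunk is written only if the pool does not already contain it.

```python
def new_chunks_written(backups):
    """Replay backups in order against an empty shared chunk pool.

    `backups` is a list of (name, set_of_chunk_digests) pairs. Returns a dict
    mapping each backup name to the number of chunks it had to write.
    """
    pool = set()
    written = {}
    for name, chunks in backups:
        fresh = chunks - pool  # chunks no earlier backup has stored yet
        written[name] = len(fresh)
        pool |= fresh
    return written


# Two hypothetical VMs that share three of their four chunks.
vm_a = ("vm-a", {"c1", "c2", "c3", "c4"})
vm_b = ("vm-b", {"c1", "c2", "c3", "c5"})

print(new_chunks_written([vm_a, vm_b]))  # {'vm-a': 4, 'vm-b': 1}
print(new_chunks_written([vm_b, vm_a]))  # {'vm-b': 4, 'vm-a': 1}
```

Both VMs reference four chunks, yet whichever one happens to run second reports only a single new chunk.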

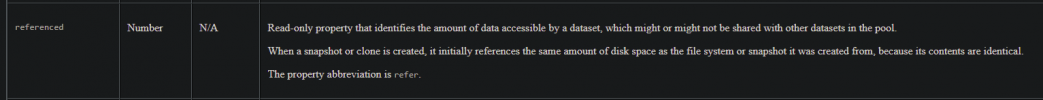

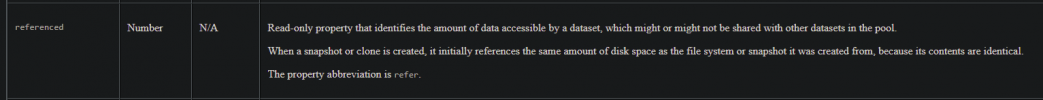

Something like the "referenced" value that ZFS provides.

Image is from

https://docs.oracle.com/cd/E19253-01/819-5461/gazss/index.html
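A rough sketch of what such a "referenced" size could look like, assuming a backup index is just a list of chunk digests and the size of every chunk is known. The names `referenced_size`, `chunk_sizes` and the digests are hypothetical, and counting each distinct chunk only once per backup is my assumption, not something PBS does today.

```python
def referenced_size(index, chunk_sizes):
    """Sum the sizes of the distinct chunks referenced by one backup index."""
    return sum(chunk_sizes[digest] for digest in set(index))


MiB = 1024 * 1024

# Hypothetical chunk store: digest -> chunk size in bytes.
chunk_sizes = {"zero": 4 * MiB, "c1": 4 * MiB, "c2": 4 * MiB}

# Hypothetical indexes; index_a references the all-zero chunk three times.
index_a = ["c1", "zero", "zero", "zero", "c2"]
index_b = ["c1", "zero"]

print(referenced_size(index_a, chunk_sizes) // MiB)  # 12
print(referenced_size(index_b, chunk_sizes) // MiB)  # 8
```

The value depends only on the backup's own index and the chunk store, so it does not change with backup order and could be recomputed on the server side at any time without input from the client.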

@dcsapak What do you think about this? Is something like that practicable?

On a sidenote:

When calculating the referenced size of the backup, it'd be interesting to have ZFS compression ignored (or additionally shown), because a high compression ratio could also make the backup look way smaller than it actually is.

For example, I have a recordsize of 4M, and with zstd I usually get compression ratios between 2.0x and 3.0x.

IIRC there was a way to read the uncompressed size of a file (ignoring ZFS' transparent compression and showing the original file size), and another way to read the size that ZFS reports on disk, which is smaller if the file is compressed.

The same applies to PBS' built-in compression, which would also change the displayed size.
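One way to get both numbers on Linux is `stat`: `st_size` is the apparent (logical) file size, while `st_blocks` counts the 512-byte blocks actually allocated. As far as I know, on ZFS the allocated value reflects the size after compression, but treat that as an assumption and verify it on your own pool. The helper name `file_sizes` is made up for this sketch.

```python
import os
import tempfile


def file_sizes(path):
    """Return (apparent_bytes, allocated_bytes) for a file on Linux."""
    st = os.stat(path)
    apparent = st.st_size            # logical size, ignores compression
    allocated = st.st_blocks * 512   # st_blocks is in 512-byte units on Linux
    return apparent, allocated


# Write 4 MiB of zeroes to a temporary file and compare both sizes.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"\0" * (4 * 1024 * 1024))
    tmp.flush()
    os.fsync(tmp.fileno())

apparent, allocated = file_sizes(tmp.name)
os.unlink(tmp.name)

print(f"apparent: {apparent} bytes, allocated: {allocated} bytes")
```

On a compressing filesystem the allocated value should come out much smaller than the apparent one for a file like this; on a non-compressing filesystem the two will be roughly equal.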

I think a "referenced" size would be useful for guessing the required time to restore the VM.

If I have a 2TB VHD, will it take a minute to restore because it is 99% zeroes (which restore extremely fast on compressing storage like ZFS or Ceph), or will it take hours because there are actually tons of data inside?
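A back-of-the-envelope sketch of that estimate. The 200 MB/s restore throughput and the 90% fill level are invented numbers purely for illustration, and the time for restoring zeroes is assumed to be negligible.

```python
def estimate_restore_seconds(referenced_bytes, throughput_bytes_per_s):
    """Naive restore-time estimate: only non-zero data costs time."""
    return referenced_bytes / throughput_bytes_per_s


TB = 10**12
MB = 10**6

vhd_size = 2 * TB
throughput = 200 * MB  # assumed restore throughput, not a measured value

mostly_zero = vhd_size * 1 // 100   # 99% zeroes -> 20 GB of real data
mostly_full = vhd_size * 90 // 100  # hypothetical 90% full disk -> 1.8 TB

print(estimate_restore_seconds(mostly_zero, throughput))  # 100.0
print(estimate_restore_seconds(mostly_full, throughput))  # 9000.0
```

Same 2TB VHD size in both cases, but under these assumptions one restore takes under two minutes and the other two and a half hours, which the VHD size alone cannot tell you.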