Hi,

I'm just looking for some clarity and whether or not i'm thinking down the right path.

We have a Server which is got 5 different virtual machines, these are:

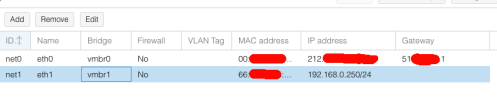

I'm now getting onto doing the DNS for the container and from previous examples we have, the DNS is pointing to an IP that is external (200... failover ip address) which is assigned to the NGINX container which should mean the NGINX container is accessible to the internet.

Question: Is it best practise here to get a failover IP or can I just use port forwarding i.e: in DNS set the A record to be the Main IP of the server and then use some port forwarding for the ip so that any traffic from the port 80 or 443 to be redirected to my NGINX container or is it just best to get a failover IP and then

Thanks for you any help

TLDR; In DNS, the A record has be an external IP, is it best to just buy a failover IP Address and assign it to my NGINX container OR is it best to use my Server's IP Address as the A record and then port forward for 80 and 443 to my NGINX container.

I'm looking for some clarity on whether I'm thinking along the right lines.

We have a server that hosts 5 different virtual machines:

- NGINX

- Database

- Search

- Redis

- The Code (Server)

I'm now setting up DNS for the NGINX container. In the previous examples we have, the DNS record points to an external IP (200..., a failover IP address) that is assigned to the NGINX container, which should make the NGINX container reachable from the internet.

Question: Is it best practice here to get a failover IP, or can I just use port forwarding? That is, set the A record in DNS to the main IP of the server and forward any traffic arriving on port 80 or 443 to my NGINX container. Or is it simply better to get a failover IP and assign it to the NGINX container?

Thanks for any help.

TL;DR: In DNS, the A record has to be an external IP. Is it best to buy a failover IP address and assign it to my NGINX container, or to use my server's IP address as the A record and port forward 80 and 443 to my NGINX container?
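
To make the port-forwarding option concrete, here is a rough sketch of the kind of rules I have in mind. It only builds and prints `iptables` DNAT commands rather than applying them. The interface name `eth0` and the container address `10.0.0.10` are placeholders I made up for illustration, not values from our setup.

```python
# Sketch only: prints the DNAT rules, does not apply them.
# Both values below are assumed placeholders, not real configuration.
PUBLIC_IFACE = "eth0"      # assumed name of the host's public interface
NGINX_ADDR = "10.0.0.10"   # assumed private IP of the NGINX container
PORTS = (80, 443)          # HTTP and HTTPS


def dnat_rule(port, iface=PUBLIC_IFACE, dest=NGINX_ADDR):
    """Build one iptables rule forwarding a TCP port on the host to the container."""
    return (
        f"iptables -t nat -A PREROUTING -i {iface} -p tcp "
        f"--dport {port} -j DNAT --to-destination {dest}:{port}"
    )


def forwarding_rules(ports=PORTS):
    """Return one DNAT rule per forwarded port."""
    return [dnat_rule(port) for port in ports]


if __name__ == "__main__":
    for rule in forwarding_rules():
        print(rule)
```

As far as I understand, the host would also need IP forwarding enabled (`net.ipv4.ip_forward=1`) and its FORWARD chain would have to allow this traffic, so this is only part of the picture.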