Hello, I have a high IO delay issue, peaking at 25%, that has been bothering me for quite some time. I've seen a few threads about it, but none of them solved my issue.

I've noticed that I experience it under these conditions:

- Copying/moving files within a Windows 11 VM.

- Installing macOS in a VM.

- Cloning a VM through Proxmox web admin.
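
To line these spikes up with specific actions, something like the following could be run on the Proxmox host while reproducing one of the cases above. It is only a rough sketch: it assumes the IO delay graph roughly tracks the kernel's `iowait` counter in `/proc/stat`, and the function names (`parse_cpu_line`, `iowait_percent`, `watch`) are made up for illustration.

```python
import time


def parse_cpu_line(stat_text):
    """Return the aggregate 'cpu' counters from /proc/stat text as ints."""
    for line in stat_text.splitlines():
        if line.startswith("cpu "):
            return [int(field) for field in line.split()[1:]]
    raise ValueError("no aggregate 'cpu' line found")


def iowait_percent(before, after):
    """Share of CPU time spent in iowait between two samples, in percent."""
    deltas = [b - a for a, b in zip(before, after)]
    # Fields: user nice system idle iowait irq softirq steal.
    # Guest fields are skipped because they are already counted in user/nice.
    total = sum(deltas[:8])
    if total <= 0:
        return 0.0
    return 100.0 * deltas[4] / total


def watch(samples=10, interval=1.0, path="/proc/stat"):
    """Print a timestamped iowait percentage once per interval."""
    with open(path) as f:
        previous = parse_cpu_line(f.read())
    for _ in range(samples):
        time.sleep(interval)
        with open(path) as f:
            current = parse_cpu_line(f.read())
        stamp = time.strftime("%H:%M:%S")
        print(f"{stamp} iowait {iowait_percent(previous, current):.1f}%")
        previous = current
```

Calling `watch(samples=60)` while a clone or file copy is in progress should give a per-second trace to compare against the graph in the web UI.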

My specs are as follows:

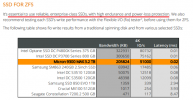

- Supermicro X11SPI-TF

- Intel Xeon Silver 4210T (10c/20t) Cascade Lake 2.3/3.2 GHz 95 W

- 224 GB DDR4 2400 ECC LRDIMM

- 2x Inland Professional 512 GB SSD - Mirrored

- Proxmox VE 7.3-3