Hello,

I recently built my new "server" for my home, and as the title states, experiencing very bad IO performance. The system is configured to have 2 SSDs as a ZFS mirror for the system itself (rpool) and has 4 more spinning disks of which again 2 are configured as a ZFS mirror.

The hardware of the system is as follows:

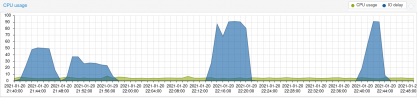

During install I selected to use ZFS and configured the two SSDs as RAID1 without error. I was also able to import each volume without issues, and it seems everything is working, only the performance of the SSDs is unbelievably bad. Making a backup of my MySQL server with about 250MB of data takes in the order of 10 minutes (read from SSD and write to SSD) and IO wait is about 30-35% all the time (till the backup is finished). The actual CPU load is in the order of 4-5% and RAM is about 85-90% without swapping anything. I tried shutting down all the guests (3 containers and 1 VM) but performance is pretty much unaffected. Thus, I think I can exclude my workload (Home Assistant and Plex) to be the reason for the clogged-up system which btw. was running fine on an Intel Celeron before.

To show some data about my ZFS settings I ran the following commands:

Note: I also tried removing one disk from the pool, but performance did not improve (or change at all to be honest). Only adding the disk to the pool again took like 36 hours of resilvering the data.

I did run smartctl, but it didn't report any issues and SSD wear also seems to be ok.

and for the second disk results are very similar, as both disks were new at the time of assembly.

I read (https://jrs-s.net/2018/03/13/zvol-vs-qcow2-with-kvm/) that ZFS can be best benchmarked by using a tool named ifo (which I never heard of before). But I run the recommended commands and thought maybe it helps someone to understand my issue if I copy the output here. The tests have been run with all guests turned off and a fresh rebooted system.

The test I ran were synchronous 4K writes:

Command: fio --name=random-write --ioengine=libaio --iodepth=4 --rw=randwrite --bs=4k --direct=0 --size=256m --numjobs=4 --end_fsync=1

On the SSD it printed the following results:

Note: During this test I observed IO waits of up 99.15% on the Proxmox summary page while my CPU load was around 1%.

While running the same test on the HDD mirror was way way faster and yielded the following results:

Note: During this test IO waits topped at 13% in the summary page.

Based on this results, it seems my SSD is 20x slower that my HDD? Can this be true, are they really that bad?

Any advice is very much appreciated!

Cheers,

Ralph

PS: Since this is my first post, I also like to say a big thank you to the people developing Proxmox VE - amazing job!

PPS: The full output of the commands is attached in a separate file. If you need any information, I'll be happy to provide what ever you need.

I recently built my new "server" for my home and, as the title states, I am experiencing very bad IO performance. The system is configured with 2 SSDs as a ZFS mirror for the system itself (rpool) and has 4 more spinning disks, 2 of which are again configured as a ZFS mirror.

The hardware of the system is as follows:

- CPU: Intel i5-4440 (4 cores) on a Gigabyte H97-D3H-CF with all RAID features disabled

- RAM: 16 GB (non-ECC, Kingston)

- 2 SSDs: Crucial BX500 (1TB) in a ZFS mirror (https://www.crucial.com/ssd/bx500/ct1000bx500ssd1)

- 2 HDDs: WD RED 4TB WD40EFAZ, again in a ZFS mirror

- 2 HDDs: WD RED 4TB, each a separate ZFS volume

During install I selected ZFS and configured the two SSDs as RAID1 without error. I was also able to import each volume without issues, and everything seems to be working; only the performance of the SSDs is unbelievably bad. Making a backup of my MySQL server with about 250MB of data takes on the order of 10 minutes (read from SSD and write to SSD), and IO wait stays at about 30-35% until the backup is finished. The actual CPU load is on the order of 4-5% and RAM usage is about 85-90% without any swapping. I tried shutting down all the guests (3 containers and 1 VM), but performance was pretty much unaffected. Thus, I think I can rule out my workload (Home Assistant and Plex) as the reason for the clogged-up system, which, by the way, was running fine on an Intel Celeron before.

To show some data about my ZFS settings I ran the following commands:

Code:

root@duckburg:~# zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

media1 3.62T 2.40T 1.23T - - 0% 66% 1.00x ONLINE -

media2 3.62T 1.47T 2.16T - - 0% 40% 1.00x ONLINE -

rpool 928G 515G 413G - - 33% 55% 1.00x ONLINE -

zfs-data 2.72T 426G 2.30T - - 0% 15% 1.00x ONLINE -

root@duckburg:~# zpool status rpool

pool: rpool

state: ONLINE

scan: scrub repaired 0B in 0 days 00:31:52 with 0 errors on Sun Jan 10 00:55:57 2021

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

ata-CT1000BX500SSD1_1951E230BD67-part3 ONLINE 0 0 0

ata-CT1000BX500SSD1_2012E295BD75-part3 ONLINE 0 0 0

errors: No known data errors

Code:

root@duckburg:~# zpool get all | grep ashift

media1 ashift 12 local

media2 ashift 12 local

rpool ashift 12 local

zfs-data ashift 12 local

Code:

root@duckburg:~# zpool get all rpool

NAME PROPERTY VALUE SOURCE

rpool size 928G -

rpool capacity 55% -

rpool altroot - default

rpool health ONLINE -

rpool guid 17740624895035484119 -

rpool version - default

rpool bootfs rpool/ROOT/pve-1 local

rpool delegation on default

rpool autoreplace off default

rpool cachefile - default

rpool failmode wait default

rpool listsnapshots off default

rpool autoexpand off default

rpool dedupditto 0 default

rpool dedupratio 1.00x -

rpool free 413G -

rpool allocated 515G -

rpool readonly off -

rpool ashift 12 local

rpool comment - default

rpool expandsize - -

rpool freeing 0 -

rpool fragmentation 33% -

rpool leaked 0 -

rpool multihost off default

rpool checkpoint - -

rpool load_guid 5700784901473667627 -

rpool autotrim off default

rpool feature@async_destroy enabled local

rpool feature@empty_bpobj active local

rpool feature@lz4_compress active local

rpool feature@multi_vdev_crash_dump enabled local

rpool feature@spacemap_histogram active local

rpool feature@enabled_txg active local

rpool feature@hole_birth active local

rpool feature@extensible_dataset active local

rpool feature@embedded_data active local

rpool feature@bookmarks enabled local

rpool feature@filesystem_limits enabled local

rpool feature@large_blocks enabled local

rpool feature@large_dnode enabled local

rpool feature@sha512 enabled local

rpool feature@skein enabled local

rpool feature@edonr enabled local

rpool feature@userobj_accounting active local

rpool feature@encryption enabled local

rpool feature@project_quota active local

rpool feature@device_removal enabled local

rpool feature@obsolete_counts enabled local

rpool feature@zpool_checkpoint enabled local

rpool feature@spacemap_v2 active local

rpool feature@allocation_classes enabled local

rpool feature@resilver_defer enabled local

rpool feature@bookmark_v2 enabled local

Note: I also tried removing one disk from the pool, but performance did not improve (or change at all, to be honest). The only effect was that adding the disk to the pool again took about 36 hours of resilvering.

I did run smartctl, but it didn't report any issues and SSD wear also seems to be ok.

Code:

root@duckburg:/zfs-data# smartctl -a /dev/sde

smartctl 7.1 2019-12-30 r5022 [x86_64-linux-5.4.78-2-pve] (local build)

Copyright (C) 2002-19, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: Crucial/Micron Client SSDs

Device Model: CT1000BX500SSD1

[...]

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

[...]

Power_Cycle_Count 0x0032 100 100 000 Old_age Always - 18

171 Program_Fail_Count 0x0032 100 100 000 Old_age Always - 0

172 Erase_Fail_Count 0x0032 100 100 000 Old_age Always - 0

173 Ave_Block-Erase_Count 0x0032 087 087 000 Old_age Always - 202

174 Unexpect_Power_Loss_Ct 0x0032 100 100 000 Old_age Always - 10

180 Unused_Reserve_NAND_Blk 0x0033 100 100 000 Pre-fail Always - 13

183 SATA_Interfac_Downshift 0x0032 100 100 000 Old_age Always - 0

184 Error_Correction_Count 0x0032 100 100 000 Old_age Always - 0

187 Reported_Uncorrect 0x0032 100 100 000 Old_age Always - 0

194 Temperature_Celsius 0x0022 065 048 000 Old_age Always - 35 (Min/Max 25/52)

196 Reallocated_Event_Count 0x0032 100 100 000 Old_age Always - 0

197 Current_Pending_ECC_Cnt 0x0032 100 100 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0030 100 100 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x0032 100 100 000 Old_age Always - 0

202 Percent_Lifetime_Remain 0x0030 087 087 001 Old_age Offline - 13

206 Write_Error_Rate 0x000e 100 100 000 Old_age Always - 0

210 Success_RAIN_Recov_Cnt 0x0032 100 100 000 Old_age Always - 0

246 Total_LBAs_Written 0x0032 100 100 000 Old_age Always - 63437345129

247 Host_Program_Page_Count 0x0032 100 100 000 Old_age Always - 1982417035

248 FTL_Program_Page_Count 0x0032 100 100 000 Old_age Always - 13068894592

249 Unkn_CrucialMicron_Attr 0x0032 100 100 000 Old_age Always - 0

250 Read_Error_Retry_Rate 0x0032 100 100 000 Old_age Always - 923940

251 Unkn_CrucialMicron_Attr 0x0032 100 100 000 Old_age Always - 32

252 Unkn_CrucialMicron_Attr 0x0032 100 100 000 Old_age Always - 0

253 Unkn_CrucialMicron_Attr 0x0032 100 100 000 Old_age Always - 0

254 Unkn_CrucialMicron_Attr 0x0032 100 100 000 Old_age Always - 209

223 Unkn_CrucialMicron_Attr 0x0032 100 100 000 Old_age Always - 10

SMART Error Log Version: 1

No Errors Logged

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Extended offline Completed without error 00% 4571 -

# 2 Extended offline Interrupted (host reset) 90% 4570 -

# 3 Short offline Interrupted (host reset) 90% 4569 -

Selective Self-tests/Logging not supported

For the second disk the results are very similar, as both disks were new at the time of assembly.
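As a rough sanity check on the SMART counters above, the raw values can be turned into a total write volume and an FTL-to-host page ratio. This is only a sketch: the 512-byte LBA size is an assumption (the sector size is in the elided part of the output), and reading 1 + FTL/host pages as a write amplification estimate is a commonly cited interpretation of attributes 247 and 248, not something the smartctl output itself confirms.

```python
# Raw values copied from the smartctl output above (/dev/sde).
TOTAL_LBAS_WRITTEN = 63_437_345_129   # attribute 246
HOST_PROGRAM_PAGES = 1_982_417_035    # attribute 247
FTL_PROGRAM_PAGES = 13_068_894_592    # attribute 248

# Assumption: one LBA is 512 bytes (not visible in the output shown).
LBA_BYTES = 512


def host_bytes_written(lbas: int, lba_bytes: int = LBA_BYTES) -> int:
    """Bytes the host has written, derived from the LBA counter."""
    return lbas * lba_bytes


def ftl_to_host_ratio(ftl_pages: int, host_pages: int) -> float:
    """Pages programmed by the drive's FTL per page programmed by the host."""
    return ftl_pages / host_pages


if __name__ == "__main__":
    written = host_bytes_written(TOTAL_LBAS_WRITTEN)
    ratio = ftl_to_host_ratio(FTL_PROGRAM_PAGES, HOST_PROGRAM_PAGES)
    print(f"Host writes: {written / 1e12:.1f} TB")
    print(f"FTL/host page ratio: {ratio:.2f}")
    # Assumed interpretation: write amplification is roughly 1 + ratio.
    print(f"Estimated write amplification: {1 + ratio:.2f}")
```

If those assumptions hold, the drive has taken roughly 32 TB of host writes and is doing several internal page writes for every host page write, which may or may not be related to the slow performance.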

I read (https://jrs-s.net/2018/03/13/zvol-vs-qcow2-with-kvm/) that ZFS is best benchmarked with a tool named fio (which I had never heard of before). I ran the recommended commands and thought it might help someone understand my issue if I copied the output here. The tests were run with all guests turned off on a freshly rebooted system.

The test I ran was synchronous 4K writes:

Command: fio --name=random-write --ioengine=libaio --iodepth=4 --rw=randwrite --bs=4k --direct=0 --size=256m --numjobs=4 --end_fsync=1

On the SSD it printed the following results:

Code:

Run status group 0 (all jobs):

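WRITE: bw=2734KiB/s (2799kB/s), 683KiB/s-811KiB/s (700kB/s-831kB/s), io=1024MiB (1074MB), run=323169-383596msec

Note: During this test I observed IO wait of up to 99.15% on the Proxmox summary page while my CPU load was around 1%.

Running the same test on the HDD mirror was much faster and yielded the following results: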

Code:

Run status group 0 (all jobs):

WRITE: bw=59.7MiB/s (62.6MB/s), 14.9MiB/s-14.9MiB/s (15.6MB/s-15.7MB/s), io=1024MiB (1074MB), run=17125-17162msec

Note: During this test IO wait topped out at 13% on the summary page.

Based on these results, it seems my SSDs are about 20x slower than my HDDs. Can this be true? Are they really that bad?
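To make the comparison concrete, here is a small sketch that works out the ratio from the two fio summary lines above. The function names are made up for illustration, and the cross-check assumes fio's group bandwidth is total IO divided by the longest job runtime, which is at least consistent with the numbers printed.

```python
KIB_PER_MIB = 1024

# Aggregate write bandwidth taken from the two fio summary lines above.
SSD_BW_KIB_S = 2734.0   # SSD mirror, reported in KiB/s
HDD_BW_MIB_S = 59.7     # HDD mirror, reported in MiB/s


def speedup(hdd_mib_s: float, ssd_kib_s: float) -> float:
    """How many times faster the HDD mirror was than the SSD mirror."""
    return hdd_mib_s * KIB_PER_MIB / ssd_kib_s


def aggregate_bw_kib_s(io_mib: float, slowest_run_msec: float) -> float:
    """Total IO divided by the slowest job's runtime, in KiB/s."""
    return io_mib * KIB_PER_MIB / (slowest_run_msec / 1000)


if __name__ == "__main__":
    print(f"HDD mirror vs SSD mirror: {speedup(HDD_BW_MIB_S, SSD_BW_KIB_S):.1f}x")
    # Cross-check against the reported SSD figure (1024 MiB in 383596 ms).
    print(f"SSD bandwidth recomputed: {aggregate_bw_kib_s(1024, 383596):.0f} KiB/s")
```

So the gap is a bit over 22x for this particular test, slightly worse than my rough 20x estimate.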

Any advice is very much appreciated!

Cheers,

Ralph

PS: Since this is my first post, I would also like to say a big thank you to the people developing Proxmox VE - amazing job!

PPS: The full output of the commands is attached in a separate file. If you need any more information, I'll be happy to provide whatever you need.