Hello Proxmox community!

Our company uses Proxmox Virtual Environment and Proxmox Backup Server, and we have been noticing high I/O delay on our nodes while VM backups are running.

I'll list the relevant specs here at the top before I get into the details, as I will be referencing these later in the post.

Main VM storage NAS:

* Motherboard: Supermicro H12SSL-CT

* OS: TrueNAS-13.0-U6.1

* CPU: AMD EPYC 7443P (2.9 GHz base clock, 1 socket, 24 cores, 2 threads per core)

* RAM: 460 GB DDR4 ECC

* Networking: Mellanox Technologies MT27800 Family [ConnectX-5] 40 Gbps

* Disks:

  * Boot drive: HP MK000480GWCEV 480 GB SSD

  * VM storage pool:

    * RAIDZ3 vdev:

      * 24x Seagate XS6400LE70084 6.4 TB HDD

    * SLOG, mirror:

      * 2x Samsung SSD 990 PRO 1 TB

Proxmox node A (part of a 5-node cluster, and the most heavily used node in terms of CPU):

* Motherboard: Supermicro H12DSi-NT6

* OS: Proxmox Virtual Environment 8.2.2

* CPU: AMD EPYC 7F32 8 core processor (3.70 GHz base clock, 2 sockets, 8 cores, 2 threads per core)

* RAM: 256 GB DDR4 ECC

PBS server (physical):

* Pre-built server: HPE ProLiant DL20 Gen10

* OS: Proxmox Backup Server 3.2-6

* CPU: Intel(R) Xeon(R) E-2236 CPU (3.40GHz base clock, 1 socket, 6 cores, 2 threads per core)

* RAM: 64 GB DDR4 ECC

* Disks:

  * OS: 1x Samsung SSD 860 PRO 256 GB

  * Datastore: 20 TB zvol set up over iSCSI on the RAIDZ3 vdev on the main VM storage NAS (see above specs)

At first we suspected a correlation between the I/O delay and a problem with one of our virtual machines: a Windows Server VM running Microsoft SQL Server would slow to a crawl during backups and need to be completely restarted. We've made a couple of changes since then. We first improved the networking to the original backup destination (a Synology NAS whose share was mounted in PBS over SMB, later changed to NFS), and then moved the datastore to another zvol on the main VM storage NAS, which is the current setup.

The main VM storage NAS has one pool with a separate zvol for each VM, and those zvols are shared out over iSCSI as separate LUNs. On the PVE side, we create an LVM storage on each shared LUN and store the VM disks inside it. All Windows VMs, including the SQL Server one, have SCSI disks set to write back caching, with discard, SSD emulation, and IO thread enabled. Async IO (aio) is also set to threads, because we were otherwise blocked from live migrating from local storage to LVM, per this commit: https://git.proxmox.com/?p=qemu-server.git;a=commit;h=8fbae1dc8f9041aaa1a1a021740a06d80e8415ed

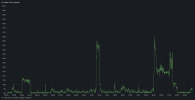

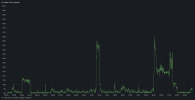

Attached below is a snapshot of what IO delay looks like during PBS backups for node A. It also goes up during backup jobs from our other backup system (Veeam). The PBS backups for this node start at 21:00 local time. The other 4 nodes are staggered in half hour increments after the previous node's backup. E.g. the start times are 21:00, 21:30, 22:00, 22:30, and 23:00 for the 5 nodes. At least for the night of 7/2/2024's backups, there is no overlap between node A's backups and the next node's backups.

This is a screenshot of the entire day on 7/2/2024

This is a screenshot of just the timeframe of the PBS backups on that same day:

My question: is this jump in I/O delay a concern? Is it a sign of a deeper-rooted issue or a bottleneck somewhere? Again, there are currently no visible problems when PBS backups run (e.g. no virtual machines hang), but I'd like to know whether this is worth investigating so we can prevent potential issues before they occur.
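In case it helps with comparing numbers outside the GUI, here is a minimal sketch for sampling CPU iowait directly on a node. It assumes that the I/O delay graph roughly corresponds to the iowait share of CPU time in `/proc/stat`; I have not confirmed that this is exactly how PVE computes it, and the function names are my own.

```python
import time


def parse_cpu_line(stat_text: str) -> list[int]:
    """Return user, nice, system, idle, iowait, irq, softirq, steal ticks."""
    for line in stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":  # aggregate line, not cpu0, cpu1, ...
            return [int(value) for value in fields[1:9]]
    raise ValueError("no aggregate 'cpu' line found")


def iowait_percent(before: list[int], after: list[int]) -> float:
    """Share of CPU time spent in iowait between two samples, in percent."""
    deltas = [b - a for a, b in zip(before, after)]
    total = sum(deltas)
    if total <= 0:
        return 0.0
    return 100.0 * deltas[4] / total  # index 4 is the iowait column


def sample_iowait(interval_s: float = 5.0, path: str = "/proc/stat") -> float:
    """Read the stat file twice, interval_s seconds apart, and compare."""
    with open(path) as stat_file:
        before = parse_cpu_line(stat_file.read())
    time.sleep(interval_s)
    with open(path) as stat_file:
        after = parse_cpu_line(stat_file.read())
    return iowait_percent(before, after)
```

Calling `sample_iowait()` in a loop during the backup window and again during a quiet period should give figures that can be set side by side with the graphs.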

Please let me know if there is any additional information that I can provide to assist.

Thanks in advance for the help!
