Hi everyone,

Happy new year

I have begun to see a disturbing trend on both of my Proxmox VE nodes: the M.2 disks are wearing out rather fast.

Both nodes are identical in terms of hardware and configuration.

Kernel: 6.2.16-12-pve

2 x Samsung SSD 980 Pro 2TB (only one in use on each node for now), and both are configured with ext4

The nodes were installed on 2023-09-09

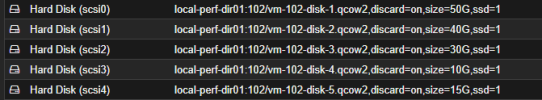

I will take the second node as the example (dk1pve02). The VMs on this node consume about 500GB in total, and a lot of them are static.

Meaning that they will of course receive some updates, but my best guess is that we are talking 2-3GB per VM per month.

When I look at the S.M.A.R.T. info in PVE, it states that the disk is at 2% wearout and that a total of 8.82TB has been written.

On 06-12-2023 (6 December) that number was 6.40TB, so more than 2.4TB has been written since.
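To put those numbers in perspective, here is a rough calculation of the write rate. It is only a sketch: it assumes the disk had close to 0TB written when the node was installed on 2023-09-09, and that 1TB = 1000GB as reported by S.M.A.R.T.

```python
from datetime import date

# Figures taken from the S.M.A.R.T. readings quoted above.
install_date = date(2023, 9, 9)
earlier_reading_date = date(2023, 12, 6)
earlier_tb = 6.40   # TB written as of 06-12-2023
latest_tb = 8.82    # TB written at the time of writing

# Assumption: the disk was (nearly) unused at install time.
days = (earlier_reading_date - install_date).days
avg_gb_per_day = earlier_tb * 1000 / days

# Data written between the two readings.
delta_tb = latest_tb - earlier_tb

print(f"Days from install to first reading: {days}")
print(f"Average write rate in that period: {avg_gb_per_day:.1f} GB/day")
print(f"Written since the first reading: {delta_tb:.2f} TB")
```

Even as a rough estimate, that is far more per day than VMs that only receive 2-3GB of updates per month should produce.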

The 500GB 850 Pro disk is from an ESXi setup that contained the same virtual machines, and over 4 years it reached 7% wearout.

I honestly can't figure out what is performing all these writes, but I am fairly sure it's not my virtual machines, given that they do almost nothing.

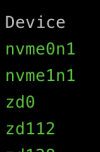

I have tried my best to find a solution myself, and have read all about not using ZFS on consumer disks (which I am not), etc.

How can I determine what is causing this load?
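One starting point I am considering is to sample the kernel's per-device write counters in `/proc/diskstats` over an interval, to at least confirm how much the host writes to each block device. This is a minimal sketch, not a finished tool: the function names are my own, and it relies on the documented layout in which the tenth field is sectors written, counted in 512-byte units.

```python
import time


def parse_sectors_written(diskstats_text):
    """Return {device_name: sectors_written} from /proc/diskstats content."""
    result = {}
    for line in diskstats_text.splitlines():
        fields = line.split()
        if len(fields) < 10:
            continue  # skip malformed or empty lines
        # fields[2] is the device name, fields[9] is sectors written
        result[fields[2]] = int(fields[9])
    return result


def written_bytes(before, after):
    """Bytes written per device between two snapshots (512-byte sectors)."""
    return {
        dev: (after[dev] - before[dev]) * 512
        for dev in after
        if dev in before
    }


def sample_written_bytes(interval_s=60, path="/proc/diskstats"):
    """Take two snapshots interval_s seconds apart and return the difference."""
    with open(path) as f:
        before = parse_sectors_written(f.read())
    time.sleep(interval_s)
    with open(path) as f:
        after = parse_sectors_written(f.read())
    return written_bytes(before, after)
```

Calling `sample_written_bytes(60)` on the node should give the bytes written per device during one minute. That only narrows it down to a device, though; the per-process counters in `/proc/<pid>/io` (`write_bytes`) would be the next place to look.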
