> OP doesn't use ZFS for VM storage, only the PVE OS boot disk is on ZFS.

That's correct, ZFS is for the OS only. All VMs are running on LVM-Thin.

High CPU IO during DD testing on VMs

- Thread starter en4ble



> 8 MB/s is a lot of writes for a system disk that isn't storing any guests. 177 IOPS shouldn't be a problem for consumer SSDs, even if those were sync writes.

@Dunuin "No cache" performs poorly with dd at this scale. Is there any other cache mode (besides "unsafe") that could improve write performance on the VM?

Yes, so basically what I wrote above about the cache filling up and the disks not being able to keep up with writing it out. With caching set to "none", ZFS will still do write caching, but only for the last 5 seconds before it is flushed, so data can't pile up in RAM to the point where the system has to wait on overwhelmed disks.

Yes, virtio SCSI single is the default and best practice.


> Poor performance comes from your non-datacenter drives. They lack sustained write capability. PVE's "unsafe" write cache masks this for bursts only; once the write cache has to be written down to disk while the guest keeps writing data, there is a bottleneck.

@_gabriel thank you for your reply. It's really hard for me to believe that 5 VMs with disk read/write throttled to 190/220 (burst) MB/s struggle on a WD SN850X at Gen4 (sustained write speeds rated at up to 6.6 GB/s) with a cache of 295 GB.

The dd test on those 5 VMs writes 1 GB of data per run, and some of the VMs fail the 180 MB/s benchmark minimum.

I wonder whether LXC containers would perform better (as they have direct access), but as far as I know you cannot throttle disk I/O on those.

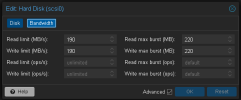

EDIT: Or could it be that my disk policy is incorrect (or could be improved)?


Quick update: I've changed the write LIMIT to 250 MB/s and doubled the burst (500 MB/s), still with the default (no cache). I can definitely see the improvement: during the dd test (which runs 3 times on each VM, by the way) the first run comes close to the 500 MB/s burst and the remaining 2 runs land in the 220-250 MB/s range.

That being said, I guess I don't understand the relationship between LIMIT and MAX BURST, because 190/220 was performing horribly before.

And with no cache, IO is nonexistent.
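To make the LIMIT vs. MAX BURST question a bit more concrete, here is a minimal sketch of a leaky-bucket throttle, which is the general idea QEMU describes for its disk throttling. It is a deliberately simplified model and not QEMU's actual implementation: the function names are made up for illustration, and the 1-second burst length is an assumption. In this model the bucket fills at the write rate, drains at the LIMIT rate, and a writer may run at the burst rate until the bucket is full.

```python
def burst_seconds(limit_mbps, burst_mbps, burst_length_s=1.0):
    """Seconds a continuous writer can stay at burst speed (simplified model).

    Assumption: bucket capacity = burst rate * burst length, and the bucket
    drains at the limit rate while it is being filled at the burst rate.
    """
    if burst_mbps <= limit_mbps:
        raise ValueError("burst must be greater than limit")
    capacity_mb = burst_mbps * burst_length_s
    net_fill_rate = burst_mbps - limit_mbps
    return capacity_mb / net_fill_rate


def write_seconds(total_mb, limit_mbps, burst_mbps, burst_length_s=1.0):
    """Time to write total_mb, starting with an empty bucket."""
    t_burst = burst_seconds(limit_mbps, burst_mbps, burst_length_s)
    burst_mb = burst_mbps * t_burst  # data that fits into the burst phase
    if total_mb <= burst_mb:
        return total_mb / burst_mbps
    # Remainder is written at the sustained limit.
    return t_burst + (total_mb - burst_mb) / limit_mbps


# The two policies from this thread, applied to a single 1 GB dd run.
for limit, burst in [(190, 220), (250, 500)]:
    t = write_seconds(1024, limit, burst)
    print(f"{limit}/{burst}: burst lasts {burst_seconds(limit, burst):.2f} s, "
          f"1 GB takes {t:.2f} s ({1024 / t:.0f} MB/s average)")
```

In this model the bucket only empties again while the disk is idle, so back-to-back dd runs after the first would mostly see the plain LIMIT. That is roughly in line with the 500 then 220-250 MB/s pattern above, but it is only a model and does not by itself explain why 190/220 behaved so badly.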


> @_gabriel thank you for your reply. It's really hard for me to believe that 5 VMs with disk read/write throttled to 190/220 (burst) MB/s struggle on a WD SN850X at Gen4 (sustained write speeds rated at up to 6.6 GB/s) with a cache of 295 GB.

The keyword here is "up to". 6.6 GB/s is what you get when writing to the DRAM cache for a few seconds. Once the DRAM cache is full, performance drops to SLC-cache performance. Once the SLC cache is full, it drops to TLC-NAND performance. At that point it's not unusual for such an SSD to handle no more than a few hundred MB/s (or, with smaller QLC-NAND SSDs, not even 100 MB/s). SSDs that are designed for bursts of writes rather than continuous writes will be very fast for a very short time and then suck.

> The keyword here is "up to". 6.6 GB/s is what you get when writing to the DRAM cache for a few seconds. Once the DRAM cache is full, performance drops to SLC-cache performance. Once the SLC cache is full, it drops to TLC-NAND performance. At that point it's not unusual for such an SSD to handle no more than a few hundred MB/s (or, with smaller QLC-NAND SSDs, not even 100 MB/s). SSDs that are designed for bursts of writes rather than continuous writes will be very fast for a very short time and then suck.

I get that, it's marketing BS, but we're talking about an almost 300 GB cache (The 1TB SN850X writes at over 6.4GBps for almost 46 seconds, showing a cache of around 295GB.), whereas we would write maybe 15 GB using dd (3x1 GB per VM). Either way, I believe there could also be some correlation with LIMIT vs. BURST in the disk policies, given the behavior when I changed the burst to 2x the LIMIT.
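As a quick sanity check on the figures quoted in this exchange, the arithmetic can be written out. All numbers come from the posts above; the variable names are just for illustration, and the calculation assumes the SSD cache is empty and fully available when the test starts, which cannot be verified from the host.

```python
# Figures quoted in the thread.
cache_rate_gb_s = 6.4   # write speed while the cache lasts
cache_seconds = 46      # how long that speed was sustained
vms = 5
dd_runs_per_vm = 3
gb_per_run = 1
limit_mb_s = 190        # per-VM write limit
burst_mb_s = 220        # per-VM write burst

# Cache size implied by "6.4 GB/s for almost 46 seconds".
implied_cache_gb = cache_rate_gb_s * cache_seconds

# Total data written by the whole dd test.
total_written_gb = vms * dd_runs_per_vm * gb_per_run

# Worst case: all VMs writing at the same time.
aggregate_limit_mb_s = vms * limit_mb_s
aggregate_burst_mb_s = vms * burst_mb_s

print(f"implied cache size: {implied_cache_gb:.1f} GB")
print(f"total dd writes:    {total_written_gb} GB "
      f"({total_written_gb / implied_cache_gb:.1%} of the cache)")
print(f"aggregate limit:    {aggregate_limit_mb_s} MB/s")
print(f"aggregate burst:    {aggregate_burst_mb_s} MB/s")
```

On paper the test writes only a small fraction of the advertised cache and stays well below the rated speed, which is why the throttle settings are worth a closer look.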


The problem is that you can't monitor the embedded SLC cache; you don't know when it's full or available.

That makes it hard to predict.

> The problem is that you can't monitor the embedded SLC cache; you don't know when it's full or available.
>
> That makes it hard to predict.

True :/

Just a thought: if you limit the IO bandwidth (below the actual limit of the hardware), would that not automatically increase IO delay, as more processes are waiting for IO to complete?
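A rough way to put a number on that idea: compare how long a write takes at the throttle limit with how long it would take at the speed the device could actually deliver. The difference is time the writer spends blocked on IO. This is only a back-of-the-envelope sketch; the function name is hypothetical, and the 1000 MB/s device speed is an assumed placeholder, not a measurement from this thread.

```python
def throttle_wait_seconds(size_mb, throttle_mb_s, device_mb_s):
    """Extra seconds a writer is blocked because of the throttle.

    Returns 0.0 if the throttle is not below what the device can do.
    """
    if throttle_mb_s >= device_mb_s:
        return 0.0
    return size_mb / throttle_mb_s - size_mb / device_mb_s


# One 1 GB dd run at the 190 MB/s limit, against an ASSUMED 1000 MB/s device.
size_mb = 1024
wait = throttle_wait_seconds(size_mb, throttle_mb_s=190, device_mb_s=1000)
print(f"extra time blocked on IO: {wait:.2f} s per 1 GB run")
```

Whether that waiting shows up as IO delay on the host or only inside the guest is not something this calculation can answer.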


> Just a thought: if you limit the IO bandwidth (below the actual limit of the hardware), would that not automatically increase IO delay, as more processes are waiting for IO to complete?

Very interesting, this could potentially explain the odd behavior.