Hello,

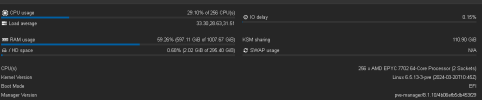

I'm hoping to get some insight on my situation. This is a new build. While benchmarking the VMs with a dd test, we see unhealthily high IO. I have a similar system without this behavior; the only difference is that this one uses ZFS (RAID1, dual WD Red) for the OS.

A little about the system:

- dual EPYC 7702

- OS on ZFS (RAID1) using two WD Red SSDs, dedicated and NOT shared with the VMs

- 80 VMs (2c/4t) distributed evenly across 15 NVMe drives (SN850X), so about 5-6 VMs per drive

- all VM drives run at Gen4 speed and use LVM-Thin (not ZFS)

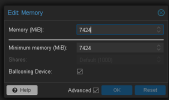

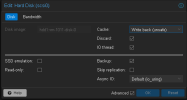

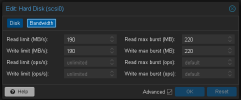

- each VM has a disk I/O throttle set to 250/300 (burst) with the "unsafe" policy
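
For what it's worth, here is a rough sketch of how the layout above works out per drive. It only uses the figures from the list; the throttle unit is not stated here, so the ceilings are in whatever unit the 250/300 limit uses, and the `distribute` helper is just an illustration of "evenly distributed", not how the VMs were actually placed.

```python
# Figures taken from the list above. The throttle unit is an unknown here,
# so every ceiling below is in the same unit as the 250/300 limit.
VMS = 80
DRIVES = 15
THROTTLE_SUSTAINED = 250
THROTTLE_BURST = 300


def distribute(vms: int, drives: int) -> list[int]:
    """Spread VMs as evenly as possible; return the VM count per drive."""
    base, extra = divmod(vms, drives)
    # The first `extra` drives take one more VM than the rest.
    return [base + 1 if i < extra else base for i in range(drives)]


per_drive = distribute(VMS, DRIVES)
busiest = max(per_drive)

print("VMs per drive:", per_drive)
print("busiest drive, sustained ceiling:", busiest * THROTTLE_SUSTAINED)
print("busiest drive, burst ceiling:", busiest * THROTTLE_BURST)
print("whole host, sustained ceiling:", VMS * THROTTLE_SUSTAINED)
```

With these numbers, five drives carry 6 VMs and ten carry 5, which matches the "about 5-6 VMs per drive" figure.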

On the chart we can see that once dd kicks in, the IO levels become concerning.

Apologies for my ignorance, but could ZFS on the OS drives be the culprit? If so, I'm confused as to why, since the dd test does not touch the OS drives, only the dedicated NVMe drives for the VMs.

Are there any other artifacts I could share to help with this issue?

Thank you in advance for any assistance!
