Hello, I've set up a ZFS RAID 1 with 2x4 SSDs, one Crucial and one WD, to store my VMs on. It's a 64 GB machine with an AMD R9, and I'm running into some issues.

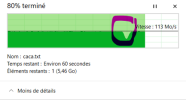

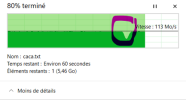

For testing I used a Samba share. When uploading a large file it works fine at first (here at 1 GB/s), but after some time the transfer hangs and the bandwidth drops for 30-60 s, then it goes back to 1 GB/s, as shown in the screenshot. While the drop happens, every VM freezes and the IO delay is around 50%. I've read up on the ARC cache and limited it to 12 GB of RAM. That fixed the speed but not the IO delay/hang issue.
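For reference, an ARC limit like this is normally given in bytes through the OpenZFS `zfs_arc_max` module parameter. Below is a minimal sketch of the conversion, assuming "12 GB" means 12 GiB and that the limit is set through a modprobe options line. The helper name `arc_max_option` is hypothetical and only there for illustration.

```python
GIB = 1024 ** 3  # bytes in one GiB


def arc_max_option(gib: int) -> str:
    """Return a modprobe options line capping the ZFS ARC at `gib` GiB.

    Hypothetical helper: it only formats the line, it does not apply it.
    """
    if gib <= 0:
        raise ValueError("ARC size must be positive")
    return f"options zfs zfs_arc_max={gib * GIB}"


if __name__ == "__main__":
    # Assumption: 12 GiB, matching the 12 GB limit mentioned above.
    print(arc_max_option(12))
```

A line like this usually lives in a file such as `/etc/modprobe.d/zfs.conf` and takes effect when the module is loaded again. The current value can typically be read from `/sys/module/zfs/parameters/zfs_arc_max`, which is a quick way to confirm the limit is actually applied.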
