Hi,

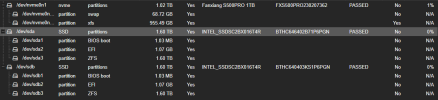

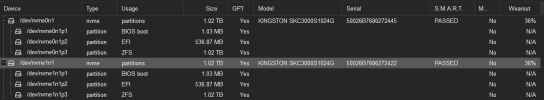

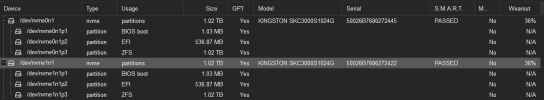

I noticed by chance today that my NVMe SSDs have extremely high wearout. The two disks have been in use for a year and are already at 36%.

I have already searched the forum for similar problems, but couldn't find anything specific that could help me.

Can someone help me get to the bottom of this and slow down the wearout?
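To put the numbers in perspective, here is a rough linear extrapolation of the figures above (36% after about one year). It is only a sketch: it assumes the wear rate stays constant, which may not hold, and the function name `project_lifetime` is just something I made up for illustration.

```python
def project_lifetime(wear_pct: float, years_in_use: float) -> dict:
    """Linearly extrapolate SSD wearout (assumption: constant wear rate)."""
    if wear_pct <= 0 or years_in_use <= 0:
        raise ValueError("wear_pct and years_in_use must be positive")
    rate_per_year = wear_pct / years_in_use       # percent of rated life used per year
    years_to_100 = 100.0 / rate_per_year          # total years until 100% wearout
    years_remaining = max(years_to_100 - years_in_use, 0.0)
    return {
        "rate_per_year": rate_per_year,
        "years_to_100": years_to_100,
        "years_remaining": years_remaining,
    }


if __name__ == "__main__":
    # Figures from this post: 36% wearout after roughly one year.
    result = project_lifetime(wear_pct=36.0, years_in_use=1.0)
    print(f"Wear rate:        {result['rate_per_year']:.1f}% per year")
    print(f"Projected total:  {result['years_to_100']:.1f} years")
    print(f"Projected remain: {result['years_remaining']:.1f} years")
```

If that estimate is anywhere near right, the disks would reach 100% in under three years in total, which is why I would like to find the cause.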

Code:

    root@proxmox:~# zpool get all
    NAME   PROPERTY                       VALUE                 SOURCE
    rpool  size                           952G                  -
    rpool  capacity                       48%                   -
    rpool  altroot                        -                     default
    rpool  health                         ONLINE                -
    rpool  guid                           5390239608252388090   -
    rpool  version                        -                     default
    rpool  bootfs                         rpool/ROOT/pve-1      local
    rpool  delegation                     on                    default
    rpool  autoreplace                    off                   default
    rpool  cachefile                      -                     default
    rpool  failmode                       wait                  default
    rpool  listsnapshots                  off                   default
    rpool  autoexpand                     off                   default
    rpool  dedupratio                     1.00x                 -
    rpool  free                           494G                  -
    rpool  allocated                      458G                  -
    rpool  readonly                       off                   -
    rpool  ashift                         12                    local
    rpool  comment                        -                     default
    rpool  expandsize                     -                     -
    rpool  freeing                        0                     -
    rpool  fragmentation                  43%                   -
    rpool  leaked                         0                     -
    rpool  multihost                      off                   default
    rpool  checkpoint                     -                     -
    rpool  load_guid                      14967033024946245314  -
    rpool  autotrim                       off                   default
    rpool  compatibility                  off                   default
    rpool  bcloneused                     0                     -
    rpool  bclonesaved                    0                     -
    rpool  bcloneratio                    1.00x                 -
    rpool  feature@async_destroy          enabled               local
    rpool  feature@empty_bpobj            active                local
    rpool  feature@lz4_compress           active                local
    rpool  feature@multi_vdev_crash_dump  enabled               local
    rpool  feature@spacemap_histogram     active                local
    rpool  feature@enabled_txg            active                local
    rpool  feature@hole_birth             active                local
    rpool  feature@extensible_dataset     active                local
    rpool  feature@embedded_data          active                local
    rpool  feature@bookmarks              enabled               local
    rpool  feature@filesystem_limits      enabled               local
    rpool  feature@large_blocks           enabled               local
    rpool  feature@large_dnode            enabled               local
    rpool  feature@sha512                 enabled               local
    rpool  feature@skein                  enabled               local
    rpool  feature@edonr                  enabled               local
    rpool  feature@userobj_accounting     active                local
    rpool  feature@encryption             enabled               local
    rpool  feature@project_quota          active                local
    rpool  feature@device_removal         enabled               local
    rpool  feature@obsolete_counts        enabled               local
    rpool  feature@zpool_checkpoint       enabled               local
    rpool  feature@spacemap_v2            active                local
    rpool  feature@allocation_classes     enabled               local
    rpool  feature@resilver_defer         enabled               local
    rpool  feature@bookmark_v2            enabled               local
    rpool  feature@redaction_bookmarks    enabled               local
    rpool  feature@redacted_datasets      enabled               local
    rpool  feature@bookmark_written       enabled               local
    rpool  feature@log_spacemap           active                local
    rpool  feature@livelist               enabled               local
    rpool  feature@device_rebuild         enabled               local
    rpool  feature@zstd_compress          enabled               local
    rpool  feature@draid                  enabled               local
    rpool  feature@zilsaxattr             disabled              local
    rpool  feature@head_errlog            disabled              local
    rpool  feature@blake3                 disabled              local
    rpool  feature@block_cloning          disabled              local
    rpool  feature@vdev_zaps_v2           disabled              local
