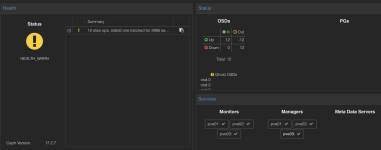

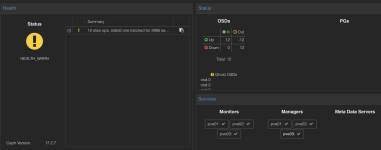

I tried to move my ceph network to another subnet and now all osd's are not picking up the new network and staying down. I fear i may have hosed ceph, but as a learning experience, would like to see if i can recover them. this is what they look like in the cluster:

These are the contents of the new ceph.conf:

Code:

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

fsid = b6005ee3-c72c-4fad-8c48-3b4be1a3cb07

mon_host = 10.10.99.6 10.10.99.7 10.10.99.8

mon_allow_pool_delete = true

ms_bind_ipv4 = true

ms_bind_ipv6 = false

osd_pool_default_min_size = 2

osd_pool_default_size = 3

public_network = 10.10.99.0/24

[client]

keyring = /etc/pve/priv/$cluster.$name.keyring

[client.crash]

keyring = /etc/pve/ceph/$cluster.$name.keyring

[mon.pve01]

public_addr = 10.10.99.6

[mon.pve02]

public_addr = 10.10.99.7

[mon.pve03]

public_addr = 10.10.99.8

If I run `ceph mon dump`, it looks like the monitors are healthy and picked up the new addresses:

Code:

epoch 2

fsid b6005ee3-c72c-4fad-8c48-3b4be1a3cb07

last_changed 2025-02-22T11:16:32.323477-0500

created 2025-02-22T11:00:06.278940-0500

min_mon_release 17 (quincy)

election_strategy: 1

0: [v2:10.10.99.6:3300/0,v1:10.10.99.6:6789/0] mon.pve01

1: [v2:10.10.99.7:3300/0,v1:10.10.99.7:6789/0] mon.pve02

2: [v2:10.10.99.8:3300/0,v1:10.10.99.8:6789/0] mon.pve03

dumped monmap epoch 2

It looks like the Ceph quorum (`ceph quorum_status`) is OK:

Code:

{

"election_epoch": 50,

"quorum": [

0,

1,

2

],

"quorum_names": [

"pve01",

"pve02",

"pve03"

],

"quorum_leader_name": "pve01",

"quorum_age": 536,

"features": {

"quorum_con": "4540138320759226367",

"quorum_mon": [

"kraken",

"luminous",

"mimic",

"osdmap-prune",

"nautilus",

"octopus",

"pacific",

"elector-pinging",

"quincy"

]

},

"monmap": {

"epoch": 2,

"fsid": "b6005ee3-c72c-4fad-8c48-3b4be1a3cb07",

"modified": "2025-02-22T16:16:32.323477Z",

"created": "2025-02-22T16:00:06.278940Z",

"min_mon_release": 17,

"min_mon_release_name": "quincy",

"election_strategy": 1,

"disallowed_leaders: ": "",

"stretch_mode": false,

"tiebreaker_mon": "",

"removed_ranks: ": "",

"features": {

"persistent": [

"kraken",

"luminous",

"mimic",

"osdmap-prune",

"nautilus",

"octopus",

"pacific",

"elector-pinging",

"quincy"

],

"optional": []

},

"mons": [

{

"rank": 0,

"name": "pve01",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "10.10.99.6:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "10.10.99.6:6789",

"nonce": 0

}

]

},

"addr": "10.10.99.6:6789/0",

"public_addr": "10.10.99.6:6789/0",

"priority": 0,

"weight": 0,

"crush_location": "{}"

},

{

"rank": 1,

"name": "pve02",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "10.10.99.7:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "10.10.99.7:6789",

"nonce": 0

}

]

},

"addr": "10.10.99.7:6789/0",

"public_addr": "10.10.99.7:6789/0",

"priority": 0,

"weight": 0,

"crush_location": "{}"

},

{

"rank": 2,

"name": "pve03",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "10.10.99.8:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "10.10.99.8:6789",

"nonce": 0

}

]

},

"addr": "10.10.99.8:6789/0",

"public_addr": "10.10.99.8:6789/0",

"priority": 0,

"weight": 0,

"crush_location": "{}"

}

]

}

}

But when I look at the OSDs with `ceph osd tree`, they are all down:

Code:

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 10.47949 root default

-4 3.49316 host pve01

8 ssd 0.87329 osd.8 down 1.00000 1.00000

9 ssd 0.87329 osd.9 down 1.00000 1.00000

10 ssd 0.87329 osd.10 down 1.00000 1.00000

11 ssd 0.87329 osd.11 down 1.00000 1.00000

-3 3.49316 host pve02

0 ssd 0.87329 osd.0 down 1.00000 1.00000

4 ssd 0.87329 osd.4 down 1.00000 1.00000

12 ssd 0.87329 osd.12 down 1.00000 1.00000

13 ssd 0.87329 osd.13 down 1.00000 1.00000

-2 3.49316 host pve03

2 ssd 0.87329 osd.2 down 1.00000 1.00000

3 ssd 0.87329 osd.3 down 1.00000 1.00000

6 ssd 0.87329 osd.6 down 1.00000 1.00000

7 ssd 0.87329 osd.7 down 1.00000 1.00000

If I try to look into one of the OSDs, it looks like they are not picking up the network:

Code:

{

"osd": 8,

"addrs": {

"addrvec": []

},

"osd_fsid": "57895705-d901-400b-a681-61077859a5ed",

"crush_location": {

"host": "pve01",

"root": "default"

}

}

In the journal I don't see much:

Code:

Feb 23 10:13:18 pve01 systemd[1]: ceph-osd@8.service: Deactivated successfully.

Feb 23 10:13:18 pve01 systemd[1]: Stopped ceph-osd@8.service - Ceph object storage daemon osd.8.

Feb 23 10:13:18 pve01 systemd[1]: ceph-osd@8.service: Consumed 2.353s CPU time.

Feb 23 10:13:18 pve01 systemd[1]: Starting ceph-osd@8.service - Ceph object storage daemon osd.8...

Feb 23 10:13:18 pve01 systemd[1]: Started ceph-osd@8.service - Ceph object storage daemon osd.8.

Feb 23 10:13:18 pve01 ceph-osd[2487834]: 2025-02-23T10:13:18.757-0500 744e2f7b13c0 -1 Falling back to public interface

Feb 23 10:13:22 pve01 ceph-osd[2487834]: 2025-02-23T10:13:22.265-0500 744e2f7b13c0 -1 osd.8 9061 log_to_monitors true

Feb 23 10:13:23 pve01 ceph-osd[2487834]: 2025-02-23T10:13:23.256-0500 744e210006c0 -1 osd.8 9061 set_numa_affinity unable to identify public interface '' numa node: (2) No such file or directory

I've tried setting the address via `ceph config set osd.8 public_addr 10.10.99.6:0`, and this is what the config (`ceph config dump`) looks like now:

Code:

WHO MASK LEVEL OPTION VALUE RO

mon advanced auth_allow_insecure_global_id_reclaim false

osd advanced public_network 10.10.99.0/24 *

osd advanced public_network_interface vlan99 *

osd.0 basic osd_mclock_max_capacity_iops_ssd 55377.221418

osd.0 advanced public_bind_addr v2:10.10.99.7:0/0 *

osd.10 basic osd_mclock_max_capacity_iops_ssd 42485.869165

osd.10 basic public_addr v2:10.10.99.6:0/0 *

osd.11 basic osd_mclock_max_capacity_iops_ssd 43151.652267

osd.11 basic public_addr v2:10.10.99.6:0/0 *

osd.12 basic osd_mclock_max_capacity_iops_ssd 62003.970817

osd.12 advanced public_bind_addr v2:10.10.99.7:0/0 *

osd.13 basic osd_mclock_max_capacity_iops_ssd 58325.014080

osd.13 advanced public_bind_addr v2:10.10.99.7:0/0 *

osd.2 basic osd_mclock_max_capacity_iops_ssd 67670.304502

osd.2 advanced public_bind_addr v2:10.10.99.8:0/0 *

osd.3 basic osd_mclock_max_capacity_iops_ssd 53038.204249

osd.3 advanced public_bind_addr v2:10.10.99.8:0/0 *

osd.4 basic osd_mclock_max_capacity_iops_ssd 63817.463674

osd.4 advanced public_bind_addr v2:10.10.99.7:0/0 *

osd.6 basic osd_mclock_max_capacity_iops_ssd 60684.431791

osd.6 advanced public_bind_addr v2:10.10.99.8:0/0 *

osd.7 basic osd_mclock_max_capacity_iops_ssd 52488.209705

osd.7 advanced public_bind_addr v2:10.10.99.8:0/0 *

osd.8 basic osd_mclock_max_capacity_iops_ssd 44762.641171

osd.8 basic public_addr v2:10.10.99.6:0/0 *

osd.8 advanced public_bind_addr v2:10.10.99.6:0/0 *

osd.9 basic osd_mclock_max_capacity_iops_ssd 42679.547164

osd.9 basic public_addr v2:10.10.99.6:0/0 *

Here is the dump of the OSDs:

Code:

osd.0 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new 7e86ab26-fabf-44cd-a88c-8112c91b12f8

osd.2 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new dc3cd8d5-d094-429c-86da-080aa14d3091

osd.3 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new a4e08ff6-9a45-4e58-9435-bec4acd78b7a

osd.4 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new b9c80f90-f4e8-4d4b-8beb-c1fe95292786

osd.6 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new bb269c34-5b25-453f-b7a1-98b271d1e961

osd.7 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new dea54476-b602-4d1e-bafb-f6d13a35a69d

osd.8 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new 57895705-d901-400b-a681-61077859a5ed

osd.9 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new fdb7aece-4bfb-4803-926a-0a53cbe8fc14

osd.10 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new 9629d126-23c4-4b1a-9451-8d6853851e0f

osd.11 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new cc6fc173-0e28-4c00-9540-0a43e39ccf65

osd.12 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new 278152ba-c85c-418c-b935-b50cd5c200d0

osd.13 down in weight 1 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) exists,new 2b6245b0-657d-46d5-b327-f53b0a86f073

I'm not sure what else to try. I am fairly certain it is something relatively simple that I am missing, but to be honest I've been looking at this for several hours and it seems that I am going around in circles. I do have backups if I need to reset the whole cluster, but I think it is recoverable and it would be a great learning experience for me.
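One thing that stands out in the `ceph config dump` output above is that the per-OSD address overrides are not uniform: some OSDs carry `public_addr`, others `public_bind_addr`, and osd.8 has both. To make that easier to see, here is a minimal Python sketch that groups the address options per OSD. The function name `addr_options_by_daemon` is made up for illustration, the sample rows are copied from the dump above, and the parsing assumes the MASK column is empty (as it is in that dump), so treat it as a rough aid rather than a proper parser.

```python
from collections import defaultdict

# Address-related options that appear in the dump above.
ADDR_OPTIONS = {"public_addr", "public_bind_addr"}


def addr_options_by_daemon(dump_text):
    """Map each 'osd.N' entry to the address options set on it.

    Assumes whitespace-separated rows of the form
    WHO LEVEL OPTION VALUE [RO], i.e. an empty MASK column.
    """
    found = defaultdict(dict)
    for line in dump_text.splitlines():
        fields = line.split()
        # Skip the header, blank lines, and section-wide rows such as 'osd' or 'mon'.
        if len(fields) < 4 or not fields[0].startswith("osd."):
            continue
        who, option, value = fields[0], fields[2], fields[3]
        if option in ADDR_OPTIONS:
            found[who][option] = value
    return dict(found)


# A few rows copied from the `ceph config dump` output in this post.
sample = """\
osd.0 advanced public_bind_addr v2:10.10.99.7:0/0 *
osd.8 basic osd_mclock_max_capacity_iops_ssd 44762.641171
osd.8 basic public_addr v2:10.10.99.6:0/0 *
osd.8 advanced public_bind_addr v2:10.10.99.6:0/0 *
osd.9 basic public_addr v2:10.10.99.6:0/0 *
"""

for who, opts in sorted(addr_options_by_daemon(sample).items()):
    print(who, sorted(opts))
```

Feeding it the full dump text instead of `sample` would list every OSD with the address options currently set on it, which shows at a glance which overrides were added by hand during troubleshooting.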
