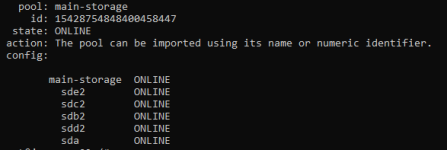

I am running an Ubuntu 18.04 VM whose install disk is on my PVE server's local storage. I am trying to pass through the hard drives on the PVE server (not the install SSD) so the VM has direct access to the storage and can manage the disks with ZFS. The disks actually came from a FreeNAS pool, but that's not the issue. From the PVE server running Buster, I can import the ZFS pool without a problem:

After I export the pool from the PVE server and try to import it from the Ubuntu VM, I see this:

I believe the probable cause is that the Ubuntu VM is not seeing all the partitions. Here is the `lsblk` output from the PVE server (notice that all the 4TB disks have 2 partitions from ZFS):

Now notice the missing partitions when I run the same command in the VM:

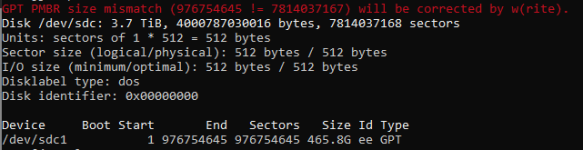

I also ran `fdisk -l /dev/sdc` to rule out `lsblk` misreporting, but I get this:
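One thing that might be worth checking (an assumption on my part, not something I have confirmed here): FreeNAS normally writes a GPT partition table, and GPT keeps its header at LBA 1, starting with the 8-byte signature `EFI PART`. If the VM presents the disk with a different logical sector size than the one the table was written with, `fdisk` and `lsblk` would look for the header in the wrong place and report no partitions. The sketch below reads the disk at the 512-byte and 4096-byte offsets to see where the header actually is, and reads the sector size the kernel reports from `/sys/block/<dev>/queue/logical_block_size`. The function names are hypothetical helpers, and reading a raw device needs root.

```python
import os

# GPT stores its header at LBA 1, beginning with this 8-byte signature.
# The byte offset of LBA 1 depends on the disk's logical sector size.
GPT_SIGNATURE = b"EFI PART"
CANDIDATE_SECTOR_SIZES = (512, 4096)


def find_gpt_sector_size(path):
    """Return the sector size at which a GPT header is found, or None.

    `path` can be a block device such as /dev/sdc (needs root) or a disk image.
    """
    with open(path, "rb") as dev:
        for sector_size in CANDIDATE_SECTOR_SIZES:
            dev.seek(sector_size)  # start of LBA 1 for this sector size
            if dev.read(len(GPT_SIGNATURE)) == GPT_SIGNATURE:
                return sector_size
    return None


def logical_sector_size(dev_name, sys_block="/sys/block"):
    """Return the logical sector size the kernel reports for a disk like "sdc"."""
    path = os.path.join(sys_block, dev_name, "queue", "logical_block_size")
    with open(path) as f:
        return int(f.read().strip())
```

Running `find_gpt_sector_size("/dev/sdc")` and `logical_sector_size("sdc")` on both the PVE host and inside the VM would show whether the two disagree. If the header is found at 4096 but the VM reports 512 (or the reverse), that mismatch could explain the empty partition list; if they agree, the cause is likely elsewhere.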

Does anyone know how to fix this and get the VM to see the partitions on those two disks so I can import the ZFS pool?

*Edit: I am also aware that I should add the disks by ID, as described here: https://pve.proxmox.com/wiki/Physical_disk_to_kvm

I have done this and triple-checked that the correct IDs were passed through (whole disks, not just single partitions).
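A quick way to double-check that only whole disks were passed through: udev names partition links in `/dev/disk/by-id` with a `-partN` suffix, so filtering those out and resolving the remaining links shows which kernel device (`sdc`, `sdd`, ...) each whole-disk ID points to. This is a minimal sketch; the function name is hypothetical, and the result is meant to be compared by hand against the paths in the VM's config (on Proxmox, `/etc/pve/qemu-server/<vmid>.conf`).

```python
import os
import re

# udev adds a "-partN" suffix to by-id links that point at partitions.
PART_SUFFIX = re.compile(r"-part\d+$")


def whole_disk_ids(by_id_dir="/dev/disk/by-id"):
    """Map each whole-disk by-id link name to the kernel device it resolves to."""
    result = {}
    for name in sorted(os.listdir(by_id_dir)):
        if PART_SUFFIX.search(name):
            continue  # skip partition links such as ...-part1, ...-part2
        target = os.path.realpath(os.path.join(by_id_dir, name))
        result[name] = os.path.basename(target)
    return result
```

Any by-id path in the VM config that ends in `-partN` would mean a partition, not the whole disk, was handed to the guest.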