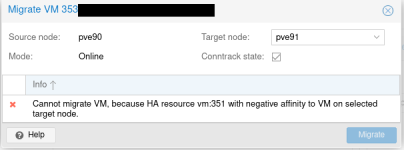

HA node affinity offers the strict option to specify whether a resource requires the condition or (when not strict) merely prefers it. The new HA resource affinity rules, especially negative ones, should offer it as well.

With an example load of 3 VMs running the same application, there is a preference (not a requirement!) for them to be distributed across 3 nodes (in a 3-node cluster). With a strict policy (the only one implemented), placing a node in maintenance mode still leaves the example VM on it. Node changes/reboots then require disabling and re-enabling the affinity rule, running 4 nodes, or manually migrating the resource. I found that manually migrating does nothing (the HA task reports OK, but the actual migration does not happen) until the affinity rule is disabled.
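To make the difference concrete, here is a minimal Python sketch of how a strict versus a non-strict negative affinity rule could decide placement. It is only an illustration of the requested behavior, not how the HA manager is actually implemented; `pick_node` and its parameters are hypothetical names made up for this example.

```python
def pick_node(candidates, occupied, strict):
    """Pick a target node under a negative (keep-apart) affinity rule.

    candidates: nodes currently available (e.g. not in maintenance)
    occupied:   nodes already running another member of the group
    strict:     True = the rule is a requirement, False = a preference
    Hypothetical helper, not part of any real HA API.
    """
    # Prefer a node that hosts no other member of the group.
    free = [node for node in candidates if node not in occupied]
    if free:
        return free[0]
    if strict:
        # No node satisfies the rule, so the resource cannot be moved.
        return None
    # Non-strict: fall back to any available node, sharing it if needed.
    return candidates[0] if candidates else None


# 3-node cluster, node1 goes into maintenance, node2 and node3
# each already run one VM of the group.
available = ["node2", "node3"]
taken = {"node2", "node3"}

print(pick_node(available, taken, strict=True))
print(pick_node(available, taken, strict=False))
```

With the strict rule there is no valid target, which matches the VM staying on the node in maintenance. With the non-strict rule the VM would temporarily share a node until the third node is back.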

An additional feature request: distribute by resource count within a group. For example, 6 VMs on 3 nodes would normally be placed 2 VMs per node.
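A rough sketch of what distributing by count could look like, assuming a simple "fewest group members first" policy. The function name `spread_by_count` and the VM/node names are made up for illustration; a real scheduler would also have to consider memory, CPU and existing placements.

```python
from collections import Counter


def spread_by_count(resources, nodes):
    """Assign each resource to the node holding the fewest group members.

    Hypothetical helper: returns a dict mapping resource -> node.
    Ties are resolved in the order the nodes are listed.
    """
    counts = {node: 0 for node in nodes}
    placement = {}
    for res in resources:
        # Choose the node that currently has the fewest members.
        target = min(nodes, key=lambda node: counts[node])
        placement[res] = target
        counts[target] += 1
    return placement


vms = ["vm:101", "vm:102", "vm:103", "vm:104", "vm:105", "vm:106"]
nodes = ["node1", "node2", "node3"]

placement = spread_by_count(vms, nodes)
per_node = Counter(placement.values())
print(dict(per_node))
```

For the 6 VMs on 3 nodes case this ends up with 2 VMs on each node. With one node removed from the list, the same call would place 3 VMs on each of the remaining two.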

Thank you for the cattle project, I've been able to reduce my custom tooling scripts for everyday tasks one step at a time.

