Something went terribly wrong last night.

Apparently one host lost connectivity (because of the backup) and HA decided to move the LXC container to the first host in the Datacenter list, which does not have the same storage.

The entire LXC container just went poof into thin air.

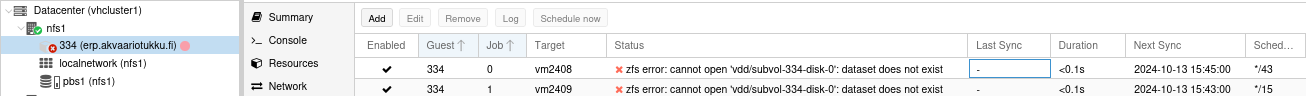

This is very odd, because HA was configured to move the container to host vm2409, and nfs1 is not even configured in HA.
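
One thing worth checking, offered as a guess rather than a diagnosis, is whether the container's HA group is marked `restricted`. According to the Proxmox VE HA documentation, resources in an unrestricted group may run on any cluster node once all group members are offline, while resources in a restricted group are left stopped instead. That would only explain the move to nfs1 if the group members were considered unavailable at that moment. Below is a minimal sketch of a restricted group in `/etc/pve/ha/groups.cfg`. It assumes `vm2407` and `vm2409` are the nodes that carry the `vdd` storage, and the group name `vdd-nodes` is hypothetical:

```
group: vdd-nodes
	comment hypothetical group: only nodes that have the vdd storage
	nodes vm2407,vm2409
	restricted 1
```

The container's entry in `/etc/pve/ha/resources.cfg` would then need `group vdd-nodes`. The same can be done with `ha-manager groupadd vdd-nodes --nodes vm2407,vm2409 --restricted 1` followed by `ha-manager set ct:<vmid> --group vdd-nodes`. The trade-off is that if both nodes are down, the container stays stopped rather than being sent to a node that cannot host its disk.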

At the same time, another Proxmox host (vm2408), which used to be in the HA group, got messed up.

That is the correct IP, but we do not use SHA.

I have not yet had time to look into that.

Fortunately, we have PBS up and running.

```
Oct 13 00:00:17 TASK ERROR: could not activate storage 'pbs1': pbs1: error fetching datastores - 500 Can't connect to backup.ic4.eu:8007 (SSL connect attempt failed error:0A00010B:SSL routines::wrong version number)
Oct 13 02:20:40 vm2407 pvescheduler[220229]: command 'zfs destroy vdd/subvol-334-disk-0@__replicate_334-1_1728774916__' failed: got timeout
Oct 13 02:21:06 vm2407 pvescheduler[220229]: 334-1: got unexpected replication job error - command 'zfs snapshot vdd/subvol-334-disk-0@__replicate_334-1_1728775216__' failed: got timeout

# This is so weird... vm2405 was removed from the cluster several months ago!!!
Oct 13 02:21:06 vm2407 postfix/cleanup[222662]: B914861AFD: message-id=<20241012232106.B914861AFD@vm2405.ic4.eu>
Oct 13 02:21:07 vm2407 postfix/qmgr[1265]: B914861AFD: from=<root@vm2405.ic4.eu>, size=1500, nrcpt=1 (queue active)
Oct 13 02:43:52 vm2407 pvescheduler[290076]: 334-0: got unexpected replication job error - command 'zfs snapshot vdd/subvol-334-disk-0@__replicate_334-0_1728776597__' failed: got timeout
Oct 13 02:59:10 vm2407 pvescheduler[331694]: 150-0: got unexpected replication job error - command 'zfs snapshot vdd/subvol-150-disk-0@__replicate_150-0_1728777439__' failed: got timeout

# Here the HA containers are destroyed...
Oct 13 03:02:15 vm2407 pvescheduler[331694]: command 'zfs destroy vdd/subvol-334-disk-0@__replicate_334-0_1728772993__' failed: got timeout
Oct 13 03:04:18 vm2407 pvescheduler[358114]: command 'zfs destroy vdd/subvol-150-disk-0@__replicate_150-0_1728777439__' failed: got timeout
Oct 13 03:04:56 vm2407 pvescheduler[358114]: 150-0: got unexpected replication job error - command 'zfs snapshot vdd/subvol-150-disk-0@__replicate_150-0_1728777799__' failed: got timeout
Oct 13 03:16:21 vm2407 kernel: INFO: task txg_sync:695 blocked for more than 122 seconds.
Oct 13 03:16:22 vm2407 kernel: Tainted: P O 6.8.12-2-pve #1
Oct 13 03:16:22 vm2407 kernel: "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
Oct 13 03:16:22 vm2407 kernel: task:txg_sync state:D stack:0 pid:695 tgid:695 ppid:2 flags:0x00004000
Oct 13 03:16:22 vm2407 kernel: Call Trace:
Oct 13 03:16:22 vm2407 kernel: <TASK>
Oct 13 03:16:22 vm2407 kernel: __schedule+0x401/0x15e0
Oct 13 03:16:22 vm2407 kernel: schedule+0x33/0x110
Oct 13 03:16:22 vm2407 kernel: schedule_timeout+0x95/0x170
Oct 13 03:16:22 vm2407 kernel: ? __pfx_process_timeout+0x10/0x10
Oct 13 03:16:22 vm2407 kernel: io_schedule_timeout+0x51/0x80

task started by HA resource agent
2024-10-13 03:42:16 ERROR: migration aborted (duration 00:00:00): storage 'vdd' is not available on node 'nfs1'
TASK ERROR: migration aborted
```

On vm2408, all LXC container consoles show something like this:

```
The authenticity of host '2a00:1190:c003:ffff::2408 (2a00:1190:c003:ffff::2408)' can't be established.
ED25519 key fingerprint is SHA256:WjyDBYlxd75QuFqWgXXHmwQozlE3P4S4u6trcxm+0UQ.
This key is not known by any other names.
Are you sure you want to continue connecting (yes/no/[fingerprint])?
```
