Hello!

I've been running into a wall with passing through a GPU to my unprivileged LXC container for Jellyfin. I've followed numerous guides, and most recently this great guide on Reddit:

Proxmox GPU passthrough for Jellyfin LXC

Per u/thenickdude's comments, I skipped everything IOMMU related and started at the step:

After following the instructions, I get the following error when running

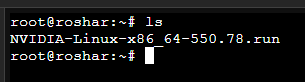

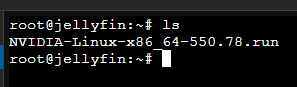

I have ensured I've got the same driver version installed in both my host and my container:

Here is the output of various files included in the installation guide:

On Proxmox Host

On Proxmox Host

On Proxmox Host

On Proxmox Host

I've tried running through the entire process multiple times on fresh installs of Proxmox, and always get a similar result.

If it matters at all, here are some basic hardware specs:

Any ideas what might be going on, or what I could check? Thank you so much in advance!

I've been running into a wall with passing through a GPU to my unprivileged LXC container for Jellyfin. I've followed numerous guides, and most recently this great guide on Reddit:

Proxmox GPU passthrough for Jellyfin LXC

Per u/thenickdude's comments, I skipped everything IOMMU related and started at the step:

> Add non-free, non-free-firmware and the pve source to the source file.

After following the instructions, I get the following error when running `nvidia-smi` in my container:

```
NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.
```

I have ensured I've got the same driver version installed in both my host and my container:

Here is the output of various files included in the installation guide:

On Proxmox Host

`/etc/apt/sources.list`:

```
deb http://ftp.de.debian.org/debian bookworm main contrib non-free non-free-firmware
deb http://ftp.de.debian.org/debian bookworm-updates main contrib non-free non-free-firmware

# security updates
deb http://security.debian.org bookworm-security main contrib non-free non-free-firmware

deb http://download.proxmox.com/debian/pve bookworm pve-no-subscription
```

On Proxmox Host

`nvidia-smi`:

```
Sat Apr 27 09:19:44 2024
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 550.78                 Driver Version: 550.78         CUDA Version: 12.4     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce GTX 1070        Off |   00000000:09:00.0 Off |                  N/A |
|  0%   52C    P5             21W /  230W |       0MiB /   8192MiB |      2%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|  No running processes found                                                             |
+-----------------------------------------------------------------------------------------+
```

On Proxmox Host

`ls -l /dev/nvidia*`:

```
crw-rw-rw- 1 root root 195, 0 Apr 27 09:19 /dev/nvidia0
crw-rw-rw- 1 root root 195, 255 Apr 27 09:19 /dev/nvidiactl
crw-rw-rw- 1 root root 234, 0 Apr 27 09:19 /dev/nvidia-uvm
crw-rw-rw- 1 root root 234, 1 Apr 27 09:19 /dev/nvidia-uvm-tools

/dev/nvidia-caps:
total 0
cr-------- 1 root root 237, 1 Apr 27 09:19 nvidia-cap1
cr--r--r-- 1 root root 237, 2 Apr 27 09:19 nvidia-cap2
```

On Proxmox Host

`/etc/pve/lxc/201.conf`:

```
arch: amd64
cores: 4
features: nesting=1
hostname: jellyfin
memory: 2048
net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=BC:24:11:71:73:D6,ip=dhcp,type=veth
ostype: debian
rootfs: local-lvm:vm-201-disk-0,size=12G
swap: 4096
unprivileged: 1
lxc.cgroup2.devices.allow: c 195:0 rwm
lxc.cgroup2.devices.allow: c 195:255 rwm
lxc.cgroup2.devices.allow: c 234:0 rwm
lxc.cgroup2.devices.allow: c 234:1 rwm
lxc.cgroup2.devices.allow: c 237:1 rwm
lxc.cgroup2.devices.allow: c 237:2 rwm
lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file
lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-caps/nvidia-cap1 dev/nvidia-caps/nvidia-cap1 none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-caps/nvidia-cap2 dev/nvidia-caps/nvidia-cap2 none bind,optional,create=file
lxc.idmap: u 0 100000 65536
lxc.idmap: g 0 0 1
lxc.idmap: g 1 100000 65536
```

I've tried running through the entire process multiple times on fresh installs of Proxmox, and always get a similar result.
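
For anyone comparing this against their own setup: each `lxc.cgroup2.devices.allow` line in the config is just the major:minor pair from the `ls -l /dev/nvidia*` output, and each `lxc.mount.entry` bind-mounts the same path into the container. Below is a rough sketch of a helper that regenerates both sets of lines from `ls -l` output so they can be compared with the `.conf` (by eye or with `diff`). The function name `nvidia_lxc_lines` is my own hypothetical choice, not something from the guide, and it assumes the usual `ls -l` layout where field 5 is `MAJOR,` and field 6 is `MINOR`.

```sh
#!/bin/sh
# Hypothetical helper (not from the guide): read `ls -l` output on stdin and
# print lxc.cgroup2.devices.allow lines, then lxc.mount.entry lines, for every
# character device listed, in the same order they appear in the input.
nvidia_lxc_lines() {
  awk '
    # When ls lists a directory argument, it prints "<dir>:" before its entries.
    /:$/ { dir = substr($0, 1, length($0) - 1); next }
    # Character-device lines start with "c"; field 5 is "MAJOR," and field 6 is MINOR.
    /^c/ {
      major = $5
      sub(/,$/, "", major)
      path = $NF
      # Entries listed inside a directory only show the bare name, so prefix it.
      if (substr(path, 1, 1) != "/") path = dir "/" path
      n++
      allow[n] = "lxc.cgroup2.devices.allow: c " major ":" $6 " rwm"
      # The mount target is relative to the container rootfs (no leading slash).
      mount[n] = "lxc.mount.entry: " path " " substr(path, 2) " none bind,optional,create=file"
    }
    END {
      for (i = 1; i <= n; i++) print allow[i]
      for (i = 1; i <= n; i++) print mount[i]
    }'
}
```

On the host this would be run as `ls -l /dev/nvidia* | nvidia_lxc_lines`. Fed the `ls -l` output posted above, it should print the same twelve `lxc.cgroup2.devices.allow` / `lxc.mount.entry` lines, in the same order, as `201.conf`.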

If it matters at all, here are some basic hardware specs:

| Component | Model |
| --- | --- |
| CPU | AMD Ryzen 2600 |
| GPU | MSI GTX 1070 |
| RAM | 16GB |
| Motherboard | ASUS Prime B450M-A |

Any ideas what might be going on, or what I could check? Thank you so much in advance!