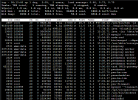

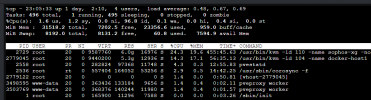

```
top - 09:44:49 up 248 days, 16:24, 2 users, load average: 3.78, 3.81, 3.76
Tasks: 768 total, 1 running, 767 sleeping, 0 stopped, 0 zombie
%Cpu(s): 6.5 us, 0.1 sy, 0.0 ni, 93.1 id, 0.0 wa, 0.0 hi, 0.2 si, 0.0 st
MiB Mem : 128826.0 total, 2703.7 free, 95415.0 used, 30707.4 buff/cache
MiB Swap: 0.0 total, 0.0 free, 0.0 used. 30980.7 avail Mem

  PID USER     PR  NI    VIRT    RES    SHR S  %CPU  %MEM     TIME+ COMMAND
 9684 root     20   0   17.2g  16.1g  14264 S 209.6  12.8 158751:18 kvm
27332 root     20   0 4853608   4.0g  14324 S 106.0   3.2  71383:20 kvm
39270 root     20   0 2954100   2.1g  13512 S   4.6   1.6  14212:50 kvm
10729 root     20   0 2971308   2.1g  14244 S   0.3   1.6   1413:16 kvm
 2613 root     20   0 2677528 828176   5936 S   0.0   0.6   1222:42 glusterfsd
 2636 root     rt   0  585564 189972  63860 S   1.3   0.1   5149:37 corosync
 1432 root     20   0  190672 144608  41864 S   0.0   0.1  27:54.22 systemd-journal
39508 root     20   0  369772 138196  10396 S   0.0   0.1   0:01.84 pvedaemon worke
31250 root     20   0  369508 137452   9872 S   0.0   0.1   0:03.91 pvedaemon worke
12176 www-data 20   0  354644 137068  17624 S   0.0   0.1   1:54.64 pveproxy
 2728 www-data 20   0  368412 136760  10612 S   0.0   0.1   0:03.12 pveproxy worker
12430 root     20   0  369528 136636   9140 S   0.0   0.1   0:00.61 pvedaemon worke
19016 www-data 20   0  368064 135852  10000 S   0.0   0.1   0:00.56 pveproxy worker
14891 www-data 20   0  367832 135744  10000 S   0.0   0.1   0:00.69 pveproxy worker
 2708 root     20   0  353056 120616   2548 S   0.0   0.1   3:37.77 pvedaemon
 2716 root     20   0  337812 101460   7948 S   0.0   0.1  40:13.31 pve-ha-crm
 2888 root     20   0  337396 101356   8268 S   0.0   0.1  83:45.84 pve-ha-lrm
 2661 root     20   0  304248  89760   8936 S   0.0   0.1   1605:08 pvestatd
 2657 root     20   0  305916  89556   7176 S   0.0   0.1 615:00.27 pve-firewall
 2567 root     20   0 2407272  72100  52000 S   0.0   0.1 518:03.05 pmxcfs
33189 root     20   0  183896  60348   4524 S   0.0   0.0   0:06.60 chef-client
 2886 www-data 20   0   70320  56264   7512 S   0.0   0.0   4:25.34 spiceproxy
 6690 www-data 20   0   70568  52232   3260 S   0.0   0.0   0:00.92 spiceproxy work
40382 consul   20   0  182892  25204   6956 S   0.3   0.0 396:40.09 consul
33452 root     20   0  729808  24900   7624 S   0.0   0.0   1441:59 node_exporter
 3105 root     20   0  573900  14024   5668 S   0.0   0.0  65:30.70 glusterfs
 2235 root     20   0  584376  11184   6028 S   0.0   0.0  32:02.57 glusterd
 2655 root     20   0   27000  10224   9092 S   0.0   0.0   9:42.22 corosync-qdevic
 2622 root     20   0  811600  10000   4356 S   0.0   0.0   7:18.26 glusterfs
    1 root     20   0  171308   8708   5408 S   0.0   0.0 143:01.51 systemd
23795 root     20   0   21400   8156   6568 S   0.0   0.0   0:00.03 systemd
23789 root     20   0   16896   7280   6172 S   0.0   0.0   0:00.01 sshd
40807 postfix  20   0   43832   6556   5692 S   0.0   0.0   0:00.00 pickup
33191 systemd+ 20   0   93080   6404   5480 S   0.0   0.0   0:06.06 systemd-timesyn
```