Hello!

We have quite large datasets and VM backups (about 16 TB for the biggest VM, for example) and take hourly snapshots. The deltas are not that large, but the complete VM is around 16 TB.

The problem now is that garbage collection takes forever ...

In general, the performance of the whole backup server is very slow ...

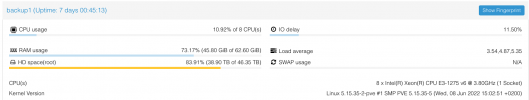

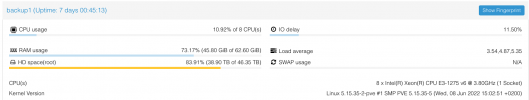

The PBS is running on a dedicated server in a datacenter.

specs:

Datastore:

The GC task has been running for 6 days now ...

Please note: between 07-01 and 07-04 only 1 % was marked, and there has been no further progress since then. The task is still in phase 1 and has not even started phase 2.

We are running the latest Backup Server 2.2-3.

We are running 8 PBS servers in total and see this behaviour on all of them: when the datasets get large, the performance / usability goes to zero ...

Any ideas?
