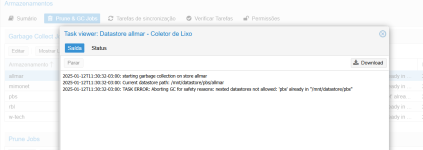

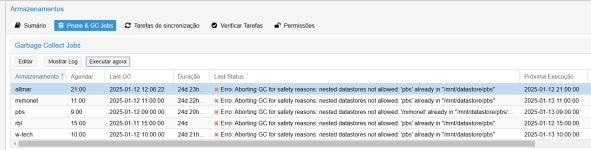

### cat /etc/proxmox-backup/datastore.cfg

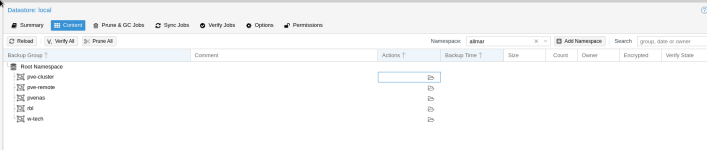

    datastore: backuppool
        path /mnt/datastore/backuppool

    datastore: edv_vm_backups
        comment All IT VMs
        gc-schedule daily
        notify gc=error,sync=error,verify=error
        notify-user martin@pbs
        path /mnt/datastore/backuppool/edv_vm_backups

    datastore: martin_backups
        comment Test machines and private
        gc-schedule daily
        notify gc=error,sync=error,verify=error
        notify-user martin@pbs
        path /mnt/datastore/backuppool/martin_backups

    datastore: nc_data_filebackup
        comment Nextcloud data at file level
        gc-schedule daily
        notify gc=error,sync=error,verify=error
        notify-user martin@pbs
        path /mnt/datastore/backuppool/nc_data_filebackup

    datastore: nfs_export
        comment
        gc-schedule daily
        path /mnt/datastore/backuppool/nfs_export

    datastore: www_fileadmin
        comment fileadmin and mysql www
        gc-schedule daily
        path /mnt/datastore/backuppool/www_fileadmin
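
In the listing above, every other datastore path sits below the path of `backuppool`. A minimal Python sketch for checking a config like this for such overlaps might look as follows. It assumes the simple layout shown here (a `datastore: <name>` line followed by `key value` lines); the function names `parse_datastore_cfg` and `nested_datastores` and the shortened `SAMPLE` text are made up for illustration and are not part of Proxmox Backup Server.

```python
from pathlib import PurePosixPath

# Shortened sample in the same layout as the config above (illustrative only).
SAMPLE = """\
datastore: backuppool
    path /mnt/datastore/backuppool

datastore: edv_vm_backups
    gc-schedule daily
    path /mnt/datastore/backuppool/edv_vm_backups
"""


def parse_datastore_cfg(text):
    """Collect the properties of each 'datastore: <name>' section into a dict."""
    stores = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # blank lines only separate sections
        if line.startswith("datastore:"):
            current = line.split(":", 1)[1].strip()
            stores[current] = {}
        elif current is not None:
            # A property line is "key value"; the value may be empty.
            parts = line.split(None, 1)
            stores[current][parts[0]] = parts[1] if len(parts) > 1 else ""
    return stores


def nested_datastores(stores):
    """Return (inner, outer) pairs where inner's path lies below outer's path."""
    pairs = []
    for inner, inner_props in stores.items():
        for outer, outer_props in stores.items():
            if inner == outer:
                continue
            if "path" not in inner_props or "path" not in outer_props:
                continue
            inner_path = PurePosixPath(inner_props["path"])
            outer_path = PurePosixPath(outer_props["path"])
            if outer_path in inner_path.parents:
                pairs.append((inner, outer))
    return pairs


if __name__ == "__main__":
    stores = parse_datastore_cfg(SAMPLE)
    for name, props in stores.items():
        print(name, props.get("path"))
    for inner, outer in nested_datastores(stores):
        print(f"{inner} is located inside {outer}")
```

To run it against the real file, the contents of `/etc/proxmox-backup/datastore.cfg` could be read and passed to `parse_datastore_cfg` in place of `SAMPLE`.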