I just wanted to let you know that online migration of a VM to a different node, onto a differently named local storage, seems to work perfectly for us. Good work, PM team!

Does anyone have any problems with it?

Here is a case where I migrated a VM from an HDD pool on one node to an SSD pool on a different node.

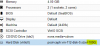

```
root@p29:~# qm migrate 100 p30 --online --with-local-disks --targetstorage local-zfs-ssd
2019-02-27 16:21:45 starting migration of VM 100 to node 'p30' (10.31.1.30)
2019-02-27 16:21:45 found local disk 'local-zfs:vm-100-disk-0' (in current VM config)
2019-02-27 16:21:45 copying disk images
2019-02-27 16:21:45 starting VM 100 on remote node 'p30'
2019-02-27 16:21:48 start remote tunnel
2019-02-27 16:21:48 ssh tunnel ver 1
2019-02-27 16:21:48 starting storage migration
2019-02-27 16:21:48 scsi0: start migration to nbd:10.31.1.30:60000:exportname=drive-scsi0
drive mirror is starting for drive-scsi0
drive-scsi0: transferred: 959447040 bytes remaining: 15146680320 bytes total: 16106127360 bytes progression: 5.96 % busy: 1 ready: 0
drive-scsi0: transferred: 2138046464 bytes remaining: 13968080896 bytes total: 16106127360 bytes progression: 13.27 % busy: 1 ready: 0
drive-scsi0: transferred: 3299868672 bytes remaining: 12806258688 bytes total: 16106127360 bytes progression: 20.49 % busy: 1 ready: 0
drive-scsi0: transferred: 4488953856 bytes remaining: 11617173504 bytes total: 16106127360 bytes progression: 27.87 % busy: 1 ready: 0
drive-scsi0: transferred: 5644484608 bytes remaining: 10461642752 bytes total: 16106127360 bytes progression: 35.05 % busy: 1 ready: 0
drive-scsi0: transferred: 6823084032 bytes remaining: 9283043328 bytes total: 16106127360 bytes progression: 42.36 % busy: 1 ready: 0
drive-scsi0: transferred: 7998537728 bytes remaining: 8107589632 bytes total: 16106127360 bytes progression: 49.66 % busy: 1 ready: 0
drive-scsi0: transferred: 9153019904 bytes remaining: 6953107456 bytes total: 16106127360 bytes progression: 56.83 % busy: 1 ready: 0
drive-scsi0: transferred: 10321133568 bytes remaining: 5784993792 bytes total: 16106127360 bytes progression: 64.08 % busy: 1 ready: 0
drive-scsi0: transferred: 11494490112 bytes remaining: 4611637248 bytes total: 16106127360 bytes progression: 71.37 % busy: 1 ready: 0
drive-scsi0: transferred: 12672040960 bytes remaining: 3434086400 bytes total: 16106127360 bytes progression: 78.68 % busy: 1 ready: 0
drive-scsi0: transferred: 13851688960 bytes remaining: 2254569472 bytes total: 16106258432 bytes progression: 86.00 % busy: 1 ready: 0
drive-scsi0: transferred: 15032385536 bytes remaining: 1073872896 bytes total: 16106258432 bytes progression: 93.33 % busy: 1 ready: 0
drive-scsi0: transferred: 16106258432 bytes remaining: 0 bytes total: 16106258432 bytes progression: 100.00 % busy: 0 ready: 1
all mirroring jobs are ready
2019-02-27 16:22:11 starting online/live migration on unix:/run/qemu-server/100.migrate
2019-02-27 16:22:11 migrate_set_speed: 8589934592
2019-02-27 16:22:11 migrate_set_downtime: 0.1
2019-02-27 16:22:11 set migration_caps
2019-02-27 16:22:12 set cachesize: 536870912
2019-02-27 16:22:12 start migrate command to unix:/run/qemu-server/100.migrate
2019-02-27 16:22:13 migration status: active (transferred 270943943, remaining 96870400), total 4237107200)
2019-02-27 16:22:13 migration xbzrle cachesize: 536870912 transferred 0 pages 0 cachemiss 0 overflow 0
2019-02-27 16:22:14 migration speed: 154.77 MB/s - downtime 43 ms
2019-02-27 16:22:14 migration status: completed
drive-scsi0: transferred: 16106258432 bytes remaining: 0 bytes total: 16106258432 bytes progression: 100.00 % busy: 0 ready: 1
all mirroring jobs are ready
drive-scsi0: Completing block job...
drive-scsi0: Completed successfully.
drive-scsi0 : finished
2019-02-27 16:22:19 migration finished successfully (duration 00:00:34)
```
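The log only prints a speed for the RAM phase (`migration speed: 154.77 MB/s`); the disk-copy rate has to be worked out from the timestamps around the drive mirror. Below is a small sketch that does this from a saved copy of the output. It assumes you captured the log to a file (for example by piping `qm migrate ...` through `tee`; the file name `migrate.log` is just an illustration), that the line formats match the log above, and that the storage phase does not cross midnight. `mirror_summary` is a hypothetical helper, not part of Proxmox. Because the log does not timestamp the moment the mirror became ready, it measures up to the start of the live migration, so the figure is a slightly pessimistic estimate.

```shell
#!/bin/sh
# Hypothetical helper (not part of Proxmox): estimate the average drive-mirror
# throughput from a saved `qm migrate` log. Assumes the line formats shown in
# the log above and that the storage phase does not cross midnight.
mirror_summary() {
    awk '
        # Convert an HH:MM:SS timestamp into seconds since midnight.
        function secs(t,    p) {
            split(t, p, ":")
            return p[1] * 3600 + p[2] * 60 + p[3]
        }
        # The storage phase starts here ...
        index($0, "starting storage migration") { t_start = secs($2) }
        # ... and the bulk copy is done by the time the live (RAM) migration starts.
        index($0, "starting online/live migration") { t_end = secs($2) }
        # Remember the last "transferred:" value reported for each drive.
        /^drive-[a-z0-9]+: transferred:/ {
            for (i = 1; i < NF; i++)
                if ($i == "transferred:") last[$1] = $(i + 1)
        }
        END {
            for (d in last) bytes += last[d]
            if (t_start == "" || t_end <= t_start || bytes == 0) {
                print "mirror phase not found in log" > "/dev/stderr"
                exit 1
            }
            elapsed = t_end - t_start
            # %.0f instead of %d so large byte counts print correctly in mawk too.
            printf "mirrored %.0f bytes in %d s (~%.1f MB/s)\n", bytes, elapsed, bytes / elapsed / 1e6
        }
    ' "$1"
}
```

Run as `mirror_summary migrate.log`. For the migration above it should report 16106258432 bytes in 23 s (16:21:48 to 16:22:11), i.e. roughly 700 MB/s for the HDD-to-SSD copy, compared with about 155 MB/s for the RAM transfer.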