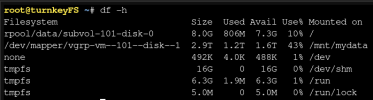

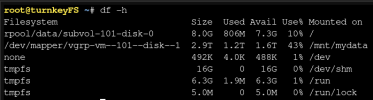

According to the TurnKey File Server running in one of my LXCs (ID 101), I am using 1.2 TB of the 2.9 TB total capacity of the LVM-thin volume I have mounted.

The GUI, however, shows that I'm using 2.7TB of that volume.

After reading numerous other threads, I ran:

    # pct fstrim 101

The command reports that 1.7 TB was trimmed. That is the amount I expected after manually deleting files in the .recycle directory from a shell inside the LXC.

After the fstrim, the disk usage shown in the GUI for that volume is unchanged (still 2.7 TB used). Rebooting doesn't change the GUI figure either. After a reboot, fstrim reports trimming the same 1.7 TB again, as if it had never run.

Any thoughts on what's happening? Did I screw up by deleting the files in /mnt/mydata/.recycle manually from the CLI instead of running fstrim in the first place? If so, how do I fix it?

I've only been using PVE for about a month, and the concept of LVM is pretty new to me. I didn't know until today that fstrim existed.

Any help is appreciated and I'm happy to give any outputs that might help diagnose.

Thanks