Daily backups are scheduled from PVE to PBS.

pve-manager/7.1-6/4e61e21c (running kernel: 5.13.19-1-pve)

proxmox-backup-server 2.1.4-1 running version: 2.1.4

I've only seen this on disks formatted with btrfs; disks with xfs seem OK for all backups on all VMs.

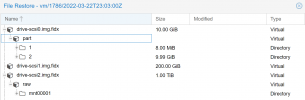

- For many VMs, all backups are fine: "File restore" | expand "drive-scsi1.img.fidx" | expand "raw", and the content is listed and can be downloaded.

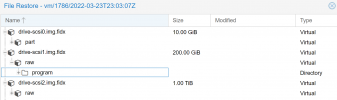

- For at least one VM, "File restore" works for one backup, but in the other backups it is not possible to expand the disk and list its content.

- For at least one VM, "File restore" cannot expand the disk in any of the available backups.

This is something of a showstopper for PBS in our environment.

A solution, or a way to troubleshoot this, would be appreciated.

/Bengt