Hi, first-time poster here. I'm also working on Fibre Channel (FC) with Proxmox as a proof of concept (PoC) before migrating my homelab away from VMware.

Topology:

I have an all-flash SAN running ESOS (Enterprise Storage OS), a FOSS storage OS that is essentially SCST under the hood. It has 16 SSDs behind a RAID controller, presented as several LUNs in ESOS. ESOS is connected to my Brocade SAN switch with three 8 Gb/s ports. Proxmox currently runs on a Minisforum MS-01 with an FC card (a single 4 Gb/s port).

So I currently have 3 paths to the storage.
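A rough sketch of what this topology means for bandwidth. It assumes all three ESOS ports are zoned to the single host port, and it uses the commonly quoted nominal per-direction rates for Fibre Channel (about 400 MB/s for 4GFC, 800 MB/s for 8GFC). All three paths leave the host through the same 4 Gb/s port, so that one link caps the aggregate; the `dd` figures from the test further down (1 GiB in 7.58351 s) are plugged in for comparison.

```shell
#!/bin/sh
# Back-of-the-envelope path and bandwidth arithmetic for the topology above.
# Assumption: every ESOS target port is zoned to the single host port.
host_ports=1
target_ports=3

# Nominal per-direction throughput in MB/s (commonly quoted FC figures).
mbps_4gfc=400
mbps_8gfc=800

paths=$((host_ports * target_ports))
host_cap=$((host_ports * mbps_4gfc))
target_cap=$((target_ports * mbps_8gfc))

# Traffic has to cross both sides, so the slower side bounds throughput.
if [ "$host_cap" -lt "$target_cap" ]; then
    limit=$host_cap
else
    limit=$target_cap
fi

# Figures from the dd run: bytes written and elapsed seconds.
dd_bytes=1073741824
dd_secs=7.58351
measured=$(awk -v b="$dd_bytes" -v s="$dd_secs" 'BEGIN { printf "%.1f", b / s / 1000000 }')
share=$(awk -v m="$measured" -v l="$limit" 'BEGIN { printf "%.0f", 100 * m / l }')

echo "paths=$paths limit=${limit}MB/s measured=${measured}MB/s (${share}% of limit)"
```

With these inputs it reports 3 paths, a 400 MB/s ceiling set by the host port, and the single synchronous `dd` write at roughly a third of that ceiling.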

Before doing anything in Proxmox, I passed the FC HBA through (PCI passthrough) to a Windows Server VM to upgrade the card's firmware. While there, I also checked multipath and ran a simple speed test (it was much faster, but that might not be relevant).

I installed multipath-tools and tried to create a multipath config. This took me ages until I found a hint that Debian needs `find_multipaths yes`; without it, I was not able to do anything.

After reading this thread, my output looks slightly different, and I can't get the new "disk" to show up in the GUI.

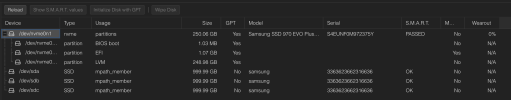

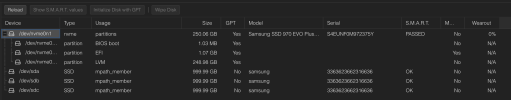


Note: the bindings file (`/etc/multipath/bindings`) was empty, so I added my alias to it manually, if that makes sense.

Here is some "debugging" output:

```
NAME                FSTYPE       FSVER LABEL UUID                                 FSAVAIL FSUSE% MOUNTPOINTS
sda                 mpath_member
└─fc_esos_900_flash ext4         1.0         87f32494-5dfb-44d2-b685-12c1750c354f
sdb                 mpath_member
└─fc_esos_900_flash ext4         1.0         87f32494-5dfb-44d2-b685-12c1750c354f
sdc                 mpath_member
└─fc_esos_900_flash ext4         1.0         87f32494-5dfb-44d2-b685-12c1750c354f
```
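This listing is what a working multipath setup should look like: each SCSI path (`sda`, `sdb`, `sdc`) is an `mpath_member`, and the same ext4 UUID appears under each of them because there is only one map on top. The device to use is therefore `/dev/mapper/fc_esos_900_flash`, never an individual `/dev/sdX`. A minimal sketch for summarising such a listing, assuming it has exactly the shape shown above (header row, tree-drawn children, no label on the map); `summarize_paths` is a made-up helper name.

```shell
#!/bin/sh
# Summarise lsblk-style output: how many SCSI paths are multipath members,
# and how many distinct multipath maps sit on top of them.
# Assumption: input looks like the listing above (header first, tree-drawn children).
summarize_paths() {
    awk '
        NR == 1 { next }                       # skip the header row
        $2 == "mpath_member" { paths++; next } # one line per SCSI path
        NF >= 2 {
            name = $1
            sub(/.*─/, "", name)               # strip the tree-drawing prefix
            if (!(name in seen)) { seen[name] = $2; maps++ }
        }
        END {
            printf "paths=%d maps=%d", paths, maps
            for (m in seen) printf " %s(%s)", m, seen[m]
            printf "\n"
        }
    '
}

summarize_paths <<'EOF'
NAME                FSTYPE       FSVER LABEL UUID                                 FSAVAIL FSUSE% MOUNTPOINTS
sda                 mpath_member
└─fc_esos_900_flash ext4         1.0         87f32494-5dfb-44d2-b685-12c1750c354f
sdb                 mpath_member
└─fc_esos_900_flash ext4         1.0         87f32494-5dfb-44d2-b685-12c1750c354f
sdc                 mpath_member
└─fc_esos_900_flash ext4         1.0         87f32494-5dfb-44d2-b685-12c1750c354f
EOF
```

For the listing above this prints `paths=3 maps=1 fc_esos_900_flash(ext4)`, which matches the three paths reported by `multipath -ll`.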

```
fc_esos_900_flash (23363623662316636) dm-6 SCST_FIO,samsung
size=931G features='1 queue_if_no_path' hwhandler='0' wp=rw
`-+- policy='round-robin 0' prio=0 status=active
  |- 0:0:0:0 sda 8:0  active undef running
  |- 0:0:2:0 sdb 8:16 active undef running
  `- 0:0:3:0 sdc 8:32 active undef running
```

My multipath.conf:

```
devices {
    device {
        vendor "SCST_FIO|SCST_BIO"
        product "*"
        path_selector "round-robin 0"
        #path_grouping_policy multibus
        path_grouping_policy group_by_prio
        rr_min_io 100
    }
}

defaults {
    find_multipaths yes
    polling_interval 2
    path_selector "round-robin 0"
    path_grouping_policy multibus
    uid_attribute ID_SERIAL
    rr_min_io 100
    failback immediate
    no_path_retry queue
    user_friendly_names yes
}

multipaths {
    multipath {
        wwid 23363623662316636
        alias fc_esos_900_flash
    }
}
```

The rest:

`/etc/multipath/bindings`:

```
# Multipath bindings, Version : 1.0
# NOTE: this file is automatically maintained by the multipath program.
# You should not need to edit this file in normal circumstances.
#
# Format:
# alias wwid
#
fc_esos_900_flash 23363623662316636
```

`/etc/multipath/wwids`:

```
# Multipath wwids, Version : 1.0
# NOTE: This file is automatically maintained by multipath and multipathd.
# You should not need to edit this file in normal circumstances.
#
# Valid WWIDs:
/23363623662316636/
```

I also mounted the volume and ran `dd` to check that I had good connectivity:

```
dd if=/dev/zero of=/mnt/fc_esos_900_flash/test.img bs=1G count=1 oflag=dsync
1+0 records in
1+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 7.58351 s, 142 MB/s
```

I also created a PV:

```
pvcreate ESOS /dev/mapper/fc_esos_900_flash
No device found for ESOS.
WARNING: ext4 signature detected on /dev/mapper/fc_esos_900_flash at offset 1080. Wipe it? [y/n]: y
Wiping ext4 signature on /dev/mapper/fc_esos_900_flash.
Physical volume "/dev/mapper/fc_esos_900_flash" successfully created.

root@pve01:/mnt# pvdisplay
--- Physical volume ---
PV Name               /dev/nvme0n1p3
VG Name               pve
PV Size               231.88 GiB / not usable 2.16 MiB
Allocatable           yes
PE Size               4.00 MiB
Total PE              59362
Free PE               4097
Allocated PE          55265
PV UUID               eX6q9w-odRl-8AbX-dRH6-btAi-t5BX-4iZ1F8

"/dev/mapper/fc_esos_900_flash" is a new physical volume of "931.31 GiB"
--- NEW Physical volume ---
PV Name               /dev/mapper/fc_esos_900_flash
VG Name
PV Size               931.31 GiB
Allocatable           NO
PE Size               0
Total PE              0
Free PE               0
Allocated PE          0
PV UUID               82632d-F78n-OJfo-ue4M-1vkC-JhP1-RcxdY9
```

I'm not sure how to proceed. Are there any troubleshooting steps I have missed?
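Re-reading the `pvcreate` output: `pvcreate` only takes device paths, which is why it reported "No device found for ESOS." A volume group name belongs to `vgcreate` instead, and as far as I understand the VG then still has to be registered as Proxmox storage (Datacenter → Storage → Add → LVM, or `pvesm add lvm`) before it shows up in the GUI. Below is the untested sequence I plan to try next. `ESOS` is reused as the VG name from my `pvcreate` attempt, `esos-lvm` is a storage ID I made up, and the script only prints the commands unless `RUN` is set to an empty string.

```shell
#!/bin/sh
# Dry run by default: prints the commands instead of executing them.
# Run with RUN= (empty) to actually execute them.
RUN=${RUN-echo}

DEV=/dev/mapper/fc_esos_900_flash   # the multipath map, not an individual /dev/sdX
VG=ESOS                             # VG name originally passed to pvcreate
STORAGE=esos-lvm                    # hypothetical Proxmox storage ID

# The PV already exists (pvcreate succeeded), so only the VG is missing.
$RUN vgcreate "$VG" "$DEV"

# Register the VG as LVM storage for VM disks and container volumes.
# --shared 1 marks the storage as available on every node, since it sits on a SAN LUN.
$RUN pvesm add lvm "$STORAGE" --vgname "$VG" --content images,rootdir --shared 1
```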
