Hi,

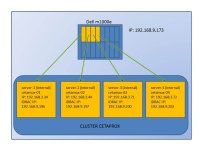

I'm trying to set up HA on my cluster. I have 4 nodes. I changed cluster.conf and validated it. My cluster.conf: http://pastebin.com/BcymtFCx

If I run fence_drac5 directly: fence_drac5 -m server-1 -l root -p dc4CEYaB -a 192.168.3.44 -o list -x.... it works correctly.

But if I execute "fence_node cetamox-01 -vv", I get this:

fence cetamox-01 dev 0.0 agent fence_drac5 result: error from agent

agent args: nodename=cetamox-01 agent=fence_drac5 ipaddr= 192.168.9.173 login=root module_name=server-1 passwd=password secure=1

fence cetamox-01 failed
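
To compare what fence_node passes to the agent with the manual fence_drac5 call, the "agent args" line can be split into one argument per line. This is only a small sketch, assuming GNU sed (for `\n` in the replacement); the variable names `args` and `pairs` are made up for illustration. It does not talk to the DRAC, it only reformats the text pasted above so that differences (for example the address, or stray whitespace inside a value) are easier to see.

```shell
# Agent args line copied verbatim from the fence_node output above.
args='nodename=cetamox-01 agent=fence_drac5 ipaddr= 192.168.9.173 login=root module_name=server-1 passwd=password secure=1'

# Start a new line before every "key=" token so each argument is on its own line.
# Anything that is not followed by "=" (such as the value after "ipaddr= ")
# stays attached to the preceding key, so embedded blanks remain visible.
pairs=$(printf '%s\n' "$args" | sed -E 's/ ([a-z_]+=)/\n\1/g')

printf '%s\n' "$pairs"
```

Each resulting line can then be checked by eye against the -a, -l, -m and -p options used in the manual command.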

When the cluster is running and I stop the RGManager service on a node, it works correctly and the VMs are started on another node. But if I cut the power to a node that is running VMs, it doesn't work and the VMs stop.

I have 2 LVM groups as storage, and they are shared correctly.

Do you have any idea what my problem could be?

Thanks.
