What I'm really missing is an option to set the volblocksize for individual zvols. Right now it isn't really possible to optimize storage performance per disk, even though ZFS itself would fully allow it.

Let's say I have this scenario:

ZFS Pool: ashift=12, two SSDs in a mirror

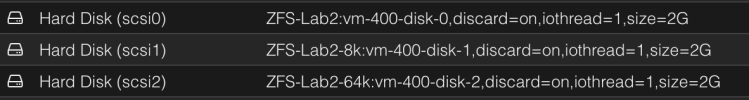

VM: The 1st zvol stores the ext4 root fs, so a volblocksize of 4K would be fine because it matches the 4K block size of ext4. The 2nd zvol only stores a MySQL DB doing reads/writes in 16K blocks, so I would like to use a 16K volblocksize. The 3rd zvol only stores big CCTV video streams, so I would like its volblocksize to be 1M.
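To make the mismatch concrete, here is a rough back-of-the-envelope sketch in Python. It assumes a deliberately simple model: every guest I/O is aligned, and ZFS has to process whole volblocksize blocks, ignoring compression, caching, metadata and write aggregation. The function name `amplification` is made up for this illustration.

```python
import math

KIB = 1024

def amplification(io_size: int, volblocksize: int) -> float:
    """Bytes ZFS has to touch per byte the guest asked for (simplified model)."""
    # An aligned I/O of io_size bytes covers ceil(io_size / volblocksize) blocks,
    # and each touched block is processed as a whole.
    blocks = math.ceil(io_size / volblocksize)
    return blocks * volblocksize / io_size

# 16K MySQL page on a matching 16K zvol: nothing wasted
print(amplification(16 * KIB, 16 * KIB))    # 1.0
# 4K ext4 block on a 16K zvol: four times the data touched
print(amplification(4 * KIB, 16 * KIB))     # 4.0
# 16K MySQL page on a 1M zvol: the whole 1M block is touched
print(amplification(16 * KIB, 1024 * KIB))  # 64.0
```

A result of 1.0 only means no extra bytes are touched in this model. A volblocksize smaller than the I/O size still splits each request across several blocks, which this sketch does not try to measure.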

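All of this can be achieved by manually creating the zvols (for example with `zfs create -V 32gb -o volblocksize=16k rpool/vm-100-disk-1`), then running `qm rescan --vmid 100` and attaching the manually created zvols to the VM with VMID 100. It's a bit annoying to do it that way, but it works.

But it only works as long as you don't need to restore that VM from backup.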

Because right now it works like this:

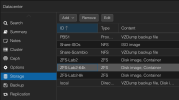

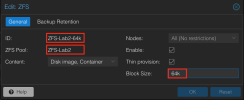

There is only one place where you can set a block size, and that is the ZFS storage itself (GUI: Datacenter -> Storage -> YourZFSPool -> Edit -> "Block size" text field). Every time a new zvol gets created, it is created with the volblocksize set in that ZFS storage's "Block size" text field. That means you can only set one global volblocksize for the entire ZFS storage, which is especially bad because the volblocksize can only be set at creation and can't be changed later.

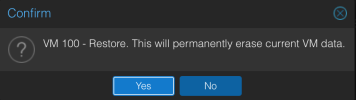

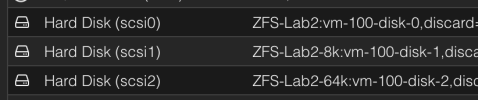

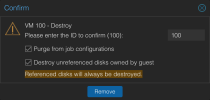

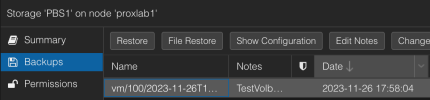

Now let's say I have my custom VM with three zvols with a volblocksize of 4K, 16K and 1M, and I need to restore it from backup. Restoring a backup will first delete the VM with all its zvols and then create a new VM from scratch, using the same VMID, based on the data stored in the backup. But all zvols will be newly created, and PVE creates them with the volblocksize set for the entire ZFS storage. Let's say the ZFS storage's "Block size" is set to the default 8K: my restored VM will now use an 8K volblocksize for all 3 disks, no matter what volblocksize was used previously.
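One way to see what a restore actually produced is to list the volblocksize of every zvol. The sketch below parses the tab-separated output of `zfs get -H -o name,value -t volume volblocksize`. The sample text and the dataset names in it are invented for illustration, not real output from my system, and `parse_volblocksizes` is just a helper name I picked.

```python
def parse_volblocksizes(zfs_output: str) -> dict[str, str]:
    """Map zvol name -> volblocksize from `zfs get -H -o name,value` output."""
    result = {}
    for line in zfs_output.splitlines():
        if not line.strip():
            continue  # skip empty lines
        # -H prints one property per line, fields separated by a single tab
        name, value = line.split("\t")
        result[name] = value
    return result

# Hypothetical output after restoring VM 100 onto a storage with "Block size" 8K
sample = (
    "rpool/vm-100-disk-0\t8K\n"
    "rpool/vm-100-disk-1\t8K\n"
    "rpool/vm-100-disk-2\t8K\n"
)
sizes = parse_volblocksizes(sample)
for name, value in sizes.items():
    print(f"{name}: {value}")
```

On a real host the input string could come from `subprocess.run([...], capture_output=True, text=True).stdout` instead of the hard-coded sample.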

What I would like to see is a global block size that you can set on the ZFS storage like now, but that is only used if it isn't overridden by the VM's config. In addition, I would like to see a new "Block size" field when creating a new virtual disk for a VM, and this value should be stored in the VM's config file like this:

`scsi1: MyZFSPool:vm-100-disk-0,cache=none,size=32G,blocksize=16k`

Each time PVE needs to create a new zvol (adding another disk to a VM, doing a migration or restoring a backup), it could then first look at the VM's config file to see if a block size is defined for that virtual disk. If it is, PVE creates the zvol with that value as the volblocksize. If it isn't, PVE uses the global block size of the ZFS storage as a fallback.
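The lookup order I have in mind could be sketched roughly like this in Python. This is only an illustration of the idea, not how PVE is implemented: the per-disk `blocksize=` option is the feature being requested and does not exist today, and both function names are hypothetical.

```python
from typing import Optional

def disk_blocksize(disk_value: str) -> Optional[str]:
    """Return the (hypothetical) blocksize option from a disk line, or None."""
    # The first comma-separated item is the volume ("storage:volname"),
    # everything after it is a key=value option.
    for option in disk_value.split(",")[1:]:
        key, sep, value = option.partition("=")
        if sep and key.strip() == "blocksize":
            return value.strip()
    return None

def resolve_volblocksize(disk_value: str, storage_default: str) -> str:
    """Per-disk setting wins; otherwise use the storage-wide block size."""
    return disk_blocksize(disk_value) or storage_default

# Disk with an explicit per-disk block size
print(resolve_volblocksize(
    "MyZFSPool:vm-100-disk-0,cache=none,size=32G,blocksize=16k", "8k"))  # 16k
# Disk without one falls back to the storage's "Block size"
print(resolve_volblocksize(
    "MyZFSPool:vm-100-disk-1,cache=none,size=32G", "8k"))                # 8k
```

Because the fallback is the existing storage-wide value, configs without the new option would behave exactly as they do now.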

That way nothing would change if you don't care about the volblocksize, as you can continue using the global block size defined by the ZFS storage. But if you care about it, you could override it for specific virtual disks.

One workaround for me was to create several datasets on the same pool and add each dataset as its own ZFS storage. Let's say I have a "ZFS_4k", a "ZFS_16k" and a "ZFS_1M" ZFS storage. I could then give each ZFS storage its own block size and choose the volblocksize for each zvol by storing it on the right ZFS storage.

But this also has two downsides:

1.) You get a lot of ZFS storages if you need a lot of different block sizes.

2.) When restoring a VM you can only select a single storage to which all virtual disks are restored. With the example above, the three zvols of that VM would need to be on three different ZFS storages. After a restore all three zvols would be on the same ZFS storage, so I would need to move two of them afterwards, causing additional downtime and SSD wear.

Would anyone else like to see this feature?

How hard would such a feature be to implement? It looks easy to me for ZFS, but maybe it would be problematic because other storage types would need to be supported too?

Should I create a feature request in the bug tracker or is there already a similar feature request?
