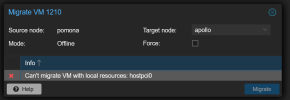

I can understand why migrating a VM might be blocked when the target host may not support the same PCI device pass-through entries. But there should at least be an option you can click to say you understand the implications, or a way to indicate that a target node is in the same logical group and has exactly the same capabilities.

I have 5 identical nodes in the cluster, all with GPUs installed in the same slot, so they have exactly the same PCI Express entries and mediated devices (mdevs).

It's annoying to have to remove the PCI device entry, migrate the VM, and then re-add the exact same entry on the new host.

Surely I can't be the only one who has a relatively homogeneous cluster and wants to do this?
