When I tried to start an LXC container, syslog showed:

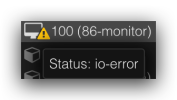


kernel: loop0: detected capacity change from 0 to 20971520

kernel: EXT4-fs warning (device loop0): ext4_multi_mount_protect:328: MMP interval 42 higher than expected, please wait.

CRON[21319]: pam_unix(cron:session): session closed for user root

kernel: loop: Write error at byte offset 37916672, length 4096.

kernel: I/O error, dev loop0, sector 74056 op 0x1:(WRITE) flags 0x3800 phys_seg 1 prio class 2

kernel: Buffer I/O error on dev loop0, logical block 9257, lost sync page write

pvestatd[1069]: unable to get PID for CT 104 (not running?)

pvedaemon[18764]: unable to get PID for CT 104 (not running?)

pvestatd[1069]: status update time (15.457 seconds)

Then I tried to start a VE, and a warning icon replaced the green check icon, showing io-error.

Manager Version:

pve-manager/8.1.3/b46aac3b42da5d15

Kernel Version:

Linux 6.5.11-4-pve (2023-11-20T10:19Z)
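
As a sanity check on the syslog lines above, the three loop0 error messages can be cross-checked against each other. This is only a rough sketch: it assumes the kernel counts sectors in 512-byte units and that the filesystem block size is 4096 bytes (a guess based on the 4096-byte write length in the log). The variable names are mine; the numbers are copied from the log lines.

```python
# Numbers copied from the kernel lines in the syslog above.
SECTOR_SIZE = 512   # assumption: sectors are reported in 512-byte units
BLOCK_SIZE = 4096   # assumption: block size, matching the 4096-byte write length

write_offset_bytes = 37916672   # "loop: Write error at byte offset 37916672"
failed_sector = 74056           # "I/O error, dev loop0, sector 74056"
failed_block = 9257             # "Buffer I/O error on dev loop0, logical block 9257"
capacity_sectors = 20971520     # "detected capacity change from 0 to 20971520"

# Convert the sector and block numbers to byte offsets.
sector_offset = failed_sector * SECTOR_SIZE
block_offset = failed_block * BLOCK_SIZE

# Size of the loop device in GiB, if the capacity is given in sectors.
capacity_gib = capacity_sectors * SECTOR_SIZE / 2**30

print(sector_offset == write_offset_bytes)  # True
print(block_offset == write_offset_bytes)   # True
print(capacity_gib)                         # 10.0
```

Under those assumptions all three messages point at the same 4 KiB write, about 36 MiB into a 10 GiB image, so they look like one failed write reported at three layers rather than three separate faults.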