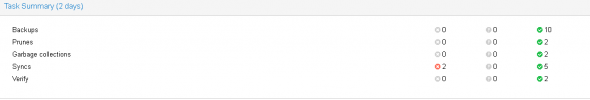

Here is a typical error from a sync task:


Task viewer: Sync Job PBS-:Filer1-WIN

S_HDD:s-02f0435e-75fd - Remote Sync

......

2022-03-14T06:02:45+01:00: re-sync snapshot "vm/1014/2022-03-09T08:00:02Z" done

2022-03-14T06:02:45+01:00: percentage done: 29.17% (14/48 groups)

2022-03-14T06:02:45+01:00: skipped: 17 snapshot(s) (2021-11-05T14:49:16Z .. 2022-03-02T08:00:02Z) older than the newest local snapshot

2022-03-14T06:02:46+01:00: percentage done: 31.25% (15/48 groups)

2022-03-14T06:02:46+01:00: sync group vm/1015 failed - unable to acquire lock on snapshot directory "/mnt/datastore/DS_HDD/vm/1015/2022-03-13T06:15:49Z" - internal error - tried creating snapshot that's already in use

2022-03-14T06:02:46+01:00: re-sync snapshot "vm/1016/2022-03-13T20:39:30Z"

2022-03-14T06:02:46+01:00: no data changes

2022-03-14T06:02:46+01:00: re-sync snapshot "vm/1016/2022-03-13T20:39:30Z" done

2022-03-14T06:02:46+01:00: percentage done: 33.33% (16/48 groups)

2022-03-14T06:02:46+01:00: skipped: 20 snapshot(s) (2021-11-28T20:05:37Z .. 2022-03-12T20:54:36Z) older than the newest local snapshot

2022-03-14T06:02:46+01:00: re-sync snapshot "vm/1018/2022-03-13T06:41:37Z"

2022-03-14T06:02:46+01:00: no data changes

2022-03-14T06:02:46+01:00: re-sync snapshot "vm/1018/2022-03-13T06:41:37Z" done

2022-03-14T06:02:46+01:00: percentage done: 35.42% (17/48 groups)

2022-03-14T06:02:46+01:00: skipped: 20 snapshot(s) (2021-11-28T06:27:18Z .. 2022-03-12T06:35:10Z) older than the newest local snapshot

2022-03-14T06:02:47+01:00: re-sync snapshot "vm/1019/2022-03-13T06:52:56Z"

2022-03-14T06:02:47+01:00: no data changes

2022-03-14T06:02:47+01:00: re-sync snapshot "vm/1019/2022-03-13T06:52:56Z" done

2022-03-14T06:02:47+01:00: percentage done: 37.50% (18/48 groups)

2022-03-14T06:02:47+01:00: skipped: 20 snapshot(s) (2021-11-28T06:38:39Z .. 2022-03-12T06:46:32Z) older than the newest local snapshot

2022-03-14T06:02:47+01:00: re-sync snapshot "vm/1020/2022-02-01T20:40:03Z"

2022-03-14T06:02:47+01:00: no data changes

2022-03-14T06:02:47+01:00: re-sync snapshot "vm/1020/2022-02-01T20:40:03Z" done

2022-03-14T06:02:47+01:00: percentage done: 39.58% (19/48 groups)

2022-03-14T06:02:47+01:00: skipped: 19 snapshot(s) (2021-11-28T20:05:00Z .. 2022-01-31T20:27:39Z) older than the newest local snapshot

2022-03-14T06:02:48+01:00: re-sync snapshot "vm/2000/2022-03-09T08:08:49Z"

2022-03-14T06:02:48+01:00: no data changes

2022-03-14T06:02:48+01:00: re-sync snapshot "vm/2000/2022-03-09T08:08:49Z" done

2022-03-14T06:02:48+01:00: percentage done: 41.67% (20/48 groups)

2022-03-14T06:02:48+01:00: skipped: 17 snapshot(s) (2021-11-05T14:49:16Z .. 2022-03-02T08:00:02Z) older than the newest local snapshot

2022-03-14T06:02:48+01:00: re-sync snapshot "vm/2001/2022-03-13T10:02:36Z"

2022-03-14T06:02:48+01:00: no data changes

2022-03-14T06:02:48+01:00: re-sync snapshot "vm/2001/2022-03-13T10:02:36Z" done

2022-03-14T06:02:48+01:00: percentage done: 43.75% (21/48 groups)

2022-03-14T06:02:48+01:00: skipped: 19 snapshot(s) (2021-12-26T10:07:45Z .. 2022-03-11T10:02:38Z) older than the newest local snapshot

2022-03-14T06:02:48+01:00: re-sync snapshot "vm/2002/2022-03-09T08:10:55Z"

2022-03-14T06:02:48+01:00: no data changes

2022-03-14T06:02:48+01:00: re-sync snapshot "vm/2002/2022-03-09T08:10:55Z" done

2022-03-14T06:02:48+01:00: percentage done: 45.83% (22/48 groups)

2022-03-14T06:02:48+01:00: skipped: 16 snapshot(s) (2021-11-05T14:49:16Z .. 2022-03-02T08:01:38Z) older than the newest local snapshot

2022-03-14T06:02:49+01:00: re-sync snapshot "vm/2003/2022-03-13T10:18:15Z"

2022-03-14T06:02:49+01:00: no data changes

2022-03-14T06:02:49+01:00: re-sync snapshot "vm/2003/2022-03-13T10:18:15Z" done

2022-03-14T06:02:49+01:00: percentage done: 47.92% (23/48 groups)

2022-03-14T06:02:49+01:00: skipped: 15 snapshot(s) (2022-02-20T10:20:55Z .. 2022-03-11T10:18:20Z) older than the newest local snapshot

2022-03-14T06:02:49+01:00: re-sync snapshot "vm/2004/2022-03-13T10:20:22Z"

2022-03-14T06:02:49+01:00: no data changes

2022-03-14T06:02:49+01:00: re-sync snapshot "vm/2004/2022-03-13T10:20:22Z" done

2022-03-14T06:02:49+01:00: percentage done: 50.00% (24/48 groups)

2022-03-14T06:02:49+01:00: skipped: 15 snapshot(s) (2022-02-20T10:22:32Z .. 2022-03-11T10:20:27Z) older than the newest local snapshot

2022-03-14T06:02:50+01:00: re-sync snapshot "vm/2501/2022-03-13T17:16:49Z"

2022-03-14T06:02:50+01:00: no data changes

2022-03-14T06:02:50+01:00: re-sync snapshot "vm/2501/2022-03-13T17:16:49Z" done

2022-03-14T06:02:50+01:00: percentage done: 52.08% (25/48 groups)

2022-03-14T06:02:50+01:00: skipped: 17 snapshot(s) (2022-02-06T17:07:55Z .. 2022-03-12T17:16:00Z) older than the newest local snapshot

2022-03-14T06:02:50+01:00: re-sync snapshot "vm/2502/2022-03-13T17:17:10Z"

2022-03-14T06:02:50+01:00: no data changes

2022-03-14T06:02:50+01:00: re-sync snapshot "vm/2502/2022-03-13T17:17:10Z" done

2022-03-14T06:02:50+01:00: percentage done: 54.17% (26/48 groups)

2022-03-14T06:02:50+01:00: skipped: 17 snapshot(s) (2022-02-06T17:08:17Z .. 2022-03-12T17:16:20Z) older than the newest local snapshot

2022-03-14T06:02:50+01:00: re-sync snapshot "vm/500/2022-03-13T10:00:02Z"

2022-03-14T06:02:50+01:00: no data changes

2022-03-14T06:02:50+01:00: re-sync snapshot "vm/500/2022-03-13T10:00:02Z" done

2022-03-14T06:02:50+01:00: percentage done: 56.25% (27/48 groups)

2022-03-14T06:02:50+01:00: skipped: 20 snapshot(s) (2021-11-28T10:00:02Z .. 2022-03-11T10:00:02Z) older than the newest local snapshot

2022-03-14T06:02:51+01:00: re-sync snapshot "vm/501/2022-03-13T10:06:18Z"

2022-03-14T06:02:51+01:00: no data changes

2022-03-14T06:02:51+01:00: re-sync snapshot "vm/501/2022-03-13T10:06:18Z" done

2022-03-14T06:02:51+01:00: percentage done: 58.33% (28/48 groups)

2022-03-14T06:02:51+01:00: skipped: 18 snapshot(s) (2022-01-30T10:08:22Z .. 2022-03-11T10:06:22Z) older than the newest local snapshot

2022-03-14T06:02:51+01:00: re-sync snapshot "vm/502/2022-03-13T10:06:23Z"

2022-03-14T06:02:51+01:00: no data changes

2022-03-14T06:02:51+01:00: re-sync snapshot "vm/502/2022-03-13T10:06:23Z" done

2022-03-14T06:02:51+01:00: percentage done: 60.42% (29/48 groups)

2022-03-14T06:02:51+01:00: skipped: 20 snapshot(s) (2021-11-28T10:06:19Z .. 2022-03-11T10:06:28Z) older than the newest local snapshot

2022-03-14T06:02:52+01:00: re-sync snapshot "vm/503/2022-03-13T17:00:08Z"

2022-03-14T06:02:52+01:00: no data changes

2022-03-14T06:02:52+01:00: re-sync snapshot "vm/503/2022-03-13T17:00:08Z" done

2022-03-14T06:02:52+01:00: percentage done: 62.50% (30/48 groups)

2022-03-14T06:02:52+01:00: skipped: 18 snapshot(s) (2022-01-30T17:00:07Z .. 2022-03-12T17:00:06Z) older than the newest local snapshot

2022-03-14T06:02:52+01:00: re-sync snapshot "vm/504/2022-03-13T10:00:02Z"

2022-03-14T06:02:52+01:00: re-sync snapshot "vm/504/2022-03-13T10:00:02Z" done

2022-03-14T06:02:52+01:00: percentage done: 64.58% (31/48 groups)

2022-03-14T06:02:52+01:00: skipped: 19 snapshot(s) (2021-11-24T18:00:02Z .. 2022-03-11T10:00:02Z) older than the newest local snapshot

2022-03-14T06:02:53+01:00: re-sync snapshot "vm/505/2022-03-13T09:00:01Z"

2022-03-14T06:02:53+01:00: re-sync snapshot "vm/505/2022-03-13T09:00:01Z" done

2022-03-14T06:02:53+01:00: percentage done: 66.67% (32/48 groups)

2022-03-14T06:02:53+01:00: skipped: 21 snapshot(s) (2021-10-31T09:00:02Z .. 2022-03-12T09:00:02Z) older than the newest local snapshot

2022-03-14T06:02:53+01:00: re-sync snapshot "vm/506/2022-03-13T10:00:09Z"

2022-03-14T06:02:53+01:00: re-sync snapshot "vm/506/2022-03-13T10:00:09Z" done

2022-03-14T06:02:53+01:00: percentage done: 68.75% (33/48 groups)

2022-03-14T06:02:53+01:00: skipped: 20 snapshot(s) (2021-11-28T10:00:02Z .. 2022-03-11T10:00:13Z) older than the newest local snapshot

2022-03-14T06:02:54+01:00: re-sync snapshot "vm/507/2022-03-13T00:30:08Z"

2022-03-14T06:02:54+01:00: re-sync snapshot "vm/507/2022-03-13T00:30:08Z" done

2022-03-14T06:02:54+01:00: percentage done: 70.74% (33/48 groups, 21/22 snapshots in group #34)

2022-03-14T06:02:54+01:00: sync snapshot "vm/507/2022-03-14T00:30:05Z"

2022-03-14T06:02:54+01:00: sync archive qemu-server.conf.blob

2022-03-14T06:02:55+01:00: sync archive drive-scsi1.img.fidx

2022-03-14T06:11:39+01:00: downloaded 5894055751 bytes (10.74 MiB/s)

2022-03-14T06:11:39+01:00: sync archive drive-scsi0.img.fidx

2022-03-14T06:11:44+01:00: downloaded 51483852 bytes (10.68 MiB/s)

2022-03-14T06:11:44+01:00: got backup log file "client.log.blob"

2022-03-14T06:11:44+01:00: sync snapshot "vm/507/2022-03-14T00:30:05Z" done

2022-03-14T06:11:44+01:00: percentage done: 70.83% (34/48 groups)

2022-03-14T06:11:44+01:00: skipped: 20 snapshot(s) (2021-11-28T00:30:01Z .. 2022-03-12T00:30:05Z) older than the newest local snapshot

2022-03-14T06:11:44+01:00: re-sync snapshot "vm/508/2022-03-13T10:07:43Z"

2022-03-14T06:11:45+01:00: re-sync snapshot "vm/508/2022-03-13T10:07:43Z" done

2022-03-14T06:11:45+01:00: percentage done: 72.92% (35/48 groups)

2022-03-14T06:11:45+01:00: skipped: 20 snapshot(s) (2021-11-28T10:07:41Z .. 2022-03-11T10:07:49Z) older than the newest local snapshot

2022-03-14T06:11:45+01:00: re-sync snapshot "vm/509/2022-03-13T10:00:14Z"

2022-03-14T06:11:45+01:00: re-sync snapshot "vm/509/2022-03-13T10:00:14Z" done

2022-03-14T06:11:45+01:00: percentage done: 75.00% (36/48 groups)

2022-03-14T06:11:45+01:00: skipped: 16 snapshot(s) (2022-02-13T10:00:14Z .. 2022-03-11T10:00:17Z) older than the newest local snapshot

2022-03-14T06:11:45+01:00: re-sync snapshot "vm/510/2022-03-13T10:08:00Z"

2022-03-14T06:11:45+01:00: re-sync snapshot "vm/510/2022-03-13T10:08:00Z" done

2022-03-14T06:11:45+01:00: percentage done: 77.08% (37/48 groups)

2022-03-14T06:11:45+01:00: skipped: 19 snapshot(s) (2021-11-28T10:07:45Z .. 2022-03-11T10:07:57Z) older than the newest local snapshot

2022-03-14T06:11:46+01:00: re-sync snapshot "vm/511/2022-03-13T10:00:21Z"

2022-03-14T06:11:46+01:00: re-sync snapshot "vm/511/2022-03-13T10:00:21Z" done

2022-03-14T06:11:46+01:00: percentage done: 79.17% (38/48 groups)

2022-03-14T06:11:46+01:00: skipped: 20 snapshot(s) (2021-11-28T10:06:43Z .. 2022-03-11T10:00:22Z) older than the newest local snapshot

2022-03-14T06:11:46+01:00: re-sync snapshot "vm/512/2022-03-13T17:00:30Z"

2022-03-14T06:11:46+01:00: re-sync snapshot "vm/512/2022-03-13T17:00:30Z" done

2022-03-14T06:11:46+01:00: percentage done: 81.25% (39/48 groups)

2022-03-14T06:11:46+01:00: skipped: 8 snapshot(s) (2021-11-25T18:00:02Z .. 2022-03-12T17:00:27Z) older than the newest local snapshot

2022-03-14T06:11:47+01:00: re-sync snapshot "vm/513/2022-03-13T10:00:22Z"

2022-03-14T06:11:47+01:00: re-sync snapshot "vm/513/2022-03-13T10:00:22Z" done

2022-03-14T06:11:47+01:00: percentage done: 83.33% (40/48 groups)

2022-03-14T06:11:47+01:00: skipped: 18 snapshot(s) (2021-12-26T18:00:02Z .. 2022-03-11T10:00:23Z) older than the newest local snapshot

2022-03-14T06:11:47+01:00: re-sync snapshot "vm/514/2022-03-13T17:00:36Z"

2022-03-14T06:11:47+01:00: re-sync snapshot "vm/514/2022-03-13T17:00:36Z" done

2022-03-14T06:11:47+01:00: percentage done: 85.42% (41/48 groups)

2022-03-14T06:11:47+01:00: skipped: 19 snapshot(s) (2021-12-26T18:00:12Z .. 2022-03-12T17:00:32Z) older than the newest local snapshot

2022-03-14T06:11:47+01:00: re-sync snapshot "vm/515/2022-01-02T18:00:27Z"

2022-03-14T06:11:47+01:00: no data changes

2022-03-14T06:11:47+01:00: re-sync snapshot "vm/515/2022-01-02T18:00:27Z" done

2022-03-14T06:11:47+01:00: percentage done: 87.50% (42/48 groups)

2022-03-14T06:11:47+01:00: skipped: 16 snapshot(s) (2021-12-05T18:00:22Z .. 2022-01-01T18:03:45Z) older than the newest local snapshot

2022-03-14T06:11:48+01:00: re-sync snapshot "vm/516/2022-01-13T17:02:09Z"

2022-03-14T06:11:48+01:00: no data changes

2022-03-14T06:11:48+01:00: re-sync snapshot "vm/516/2022-01-13T17:02:09Z" done

2022-03-14T06:11:48+01:00: percentage done: 89.58% (43/48 groups)

2022-03-14T06:11:48+01:00: skipped: 17 snapshot(s) (2021-12-10T18:00:02Z .. 2022-01-12T17:02:19Z) older than the newest local snapshot

2022-03-14T06:11:48+01:00: re-sync snapshot "vm/517/2022-01-13T17:22:49Z"

2022-03-14T06:11:48+01:00: no data changes

2022-03-14T06:11:48+01:00: re-sync snapshot "vm/517/2022-01-13T17:22:49Z" done

2022-03-14T06:11:48+01:00: percentage done: 91.67% (44/48 groups)

2022-03-14T06:11:48+01:00: skipped: 17 snapshot(s) (2021-12-10T18:00:07Z .. 2022-01-12T17:20:04Z) older than the newest local snapshot

2022-03-14T06:11:48+01:00: re-sync snapshot "vm/518/2022-01-19T17:02:24Z"

2022-03-14T06:11:48+01:00: no data changes

2022-03-14T06:11:48+01:00: re-sync snapshot "vm/518/2022-01-19T17:02:24Z" done

2022-03-14T06:11:48+01:00: percentage done: 93.75% (45/48 groups)

2022-03-14T06:11:48+01:00: skipped: 17 snapshot(s) (2021-12-19T18:00:01Z .. 2022-01-18T17:01:39Z) older than the newest local snapshot

2022-03-14T06:11:49+01:00: re-sync snapshot "vm/519/2022-01-19T17:18:19Z"

2022-03-14T06:11:49+01:00: no data changes

2022-03-14T06:11:49+01:00: re-sync snapshot "vm/519/2022-01-19T17:18:19Z" done

2022-03-14T06:11:49+01:00: percentage done: 95.83% (46/48 groups)

2022-03-14T06:11:49+01:00: skipped: 17 snapshot(s) (2021-12-19T18:00:05Z .. 2022-01-18T17:14:10Z) older than the newest local snapshot

2022-03-14T06:11:49+01:00: re-sync snapshot "vm/520/2022-01-25T17:09:06Z"

2022-03-14T06:11:49+01:00: no data changes

2022-03-14T06:11:49+01:00: re-sync snapshot "vm/520/2022-01-25T17:09:06Z" done

2022-03-14T06:11:49+01:00: percentage done: 97.92% (47/48 groups)

2022-03-14T06:11:49+01:00: skipped: 17 snapshot(s) (2021-12-26T18:00:24Z .. 2022-01-24T17:02:33Z) older than the newest local snapshot

2022-03-14T06:11:50+01:00: re-sync snapshot "vm/521/2022-02-27T17:05:15Z"

2022-03-14T06:11:50+01:00: no data changes

2022-03-14T06:11:50+01:00: re-sync snapshot "vm/521/2022-02-27T17:05:15Z" done

2022-03-14T06:11:50+01:00: percentage done: 100.00% (48/48 groups)

2022-03-14T06:11:50+01:00: skipped: 18 snapshot(s) (2021-12-26T18:00:28Z .. 2022-02-26T17:02:55Z) older than the newest local snapshot

2022-03-14T06:11:50+01:00: TASK ERROR: sync failed with some errors.
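The final `TASK ERROR` line only says the sync "failed with some errors". The actual cause appears much earlier: the `sync group vm/1015 failed - unable to acquire lock ...` line, while every other group synced or was skipped. Below is a minimal Python sketch that pulls such failure lines out of a saved task log. It assumes the log has been copied to plain text in exactly the line format shown above. The function names are hypothetical. The `progress_percent` helper is only an inference from the numbers in this log, not something taken from the Proxmox Backup Server source. For example, 33 finished groups plus 21 of 22 snapshots in group #34, out of 48 groups, gives the reported 70.74%.

```python
import re
from typing import List, NamedTuple, Optional


class GroupFailure(NamedTuple):
    timestamp: str
    group: str
    reason: str


# Matches the failure line format seen in the log above, e.g.
# 2022-03-14T06:02:46+01:00: sync group vm/1015 failed - unable to acquire lock ...
FAILED_GROUP_RE = re.compile(
    r"^(?P<timestamp>\S+): sync group (?P<group>\S+) failed - (?P<reason>.*)$"
)


def find_failed_groups(log_text: str) -> List[GroupFailure]:
    """Return every 'sync group ... failed' entry found in a saved task log."""
    failures = []
    for line in log_text.splitlines():
        match = FAILED_GROUP_RE.match(line.strip())
        if match:
            failures.append(GroupFailure(**match.groupdict()))
    return failures


def progress_percent(done_groups: int, total_groups: int,
                     done_snapshots: int = 0,
                     total_snapshots: Optional[int] = None) -> float:
    """Reproduce the 'percentage done' figure (formula inferred from this log only)."""
    fraction = float(done_groups)
    if total_snapshots:
        # Partial credit for the group that is currently being synced.
        fraction += done_snapshots / total_snapshots
    return round(100 * fraction / total_groups, 2)


if __name__ == "__main__":
    sample = """\
2022-03-14T06:02:46+01:00: percentage done: 31.25% (15/48 groups)

2022-03-14T06:02:46+01:00: sync group vm/1015 failed - unable to acquire lock on snapshot directory "/mnt/datastore/DS_HDD/vm/1015/2022-03-13T06:15:49Z" - internal error - tried creating snapshot that's already in use

2022-03-14T06:11:50+01:00: TASK ERROR: sync failed with some errors.
"""
    # Print each failed group together with the reason the sync gave for it.
    for failure in find_failed_groups(sample):
        print(f"{failure.group}: {failure.reason}")
    # Cross-check the partial-group percentage reported at 06:02:54.
    print(progress_percent(33, 48, 21, 22))
```

Grepping for the `failed` lines like this is usually faster than scrolling the GUI task viewer. That matters when only one group out of many is affected, as with `vm/1015` here.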