Hi,

We're trying to create a cluster of Proxmox nodes directly connected to the Internet (public IPs, inexpensive servers provided by OVH). No LAN.

We have 2 nodes at the moment, one in France (n1) and one in Germany (n2).

We're inexperienced with SDN.

Our objectives are:

- put all VMs on the same VNet / Subnet on any node

- VMs should be able to reach all other VMs on the same VNet regardless of which node they are actually located on.

- VMs should be able to access the Internet, and be reachable from the Internet if we set the right forwarding rules.

- Encrypted traffic between nodes

We have disabled all firewalls, at the datacenter level and node levels.

Ports 179/TCP and 4789/UDP can be reached from n1 to n2 and vice versa. We can talk to bgpd on the remote node with netcat.
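For reference, the TCP side of that check could be scripted roughly as below. This is only a minimal sketch: the helper name `tcp_port_open` is ours, and the peer address in the usage note is a placeholder. It does not cover 4789/UDP, since UDP is connectionless and a simple connect test tells you nothing there. A successful connect only shows that the port is reachable, not that a BGP session gets established.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False
```

Run from n1, something like `tcp_port_open("<public IP of n2>", 179)` should return `True` if bgpd is listening and reachable, and the same the other way round.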

1/ VXLAN

We have already successfully established a VXLAN that spans across both nodes. VMs can all ping each other, great. VXLAN traffic between nodes is encrypted using strongSwan (https://pve.proxmox.com/pve-docs/chapter-pvesdn.html#_vxlan_ipsec_encryption)

But we have failed to let them reach other networks / the Internet. We could not figure out how to define a gateway and let traffic exit or enter the VXLAN... Could someone point us to some helpful resources?

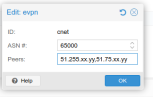

2/ EVPN

The possibility of managing multiple VXLANs sounds interesting, so we also tried EVPN.

It seems to be very straightforward in a "layer 2" environment. But we can't get it to work in our case (over the Internet / public networks).

Good:

- VMs can reach other VMs on the same node

- VMs can reach the internet

Issues:

- VMs on one node cannot reach VMs on the other node

- It seems that no traffic transits between nodes

- We don't understand how BGP works in this context, and can't see anything happening (vtysh# show bgp summary shows that the BGP connections remain in the "Active" state, with no information being exchanged between nodes)

Configuration on both nodes follows.

Thanks in advance.
