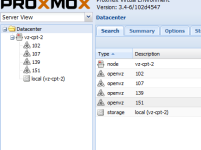

Everything greyed out. Any reason why?

- Thread starter sahostking

Looks like you lost quorum. What does

# pvecm status

output?

Did you change something in the cluster config? What do the logs say about corosync?

What do you mean by "I tweaked a few things"? With more details we can help better.

I don't have a cluster yet. This is just a test server.

But should it be running?

cman_tool: Cannot open connection to cman, is it running ?

is what I get. I tried starting it through the GUI, but it just stops again.

I've been busy with backups and the storage section, trying iSCSI targets, NFS and ZFS types.

No, on a one-node cluster it's actually OK that it isn't running, sorry.

Hmm, that should not trigger such a behaviour. Did you already restart the node, or at least "pve-cluster" and "pve-manager" services through:

# service pve-cluster restart

# service pve-manager restart

What do the (sys)logs say?

btw.: If it's a test server and you're still building your setup, it may be wise to switch to PVE 4.0; various bug fixes and some other nice stuff await you.
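The restart-and-check sequence suggested above can be sketched as a small shell helper. This is only a rough sketch: the `run` wrapper, the `DRY_RUN` switch and the function name are made up for illustration, and it assumes the node writes to `/var/log/syslog` (the Debian default). The two `service` commands are the ones from the post.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Restart the two services named in the post, then show recent log lines.
restart_and_check() {
  run service pve-cluster restart
  run service pve-manager restart
  # Assumption: syslog lives at /var/log/syslog on this node.
  run tail -n 50 /var/log/syslog
}
```

Setting `DRY_RUN=1` before calling `restart_and_check` only prints the three commands, which is a cheap way to review them before running them as root on the node.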

I decided to reboot the server, which fixed it. Not ideal, I know, but I don't have time to troubleshoot that particular issue.

I did use version 4 before, but because of this bug "http://forum.proxmox.com/threads/23805-ZFS-LXC-issue-can-t-select-the-ZFS-storage-for-a-container" I went with version 3.4 for now to test on that.

Hi,

I have the same issue as the OP. Here is my experience; I hope this can be resolved soon, because it's highly frustrating.

I was running Proxmox 4.4 and wanted to update to version 5.3 (single node, no cluster). After doing an 'apt dist-upgrade' (and fixing some issues on the way), I ended up with Proxmox 5.3.

My VMs and containers were running fine until I restarted a container. After that, all the symbols were grayed out, but nothing was down except the container that I tried to restart. That container was not available anymore. Only a reboot of the node solved that issue.

So I decided to do a fresh install of Proxmox 5.3. I reinstalled Proxmox on my 2x SSDs in a mirror (ZFS). I had my VMs and containers on a separate pool. I imported that pool and all config files, and I was back in business.

After about 3 days, the same issue happened again: after trying to restart a container from within the container, the container is not available anymore, all symbols in the GUI are grayed out, and the GUI is not usable.

This was causing too much frustration, so I did another fresh install, but installed Proxmox 4.4. 4.4 was the last stable version I used that did not cause any issues whatsoever. To my surprise, I am having the same issue with Proxmox 4.4.

When I try to start a failed container, I get

Code:

lxc-start: tools/lxc_start.c: main: 365 The container failed to start.

lxc-start: tools/lxc_start.c: main: 367 To get more details, run the container in foreground mode.

lxc-start: tools/lxc_start.c: main: 369 Additional information can be obtained by setting the --logfile and --logpriority options.

TASK ERROR: command 'lxc-start -n 905' failed: exit code 1

The only thing that fixes this issue is to restart the node.

pveversion -v

Code:

root@VMNode01:~# pveversion -v

proxmox-ve: 5.3-1 (running kernel: 4.15.18-9-pve)

pve-manager: 4.4-1 (running version: 4.4-1/eb2d6f1e)

pve-kernel-4.15.18-9-pve: 4.15.18-30

pve-kernel-4.4.35-1-pve: 4.4.35-76

pve-kernel-4.15: 5.2-12

lvm2: 2.02.116-pve3

corosync-pve: 2.4.0-1

libqb0: 1.0.3-1~bpo9

pve-cluster: 4.0-48

qemu-server: 4.0-101

pve-firmware: 2.0-6

libpve-common-perl: 4.0-83

libpve-access-control: 4.0-19

libpve-storage-perl: 4.0-70

pve-libspice-server1: 0.12.8-1

vncterm: 1.2-1

pve-docs: 5.3-1

pve-qemu-kvm: 2.7.0-9

pve-container: 1.0-88

pve-firewall: 3.0-16

pve-ha-manager: 2.0-5

ksm-control-daemon: 1.2-2

glusterfs-client: 3.5.2-2+deb8u5

lxc-pve: 2.0.6-2

lxcfs: 3.0.2-2

criu: 1.6.0-1

novnc-pve: 1.0.0-2

smartmontools: 6.5+svn4324-1~pve80

zfsutils: 0.6.5.8-pve13~bpo80

So I am unsure, why 4.4 is behaving this way, since I was on 4.4 before (and I had all updates installed, etc.). I hope someone smarter than I am has an idea on how to solve this issue.

Thank you all.

Cheers,

Eddi
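The `pveversion -v` listing above reports `proxmox-ve` at 5.3-1 but `pve-manager` at 4.4-1, which may point to packages from different releases being installed side by side. A rough way to pull out just those two major versions for comparison is sketched below; the function name is hypothetical, and the sketch assumes the `name: version` line layout shown in the post.

```shell
#!/bin/sh
# Hypothetical helper: read `pveversion -v` output on stdin and print the
# major version of the proxmox-ve and pve-manager packages only.
pve_major_versions() {
  awk -F': ' '$1 == "proxmox-ve" || $1 == "pve-manager" {
    # $2 looks like "5.3-1 (running kernel"; keep the part before the first dot.
    split($2, parts, "[.]")
    print $1, parts[1]
  }'
}
```

On a node this would be used as `pveversion -v | pve_major_versions`. If the two numbers differ, that is a hint worth mentioning when asking for help, not a diagnosis.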

@eddi1984

Do not hijack other threads or reopen old ones.

The thread opener here is using PVE 3; you are using PVE 4. You talk about containers, so you would be using OpenVZ, which is normally not supported anymore. So you have to "convert" it to LXC.

And please read the console output:

lxc-start: tools/lxc_start.c: main: 365 The container failed to start.

lxc-start: tools/lxc_start.c: main: 367 To get more details, run the container in foreground mode.

lxc-start: tools/lxc_start.c: main: 369 Additional information can be obtained by setting the --logfile and --logpriority options.

TASK ERROR: command 'lxc-start -n 905' failed: exit code 1

If you do this, you will know yourself what is going on and can share it with us.

proxmox-ve: 5.3-1

You are not running the latest version of PVE. Try to upgrade and re-check it.
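The console output quoted in this reply asks for a foreground start with logging enabled. A minimal sketch of that is below, using the container ID 905 from the error message. The `run` wrapper, the `DRY_RUN` switch, the function name and the log path under `/tmp` are assumptions for illustration; `-F` is the foreground switch of `lxc-start`, and `--logfile` and `--logpriority` are the options named in the error text.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Start a container in the foreground and write a debug log.
debug_container() {
  ctid="$1"
  logfile="/tmp/lxc-${ctid}.log"   # assumed log location, pick any writable path
  run lxc-start -n "$ctid" -F --logfile "$logfile" --logpriority DEBUG
}
```

Calling `debug_container 905` on the node should leave a log file whose last lines usually say more about why the start failed than the task error does; that log is the kind of detail worth sharing in a new thread.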

Hi,

thanks for your reply. After reading it, I noticed that I posted in the wrong thread and that this one is from 2015.

I meant to post in this thread: https://forum.proxmox.com/threads/node-with-question-mark.41180/

Is it possible to move my message over, or should I just copy and paste?

Thx

Maybe just post a new thread and add a link to the other existing one, so the (thread) connection can be easily found but the other, already cluttered thread, does not get even more cluttered.

Often, while it seems like the same issue it may just be the same symptom with a different cause, so having a separate thread is often not a bad idea.