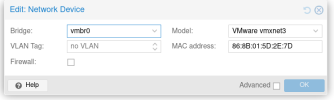

No firewall is applied; this is a test system.

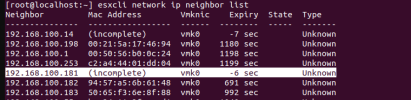

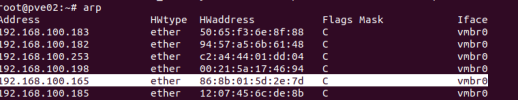

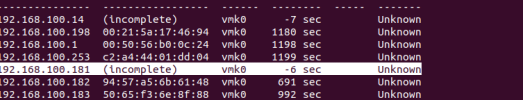

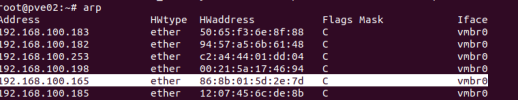

The problem is at the ARP level (OSI layer 2).

The PMX host bridge blocks ARP broadcast traffic from the local VMs and from the host itself, but only toward the locally hosted ESXi VM.

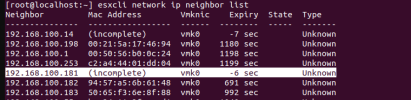

ESXi host

* 192.168.100.14: IP address of another VM on host A => incomplete

* 192.168.100.1: VM hosted by ESXi

* 192.168.100.182 and 183: another PMX host
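The same kind of check can be scripted on the Linux side (for example on the PMX host). The sketch below assumes the neighbor table is read with iproute2's `ip neigh show`, whose lines end with the entry state. The function name `unresolved_neighbors` is hypothetical, and the sample lines are illustrative, not captured from this system.

```shell
# unresolved_neighbors: read `ip neigh show` output on stdin and print the
# IP address of every entry whose state (last field) is INCOMPLETE or FAILED.
unresolved_neighbors() {
    awk '$NF == "INCOMPLETE" || $NF == "FAILED" { print $1 }'
}

# Illustrative input only: the MAC address below is made up.
printf '%s\n' \
    '192.168.100.14 dev vmbr0  INCOMPLETE' \
    '192.168.100.182 dev vmbr0 lladdr 02:00:00:00:00:01 REACHABLE' \
    | unresolved_neighbors
# prints: 192.168.100.14
```

On a live host the function would be fed directly, for example `ip neigh show dev vmbr0 | unresolved_neighbors`.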

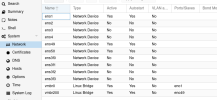

PMX host A

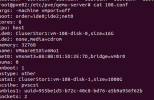

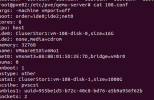

Dumping the ESXi configuration with cat

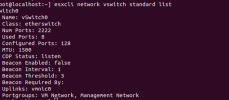

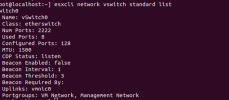

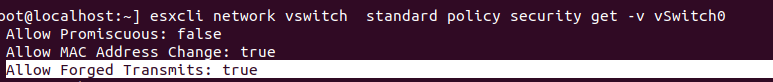

ESXi switch parameters

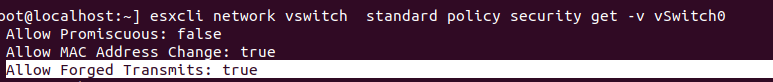

ESXi Firewall settings

[root@localhost:~] esxcli network firewall ruleset list

| Name | Enabled |
| --- | --- |
| sshServer | true |
| sshClient | false |
| nfsClient | false |
| nfs41Client | false |
| dhcp | true |
| dns | true |
| snmp | true |
| ntpClient | false |
| CIMHttpServer | true |
| CIMHttpsServer | true |
| CIMSLP | true |
| iSCSI | false |
| vpxHeartbeats | true |
| updateManager | true |
| faultTolerance | true |
| webAccess | true |
| vMotion | true |
| vSphereClient | true |
| activeDirectoryAll | false |
| NFC | true |
| HBR | true |
| ftpClient | false |
| httpClient | false |
| gdbserver | false |
| DVFilter | false |
| DHCPv6 | false |
| DVSSync | true |
| syslog | false |
| IKED | false |
| WOL | true |
| vSPC | false |
| remoteSerialPort | false |
| vprobeServer | false |
| rdt | true |
| cmmds | true |
| vsanvp | true |
| rabbitmqproxy | true |
| ipfam | false |
| vvold | false |
| iofiltervp | false |
| esxupdate | false |
| vit | false |
| vsanhealth-multicasttest | false |

The rest of the tests are unnecessary: without ARP resolution, no traffic of any kind will work.

OSI Layer 3

ICMP (host 192.168.100.181 => ESXi 192.168.100.165)

Only echo requests are captured; no echo replies come back.

root@pve02:~# tcpdump -envi vmbr0 icmp

tcpdump: listening on vmbr0, link-type EN10MB (Ethernet), snapshot length 262144 bytes

11:37:27.554354 94:57:a5:6b:20:3c > 86:8b:01:5d:2e:7d, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 54705, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 1, length 64

11:37:28.558443 94:57:a5:6b:20:3c > 86:8b:01:5d:2e:7d, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 55158, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 2, length 64

11:37:30.707025 94:57:a5:6b:20:3c > 86:8b:01:5d:2e:7d, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 56095, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 3, length 64

11:37:30.707034 94:57:a5:6b:20:3c > 86:8b:01:5d:2e:7d, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 56475, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 4, length 64

11:37:31.630469 94:57:a5:6b:20:3c > 86:8b:01:5d:2e:7d, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 57399, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 5, length 64

11:37:32.654483 94:57:a5:6b:20:3c > 86:8b:01:5d:2e:7d, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 57446, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 6, length 64

11:37:33.678479 94:57:a5:6b:20:3c > 86:8b:01:5d:2e:7d, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 58174, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 7, length 64

11:37:34.702436 94:57:a5:6b:20:3c > 86:8b:01:5d:2e:7d, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 58441, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 8, length 64

11:37:35.726472 94:57:a5:6b:20:3c > 86:8b:01:5d:2e:7d, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 58863, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 9, length 64

11:37:36.750468 94:57:a5:6b:20:3c > 86:8b:01:5d:2e:7d, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 58969, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 10, length 64
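The one-way pattern in this capture can also be confirmed mechanically. The following is a minimal sketch, assuming the tcpdump text output is piped in on stdin; the function name `count_icmp` is hypothetical and simply counts the request and reply lines.

```shell
# count_icmp: read tcpdump text output on stdin and print how many
# ICMP echo requests and echo replies it contains.
count_icmp() {
    awk '
        /ICMP echo request/ { req++ }
        /ICMP echo reply/   { rep++ }
        END { printf "requests=%d replies=%d\n", req, rep }
    '
}

# Example using two lines copied from the capture above.
printf '%s\n' \
    '192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 1, length 64' \
    '192.168.100.181 > 192.168.100.165: ICMP echo request, id 61436, seq 2, length 64' \
    | count_icmp
# prints: requests=2 replies=0
```

Against a live interface it could be used as `tcpdump -c 10 -eni vmbr0 icmp | count_icmp`; a reply count of zero matches what is seen here.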

Ping from ESXi (192.168.100.165) to PMX Host A (192.168.100.181)

(SSH session)

PING 192.168.100.181 (192.168.100.181): 56 data bytes

sendto() failed (Host is down)

Ping from ESXi (192.168.100.165) to an ESXi-hosted VM

[root@localhost:~] ping 192.168.100.182

PING 192.168.100.182 (192.168.100.182): 56 data bytes

64 bytes from 192.168.100.182: icmp_seq=0 ttl=64 time=0.350 ms