Hello,

I recently migrated from VMware ESXi to Proxmox VE and I’m still new to the platform.

Server (Hetzner Dedicated)

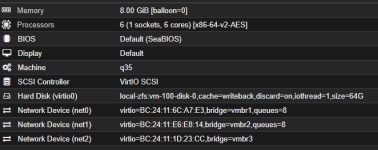

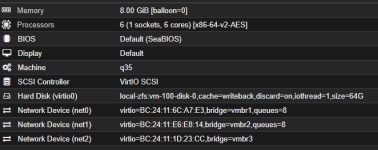

First VM is OPNsense, which handles all routing.

1) CPU Model – Host vs x86-64-v4-AES

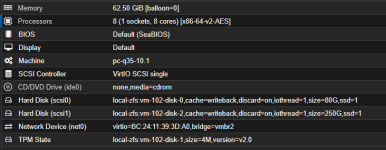

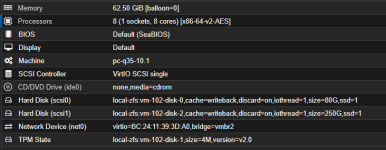

I created a Windows Server 2025 VM for SQL Server.

Most sources recommend using host CPU, but in my case it performed much worse.

Questions:

2) 10 Gbit VirtIO Network

Questions:

I recently migrated from VMware ESXi to Proxmox VE and I’m still new to the platform.

Server (Hetzner Dedicated)

- AMD EPYC 9454P (48C / 96T)

- 512 GB DDR5

- 4 × 1.92 TB NVMe (ZFS RAID10)

- 10 Gbit uplin

First VM is OPNsense, which handles all routing.

1) CPU Model – Host vs x86-64-v4-AES

I created a Windows Server 2025 VM for SQL Server.

- With CPU model = host:

  - Windows felt very sluggish

  - SQL performance was extremely poor

- After switching to x86-64-v4-AES:

  - Windows became smooth

  - SQL performance improved significantly

Most sources recommend using host CPU, but in my case it performed much worse.
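
To be clear about which setting I mean: as far as I understand, the CPU model ends up as the `cpu:` line in the VM config file (`/etc/pve/qemu-server/<vmid>.conf`). Below is a minimal Python sketch for reading that value out of a config. The sample config text and the helper names `parse_conf` and `cpu_model` are made up for illustration and are not copied from my server.

```python
# Hypothetical excerpt of a Proxmox VM config file.
# All values here are illustrative assumptions, not my real settings.
SAMPLE_CONF = """\
cores: 16
cpu: host
memory: 65536
net0: virtio=AA:BB:CC:DD:EE:FF,bridge=vmbr0
"""


def parse_conf(text):
    """Parse 'key: value' lines into a dict, skipping blanks and comments."""
    conf = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split at the first colon only, so MAC addresses stay intact.
        key, _, value = line.partition(":")
        conf[key.strip()] = value.strip()
    return conf


def cpu_model(conf):
    """Return the configured CPU model name, or None if no cpu line is set."""
    # The value may carry extra comma-separated options, e.g. "host,flags=+aes".
    first = conf.get("cpu", "").split(",")[0]
    # The model can also be written in the explicit "cputype=<model>" form.
    if first.startswith("cputype="):
        first = first[len("cputype="):]
    return first or None


print(cpu_model(parse_conf(SAMPLE_CONF)))
```

Running the same check before and after changing the model in the GUI would at least confirm that the change was actually written to the config.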

Questions:

- Which CPU model is recommended for AMD EPYC 9454P?

- Is there a known issue with host CPU on Proxmox + AMD?

- Any BIOS or Proxmox settings I should check?

2) 10 Gbit VirtIO Network

- Windows VM shows 10 Gbit VirtIO NIC

- Real-world tests:

  - Download ~2 Gbit

  - Upload ~1 Gbit
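
To put those numbers in perspective, here is a small Python sketch that expresses the measured throughput as a share of the nominal link speed. It assumes the rough figures above (~2 Gbit down, ~1 Gbit up) and the 10 Gbit speed the NIC reports; the function name `utilization` is just something I picked for this example.

```python
LINK_GBIT = 10.0  # nominal speed reported by the VirtIO NIC

# Approximate results from my tests, in Gbit/s.
measured = {"download": 2.0, "upload": 1.0}


def utilization(measured_gbit, link_gbit=LINK_GBIT):
    """Return measured throughput as a percentage of the nominal link speed."""
    return measured_gbit * 100 / link_gbit


for direction, gbit in measured.items():
    print(f"{direction}: {gbit:g} of {LINK_GBIT:g} Gbit/s = {utilization(gbit):.0f}%")
```

So the VM reaches roughly a fifth of the link on download and a tenth on upload.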

Questions:

- Are these speeds normal?

- Any recommended tuning (MTU, multi-queue, CPU pinning, offloading)?