I have a 3-node cluster, and yesterday I upgraded all nodes from PVE 7.4 to PVE 8.0.4. The upgrade went well and all guests are running without issues.

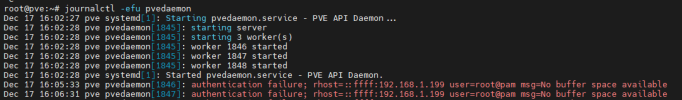

I use WHMCS with the Proxmox VPS for WHMCS module to sell virtualization products on my website.

Today I tried to destroy an LXC-based service using the "Terminate" module action, but it failed with the following error message:

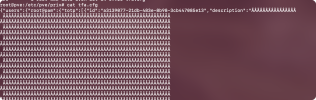

```
Delete user failed: No buffer space available
```

So I logged in to the web GUI and tried to manually delete the user that the module had automatically created along with the service, but a similar error message appeared:

```
delete user failed: No buffer space available (500)
```

In my last attempt, I ran `pveum user delete "proxmoxVPS_<user>@pve"` on the console, and the same error appeared:

Code:

❯ pveum user delete "proxmoxVPS_<user>@pve"

delete user failed: No buffer space availableI couldn't find much about this issue. I read in a related issue post that restarting

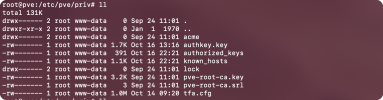

pvestatd before trying again would help, but unfortunately it was not my case.All three nodes are fully updated:

```
❯ pveversion
pve-manager/8.0.4/d258a813cfa6b390 (running kernel: 6.2.16-15-pve)
```
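For reference, the workaround from that related post (restart `pvestatd`, then retry the deletion) can be scripted roughly as below. This is only a sketch: the function name `retry_user_delete` is my own, it assumes it is run as root on one of the PVE nodes, and in my case the retry still failed with the same error.

```shell
#!/usr/bin/env bash
# Sketch of the suggested workaround: restart pvestatd, then retry the
# user deletion once. Assumes root on a PVE node.
# retry_user_delete is a hypothetical helper name, not a PVE tool.

retry_user_delete() {
  local userid="$1"

  # Restart the PVE status daemon, as suggested in the related post.
  systemctl restart pvestatd || return 1

  # Retry the deletion; pveum prints its own error and exits non-zero on failure.
  if pveum user delete "$userid"; then
    echo "deleted $userid"
  else
    echo "delete of $userid still failing" >&2
    return 1
  fi
}
```

Usage, with the same placeholder user ID as above: `retry_user_delete 'proxmoxVPS_<user>@pve'`.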
