Good day!

I wanted to live-migrate a running VM between 2 Proxmox nodes that are joined in one cluster (10.105.2.29 -> 10.105.2.21).

But the migration task failed ("VM 101 is not running") AND all of the VM's disks were erased.

Result: a locked, stopped VM without any disks on the old node AND no VM at all on the new node.

Resentment aside, I have 2 questions:

1) Why wasn't the VM migrated?

2) What is wrong with the Proxmox logic that it erases all disks BEFORE it gets a success confirmation from the main migration task?

This is a serious problem, you know. For now I am scared to migrate any VM or LXC container, because without a backup I can end up with an erased VM.

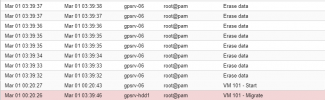

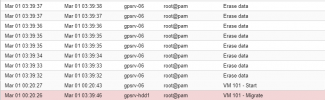

Some common info:

Bash:

10.105.2.29

ls -la /mnt/storage1/images/101

total 8

drwxr----- 2 root root 4096 Feb 28 18:30 .

drwxr-xr-x 4 root root 4096 Mar 1 10:30 ..

10.105.2.21

ls -la /mnt/storage1/images

total 48

drwxr-xr-x 12 root root 4096 Mar 1 03:39 .

drwxr-xr-x 8 root root 4096 Feb 28 20:44 ..

drwxr----- 2 root root 4096 Feb 28 20:44 130

drwxr-xr-x 2 root root 4096 Feb 28 20:54 131

drwxr----- 2 root root 4096 Feb 28 20:45 132

drwxr----- 2 root root 4096 Feb 28 20:45 133

drwxr----- 2 root root 4096 Feb 28 20:45 134

drwxr----- 2 root root 4096 Feb 28 21:11 390

drwxr----- 2 root root 4096 Feb 28 22:38 397

drwxr----- 2 root root 4096 Feb 28 22:38 499

drwxr----- 2 root root 4096 Feb 28 21:40 530

drwxr----- 2 root root 4096 Feb 28 21:11 811

The full migration task log is in the attachments (migration.zip). A short excerpt:

2021-03-01 00:20:26 starting migration of VM 101 to node 'gpsrv-06' (10.105.2.21)

2021-03-01 00:20:26 found local disk 'storage1:101/vm-101-disk-0.qcow2' (in current VM config)

2021-03-01 00:20:26 found local disk 'storage1:101/vm-101-disk-1.qcow2' (in current VM config)

2021-03-01 00:20:26 found local disk 'storage1:101/vm-101-disk-2.qcow2' (in current VM config)

2021-03-01 00:20:26 found local disk 'storage1:101/vm-101-disk-3.qcow2' (in current VM config)

2021-03-01 00:20:26 found local disk 'storage1:101/vm-101-disk-4.qcow2' (in current VM config)

2021-03-01 00:20:26 found local disk 'storage1:101/vm-101-disk-5.qcow2' (in current VM config)

2021-03-01 00:20:26 found local disk 'storage1:101/vm-101-disk-6.qcow2' (in current VM config)

2021-03-01 00:20:26 found local disk 'storage1:101/vm-101-disk-7.qcow2' (in current VM config)

2021-03-01 00:20:26 copying local disk images

2021-03-01 00:20:26 starting VM 101 on remote node 'gpsrv-06'

2021-03-01 00:20:43 start remote tunnel

2021-03-01 00:20:44 ssh tunnel ver 1

2021-03-01 00:20:44 starting storage migration

2021-03-01 00:20:44 scsi1: start migration to nbd:unix:/run/qemu-server/101_nbd.migrate:exportname=drive-scsi1

drive mirror is starting for drive-scsi1

drive-scsi1: transferred: 0 bytes remaining: 214748364800 bytes total: 214748364800 bytes progression: 0.00 % busy: 1 ready: 0

drive-scsi1: transferred: 132120576 bytes remaining: 214616244224 bytes total: 214748364800 bytes progression: 0.06 % busy: 1 ready: 0

...

2021-03-01 03:38:22 migration xbzrle cachesize: 2147483648 transferred 64336101 pages 144111 cachemiss 958002 overflow 1722

2021-03-01 03:38:23 migration speed: 1.38 MB/s - downtime 93 ms

2021-03-01 03:38:23 migration status: completed

drive-scsi5: transferred: 214778576896 bytes remaining: 0 bytes total: 214778576896 bytes progression: 100.00 % busy: 0 ready: 1

drive-scsi6: transferred: 2199023255552 bytes remaining: 0 bytes total: 2199023255552 bytes progression: 100.00 % busy: 0 ready: 1

drive-scsi3: transferred: 107374182400 bytes remaining: 0 bytes total: 107374182400 bytes progression: 100.00 % busy: 0 ready: 1

drive-scsi7: transferred: 107522359296 bytes remaining: 0 bytes total: 107522359296 bytes progression: 100.00 % busy: 0 ready: 1

drive-scsi0: transferred: 36333748224 bytes remaining: 0 bytes total: 36333748224 bytes progression: 100.00 % busy: 0 ready: 1

drive-scsi4: transferred: 536870912000 bytes remaining: 0 bytes total: 536870912000 bytes progression: 100.00 % busy: 0 ready: 1

drive-scsi2: transferred: 214748364800 bytes remaining: 0 bytes total: 214748364800 bytes progression: 100.00 % busy: 0 ready: 1

drive-scsi1: transferred: 214818029568 bytes remaining: 0 bytes total: 214818029568 bytes progression: 100.00 % busy: 0 ready: 1

all mirroring jobs are ready

drive-scsi5: Completing block job...

drive-scsi5: Completed successfully.

drive-scsi6: Completing block job...

drive-scsi6: Completed successfully.

drive-scsi3: Completing block job...

drive-scsi3: Completed successfully.

drive-scsi7: Completing block job...

drive-scsi7: Completed successfully.

drive-scsi0: Completing block job...

drive-scsi0: Completed successfully.

drive-scsi4: Completing block job...

drive-scsi4: Completed successfully.

drive-scsi2: Completing block job...

drive-scsi2: Completed successfully.

drive-scsi1: Completing block job...

drive-scsi1: Completed successfully.

drive-scsi5: Cancelling block job

drive-scsi6: Cancelling block job

drive-scsi3: Cancelling block job

drive-scsi7: Cancelling block job

drive-scsi0: Cancelling block job

drive-scsi4: Cancelling block job

drive-scsi2: Cancelling block job

drive-scsi1: Cancelling block job

drive-scsi5: Cancelling block job

drive-scsi6: Cancelling block job

drive-scsi3: Cancelling block job

drive-scsi7: Cancelling block job

drive-scsi0: Cancelling block job

drive-scsi4: Cancelling block job

drive-scsi2: Cancelling block job

drive-scsi1: Cancelling block job

2021-03-01 03:39:38 ERROR: Failed to complete storage migration: mirroring error: VM 101 not running

2021-03-01 03:39:38 ERROR: migration finished with problems (duration 03:19:12)

TASK ERROR: migration problems
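To make the odd sequence in this excerpt easier to spot, here is a minimal Python sketch that scans log text for ERROR lines and for drives whose block job was reported as completed and then cancelled anyway. The `LOG` sample contains only a few lines copied from the excerpt above, and the `summarize` helper is a hypothetical name used for illustration. It is not part of any Proxmox tooling.

```python
import re

# A few lines copied from the log excerpt above (not the full log).
LOG = """\
drive-scsi5: Completing block job...
drive-scsi5: Completed successfully.
drive-scsi5: Cancelling block job
2021-03-01 03:39:38 ERROR: Failed to complete storage migration: mirroring error: VM 101 not running
2021-03-01 03:39:38 ERROR: migration finished with problems (duration 03:19:12)
TASK ERROR: migration problems
"""

# Matches the two per-drive status lines we care about.
DRIVE_RE = re.compile(r"(drive-\w+): (Completed successfully|Cancelling block job)")


def summarize(log_text):
    """Collect ERROR lines and the drives whose block job completed or was cancelled."""
    errors, completed, cancelled = [], set(), set()
    for line in log_text.splitlines():
        if "ERROR" in line:
            errors.append(line)
        match = DRIVE_RE.match(line)
        if match:
            drive, status = match.groups()
            if status.startswith("Completed"):
                completed.add(drive)
            else:
                cancelled.add(drive)
    return errors, completed, cancelled


errors, completed, cancelled = summarize(LOG)
print("error lines:", len(errors))
print("completed, then cancelled:", sorted(completed & cancelled))
```

Run against the full attached log, the same check should list every drive that was mirrored successfully and still cancelled, which is the behaviour question 2 is about.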

Some common info:

Bash:

NODE 1 (source: the VM is migrated from this node)

pveversion -v

proxmox-ve: 6.3-1 (running kernel: 5.4.78-2-pve)

pve-manager: 6.3-3 (running version: 6.3-3/eee5f901)

pve-kernel-5.4: 6.3-3

pve-kernel-helper: 6.3-3

pve-kernel-5.4.78-2-pve: 5.4.78-2

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.1.0-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

libjs-extjs: 6.0.1-10

libknet1: 1.20-pve1

libproxmox-acme-perl: 1.0.7

libproxmox-backup-qemu0: 1.0.2-1

libpve-access-control: 6.1-3

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.3-3

libpve-guest-common-perl: 3.1-4

libpve-http-server-perl: 3.1-1

libpve-storage-perl: 6.3-6

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.6-2

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

proxmox-backup-client: 1.0.8-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.4-5

pve-cluster: 6.2-1

pve-container: 3.3-3

pve-docs: 6.3-1

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.2-1

pve-ha-manager: 3.1-1

pve-i18n: 2.2-2

pve-qemu-kvm: 5.1.0-8

pve-xtermjs: 4.7.0-3

qemu-server: 6.3-5

smartmontools: 7.1-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 0.8.5-pve1

Bash:

NODE 2 (target: the VM is migrated to this node)

proxmox-ve: 6.3-1 (running kernel: 5.4.98-1-pve)

pve-manager: 6.3-4 (running version: 6.3-4/0a38c56f)

pve-kernel-5.4: 6.3-5

pve-kernel-helper: 6.3-5

pve-kernel-5.4.98-1-pve: 5.4.98-1

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.1.0-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

libjs-extjs: 6.0.1-10

libknet1: 1.20-pve1

libproxmox-acme-perl: 1.0.7

libproxmox-backup-qemu0: 1.0.3-1

libpve-access-control: 6.1-3

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.3-4

libpve-guest-common-perl: 3.1-5

libpve-http-server-perl: 3.1-1

libpve-storage-perl: 6.3-7

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.6-2

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

proxmox-backup-client: 1.0.8-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.4-5

pve-cluster: 6.2-1

pve-container: 3.3-4

pve-docs: 6.3-1

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.2-2

pve-ha-manager: 3.1-1

pve-i18n: 2.2-2

pve-qemu-kvm: 5.2.0-2

pve-xtermjs: 4.7.0-3

qemu-server: 6.3-5

smartmontools: 7.1-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 2.0.3-pve1
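The two nodes are not on identical package versions. A small Python sketch like the one below can list the differences between two `pveversion -v` outputs. Only a handful of lines from the listings above are pasted in as sample data, and `parse_versions` and `diff_versions` are hypothetical helper names. Whether any of these differences is related to the failed migration is an open question, not something this comparison shows.

```python
# Sample lines copied from the two pveversion -v listings above (not the full output).
NODE1 = """\
pve-manager: 6.3-3 (running version: 6.3-3/eee5f901)
libpve-storage-perl: 6.3-6
pve-qemu-kvm: 5.1.0-8
qemu-server: 6.3-5
zfsutils-linux: 0.8.5-pve1
"""

NODE2 = """\
pve-manager: 6.3-4 (running version: 6.3-4/0a38c56f)
libpve-storage-perl: 6.3-7
pve-qemu-kvm: 5.2.0-2
qemu-server: 6.3-5
zfsutils-linux: 2.0.3-pve1
"""


def parse_versions(text):
    """Map package name -> version string (first token after 'name: ')."""
    versions = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(": ")
        if sep and rest:
            versions[name] = rest.split()[0]
    return versions


def diff_versions(a, b):
    """Return {package: (version_a, version_b)} for packages whose versions differ."""
    return {
        pkg: (a.get(pkg), b.get(pkg))
        for pkg in sorted(set(a) | set(b))
        if a.get(pkg) != b.get(pkg)
    }


differences = diff_versions(parse_versions(NODE1), parse_versions(NODE2))
for pkg, (v1, v2) in differences.items():
    print(f"{pkg}: {v1} -> {v2}")
```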

Bash:

pvecm status

Cluster information

-------------------

Name: GpsrvCluster

Config Version: 9

Transport: knet

Secure auth: on

Quorum information

------------------

Date: Mon Mar 1 11:41:51 2021

Quorum provider: corosync_votequorum

Nodes: 7

Node ID: 0x00000003

Ring ID: 1.83be

Quorate: Yes

Votequorum information

----------------------

Expected votes: 7

Highest expected: 7

Total votes: 7

Quorum: 4

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

0x00000001 1 10.105.2.27

0x00000002 1 192.168.240.82

0x00000003 1 10.105.2.29 (local)

0x00000004 1 10.105.2.23

0x00000005 1 10.105.2.11

0x00000006 1 10.105.2.13

0x00000007 1 10.105.2.21
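As a quick sanity check of the quorum numbers above: with the default majority rule of votequorum, quorum is more than half of the expected votes. The Python sketch below reproduces the "Quorum: 4" value from "Expected votes: 7". The function name is only illustrative.

```python
def majority_quorum(expected_votes: int) -> int:
    """Smallest number of votes that is strictly more than half of expected_votes."""
    return expected_votes // 2 + 1


expected = 7  # "Expected votes" from the pvecm status output above
total = 7     # "Total votes" from the same output
needed = majority_quorum(expected)
print("quorum:", needed)
print("quorate:", total >= needed)
```

So the cluster was quorate when this status was taken, with all 7 nodes voting.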

Bash:

qm config 101

bootdisk: scsi0

cores: 12

description: %D0%9D%D0%B0%D0%B7%D0%BD%D0%B0%D1%87%D0%B5%D0%BD%D0%B8%D0%B5 %D0%92%D0%9C%3A %D0%9E%D1%81%D0%BD%D0%BE%D0%B2%D0%BD%D0%BE%D0%B9 GitLab%0A%D0%9F%D1%80%D0%BE%D0%B5%D0%BA%D1%82%3A Any%0A%D0%9E%D1%82%D0%B2%D0%B5%D1%82%D1%81%D1%82%D0%B2%D0%B5%D0%BD%D0%BD%D1%8B%D0%B9%3A %D0%9F%D0%B0%D0%B2%D0%BB%D0%BE%D0%B2 %D0%90%D0%BD%D0%B4%D1%80%D0%B5%D0%B9, %D0%A1%D0%BE%D0%BB%D0%B4%D0%B0%D1%82%D0%BE%D0%B2 %D0%94%D0%BC%D0%B8%D1%82%D1%80%D0%B8%D0%B9%0A%0AIP%3A 10.105.2.150%0A%D0%94%D0%BE%D1%81%D1%82%D1%83%D0%BF ssh%3A%0A1) %D1%81 10.105.2.98 %D0%BF%D0%BE ssh-%D0%BA%D0%BB%D1%8E%D1%87%D1%83

ide2: none,media=cdrom

memory: 16384

name: GitLab

net0: virtio=E6:6E:D4:05:44:53,bridge=vmbr010

net1: virtio=9E:D8:70:F2:B9:89,bridge=vmbr010

numa: 0

onboot: 1

ostype: l26

scsi0: storage1:101/vm-101-disk-0.qcow2,size=32G

scsi1: storage1:101/vm-101-disk-1.qcow2,size=200G

scsi2: storage1:101/vm-101-disk-2.qcow2,size=200G

scsi3: storage1:101/vm-101-disk-3.qcow2,size=100G

scsi4: storage1:101/vm-101-disk-4.qcow2,size=500G

scsi5: storage1:101/vm-101-disk-5.qcow2,size=200G

scsi6: storage1:101/vm-101-disk-6.qcow2,size=2T

scsi7: storage1:101/vm-101-disk-7.qcow2,size=100G

scsihw: virtio-scsi-pci

smbios1: uuid=020bf5e1-1b93-49cc-9a06-44e154cdecac

sockets: 1
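For scale, the configured disk sizes can be added up and set against the task duration from the log (03:19:12). The Python sketch below does that with the `scsiN` lines from the config above. It assumes that `G` and `T` mean GiB and TiB, which matches the byte counts in the log (200G is reported as 214748364800 bytes). The resulting rate is only a rough average over virtual disk sizes, not a measurement of what actually went over the wire.

```python
import re

# Disk lines copied from the qm config output above.
DISKS = """\
scsi0: storage1:101/vm-101-disk-0.qcow2,size=32G
scsi1: storage1:101/vm-101-disk-1.qcow2,size=200G
scsi2: storage1:101/vm-101-disk-2.qcow2,size=200G
scsi3: storage1:101/vm-101-disk-3.qcow2,size=100G
scsi4: storage1:101/vm-101-disk-4.qcow2,size=500G
scsi5: storage1:101/vm-101-disk-5.qcow2,size=200G
scsi6: storage1:101/vm-101-disk-6.qcow2,size=2T
scsi7: storage1:101/vm-101-disk-7.qcow2,size=100G
"""

GIB_PER_UNIT = {"G": 1, "T": 1024}  # assumption: binary units


def total_gib(config_text):
    """Sum all size=<n><unit> values in GiB."""
    return sum(
        int(size) * GIB_PER_UNIT[unit]
        for size, unit in re.findall(r"size=(\d+)([GT])", config_text)
    )


def duration_seconds(hms):
    """Convert 'HH:MM:SS' to seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


total = total_gib(DISKS)
elapsed = duration_seconds("03:19:12")  # duration reported in the task log
rate_mib_s = total * 1024 / elapsed
print(f"total configured size: {total} GiB")
print(f"rough average rate: {rate_mib_s:.0f} MiB/s over {elapsed} s")
```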