After scouring all the previous forum posts, I still can't find a clear answer on how Proxmox actually deals with NUMA nodes.

My setup

EPYC 7663, 56c/112t (yes, it might be a bit suboptimal; I will try to switch to a 7B13 with the full 64c/128t later)

8x 64 GB DDR4, 512 GB total

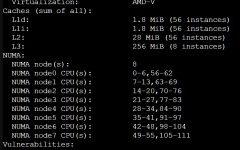

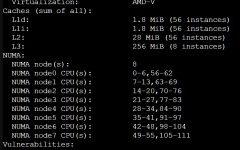

The BIOS is set to expose each CCX as a NUMA domain, so in total I have 8 NUMA nodes. Each node has 64 GB of RAM, 32 MB of L3 cache, and 7 real cores + 7 hyperthreads, for a total of 14 CPUs per node.
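For anyone who wants to compare against their own host, here is a minimal Python sketch that lists which host CPU ids belong to each NUMA node by reading the standard Linux sysfs files (`/sys/devices/system/node/nodeN/cpulist`). The helper names `parse_cpulist` and `numa_layout` are made up for illustration, and the `0-6,56-62` string in the comment is only an assumed example of how cores and their hyperthread siblings might be numbered, not output from this machine.

```python
from pathlib import Path


def parse_cpulist(text):
    """Expand a kernel cpulist string (e.g. '0-6,56-62', an assumed example)
    into a sorted list of CPU ids."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def numa_layout(sysfs_root="/sys/devices/system/node"):
    """Map NUMA node id -> list of host CPU ids, read from sysfs (Linux only)."""
    layout = {}
    for node_dir in Path(sysfs_root).glob("node[0-9]*"):
        node_id = int(node_dir.name[len("node"):])
        layout[node_id] = parse_cpulist((node_dir / "cpulist").read_text())
    return layout


if __name__ == "__main__":
    for node_id, cpus in sorted(numa_layout().items()):
        print(f"node{node_id}: {len(cpus)} CPUs -> {cpus}")
```

With the BIOS setting described above, I would expect this to print 8 nodes with 14 CPUs each.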

lscpu:

Now I have 3 questions:

1. For VMs that have fewer vCPUs than there are real cores in a NUMA node, for example 6 vCPUs (< 7 real cores): how do I configure this optimally? Should I enable NUMA in the VM settings?

- Optimal, IMO, would be to keep all the VM threads on the same NUMA node so they share the same L3 cache.

2. For VMs that have more vCPUs than there are real cores in a NUMA node, for example 8 vCPUs (7 real cores < 8 vCPUs < 14 total): how do I configure this optimally? Should I enable NUMA in the VM settings?

- Optimal, IMO, would be to keep all the VM threads on the same NUMA node, using both real cores and hyperthreads as needed, so they share the same L3 cache. I'm assuming that running on a hyperthread has less of an impact than crossing the CCX boundary to another memory domain and L3 cache.

3. For VMs that have more vCPUs than the total number of CPUs in a NUMA node, for example 28 vCPUs (> 14 total CPUs): how do I configure this optimally? Should I enable NUMA in the VM settings?

- Optimal, IMO, would be to keep all the VM threads on 2 NUMA nodes, using both real cores and hyperthreads as needed, so they share L3 cache within each node. Again, I'm assuming that running on a hyperthread has less of an impact than crossing the CCX boundary to another memory domain and L3 cache. At worst, there would be only one domain crossing (NUMA 1 <-> NUMA 2), and any task inside the VM that uses fewer than 14 vCPUs should preferably stay on one NUMA node to benefit from the same L3 cache and local memory.
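The three cases above boil down to some simple arithmetic, sketched here in Python. This only encodes the placement I am hoping for (fewest NUMA nodes possible, hyperthreads used only when the real cores run out); it says nothing about what Proxmox actually does. The function name `placement` is hypothetical, and the two constants come from the setup described above.

```python
import math

CORES_PER_NODE = 7     # real cores per NUMA node (from the setup above)
THREADS_PER_NODE = 14  # real cores + hyperthreads per NUMA node


def placement(vcpus):
    """Return (nodes_needed, needs_hyperthreads) for a VM with `vcpus` vCPUs,
    assuming the goal is to span as few NUMA nodes as possible."""
    nodes = math.ceil(vcpus / THREADS_PER_NODE)
    # Hyperthreads are needed once the vCPUs exceed the real cores
    # available on the nodes the VM is allowed to use.
    needs_hyperthreads = vcpus > nodes * CORES_PER_NODE
    return nodes, needs_hyperthreads


if __name__ == "__main__":
    for vcpus in (6, 8, 28):  # the three example VMs from the questions
        nodes, smt = placement(vcpus)
        print(f"{vcpus} vCPUs -> {nodes} NUMA node(s), hyperthreads needed: {smt}")
```

So the 6 vCPU VM fits on the real cores of one node, the 8 vCPU VM fits on one node only if hyperthreads are used, and the 28 vCPU VM needs two full nodes including their hyperthreads.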

Thanks in advance!
