(EDIT: Changed "primarycache" to "secondarycache", based on input from following post by Dunuin)

L2ARC makes sense if you need even more cache but you already populated all your RAM slots with the biggest possible DIMMs.

Great way of putting it, thanks!

Special devices aren't a metadata cache. They are metadata storage. If you lose your special devices, all data in the pool is lost. If you just want a metadata cache SSD, use an L2ARC SSD and set it to "secondarycache=metadata".

I didn't even know about the "secondarycache=metadata" option. Thanks! It seems like a good intermediate solution. Since it's just a cache, we don't need to worry about data loss in case of hardware failure, so you only need a single drive (no mirror).

It's more useful if you've got slow HDDs and want some SSDs for the metadata, so the IOPS-limited HDDs aren't hit by so many small random reads/writes.

This is my exact scenario. All my ZFS volumes are currently running on spinning HDDs. Benchmarking with fio shows good read performance, but horrible performance on random writes. This is why I was leaning towards setting up a SLOG mirror, but as you said before, it will only help with synchronous writes.

If your RAM is adequately sized, most of the metadata should be cached in ARC anyway for zvols, but you can verify that with arcstat/arc_summary. If that is the bottleneck, maxing out the RAM first is probably a good idea.

This is a great way of looking at it. If I've maxed out RAM, but metadata is falling out of the ARC cache, I can use Dunuin's idea of an L2ARC SSD set to secondarycache=metadata.
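To check whether metadata really is falling out of ARC, here is a minimal Python sketch that computes the demand metadata hit ratio. It assumes a Linux host where OpenZFS exposes its counters at `/proc/spl/kstat/zfs/arcstats` as `name type data` rows, and that the `demand_metadata_hits` and `demand_metadata_misses` counters are present. It is only a rough stand-in for what arcstat/arc_summary already report.

```python
from pathlib import Path
from typing import Dict, Optional

# Assumption: Linux OpenZFS exposes ARC counters here as "name type data" rows.
ARCSTATS_PATH = Path("/proc/spl/kstat/zfs/arcstats")


def parse_arcstats(text: str) -> Dict[str, int]:
    """Parse kstat text into {counter_name: value}, skipping header lines."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        # Data rows have exactly three columns and an integer value.
        if len(fields) == 3 and fields[2].isdigit():
            stats[fields[0]] = int(fields[2])
    return stats


def metadata_hit_ratio(stats: Dict[str, int]) -> Optional[float]:
    """Fraction of demand metadata lookups served from ARC (None if no lookups)."""
    hits = stats.get("demand_metadata_hits", 0)
    misses = stats.get("demand_metadata_misses", 0)
    total = hits + misses
    return hits / total if total else None


if __name__ == "__main__":
    if ARCSTATS_PATH.exists():
        ratio = metadata_hit_ratio(parse_arcstats(ARCSTATS_PATH.read_text()))
        if ratio is None:
            print("no demand metadata lookups recorded yet")
        else:
            print(f"demand metadata hit ratio: {ratio:.1%}")
    else:
        print(f"{ARCSTATS_PATH} not found (is the ZFS module loaded?)")
```

The counters are cumulative since boot, so sampling them before and after a benchmark run and comparing the difference would probably say more than a single reading.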

For a 1G zvol you can see 1 very small L2 object that holds the references to 128 small L1 objects, which hold the references to 128K L0 data objects. The L0 data objects each represent one block (8k default volblocksize, so that will affect the ratio of metadata:data as well). For a more concrete real-world example, one of my pools has 656G of zvol data objects, and roughly 9G of associated metadata objects which would be stored on a special vdev.
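The 1 / 128 / 128K layout can be reproduced with a few lines of arithmetic. This is a sketch under two assumptions that are not stated in the thread: indirect blocks are 128 KiB and each block pointer takes 128 bytes, so one indirect block references 1024 blocks of the level below.

```python
from typing import List

KIB = 1024
GIB = 1024 ** 3

# Assumptions (not stated in the thread): 128 KiB indirect blocks and
# 128-byte block pointers, i.e. 1024 pointers per indirect block.
INDIRECT_BLOCK_BYTES = 128 * KIB
BLOCK_POINTER_BYTES = 128


def blocks_per_level(zvol_bytes: int, volblocksize_bytes: int = 8 * KIB) -> List[int]:
    """Return block counts per level, starting at L0, until one block remains."""
    pointers_per_indirect = INDIRECT_BLOCK_BYTES // BLOCK_POINTER_BYTES
    count = -(-zvol_bytes // volblocksize_bytes)  # ceiling division: L0 data blocks
    levels = [count]
    while count > 1:
        count = -(-count // pointers_per_indirect)  # blocks needed one level up
        levels.append(count)
    return levels


print(blocks_per_level(1 * GIB))  # [131072, 128, 1]
```

With the default 8 KiB volblocksize this gives 131072 (128K) L0 blocks, 128 L1 blocks and a single L2 block, matching the numbers quoted above. A larger volblocksize shrinks every level proportionally.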

Very interesting. So when using a zvol, the metadata stores information about each block (8 KiB by default). In your example, 656 GiB / 8 KiB volblocksize = ~86 million blocks, each with a metadata entry. Since you had ~9 GiB of metadata objects, that works out to ~112 bytes of metadata per volblock. This yields the following formula for calculating the expected metadata size of a zvol:

zvol size (GiB) / volblocksize (KiB) * 112 = metadata size (MiB)

656 / 8 * 112 = 9,184 MiB
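The same estimate as a small Python helper, in case anyone wants to try other sizes. The 112 bytes per block is derived from that one pool only, so treat it as an assumption and not a ZFS constant. The function name and parameters are mine.

```python
def estimate_zvol_metadata_mib(
    zvol_gib: float,
    volblocksize_kib: float = 8,
    bytes_per_block: float = 112,  # assumption: ratio observed on a single pool
) -> float:
    """Estimate zvol metadata size in MiB from zvol size and volblocksize."""
    # GiB / KiB gives the block count in units of 2**20 blocks, so multiplying
    # by bytes-per-block yields MiB directly.
    return zvol_gib / volblocksize_kib * bytes_per_block


if __name__ == "__main__":
    for volblocksize_kib in (4, 8, 16, 64):
        mib = estimate_zvol_metadata_mib(656, volblocksize_kib)
        print(f"volblocksize={volblocksize_kib:>2} KiB -> ~{mib:,.0f} MiB of metadata")
```

For the 656 GiB example at 8 KiB this returns 9,184 MiB, the same figure as above. Whether the per-block overhead stays at 112 bytes for other volblocksize values is something I have not verified.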

Here's a table I put together to help me visualize how Datasets and Zvols compare. I'm using the term "Chunk" here for lack of a better word.

| | Dataset | ZVol |
|---|---|---|
| ZFS "Chunk" Size Setting | recordsize (default = 128KiB) | volblocksize (default = 8KiB) |
| Metadata stores "Chunk" checksum | Yes | Yes |
| Metadata can store small data objects | Yes (see "special_small_blocks" setting) | No |

Assuming a "chunk's" 256-bit checksum is stored in its metadata object, then a write to the block (async or sync) would incur a checksum calculation and a write to the metadata storage. Therefore, offloading the metadata storage to a special device has the potential to enhance async & sync write performance of a Zvol.

Unanswered Questions

- Would an L2ARC SSD set to secondarycache=metadata have the same write performance benefits as a special device? Intuition says no, because the L2ARC cache only accelerates read performance.

- Are there any write performance benefits of implementing a metadata special device, if a fast SLOG is already configured? Foundational questions:

  - Would a write to the ZFS metadata be handled by an SLOG device?

  - Does ZFS write to metadata synchronously?

Performance Testing

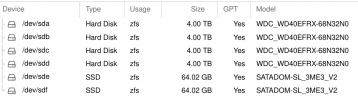

I'm going to be working on this next week, so I should have some feedback after that. The plan is to configure a PVE node to boot several VMs on a ZFS pool. Each VM will run a Windows workload simulator. Here are the six configurations I want to test:

| | Metadata on HDDs | L2ARC SSD (secondarycache=metadata) | Metadata Special Device Mirror |
|---|---|---|---|
| No SLOG / ZIL | | | |
| SLOG / ZIL Mirror | | | |