Hello,

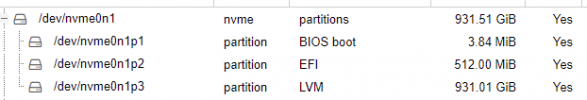

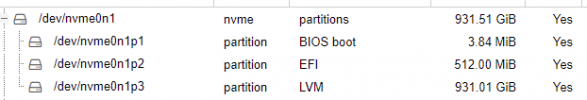

Today I used CloneZilla to image my original 256 GB NVMe disk onto a new 1 TB NVMe disk, with the option to resize the partitions proportionally. It looks like CloneZilla did what it is supposed to do: the system boots, and the Disks view in Proxmox now shows 931.5 GB.

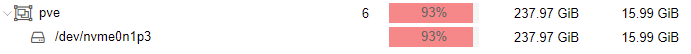

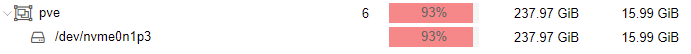

If I go to LVM under Disks, though, I still only see the original 237.97 GB.

If I try to create a new LVM (just to see if it shows available space), it doesn't show any as available.

Is there a way for me to extend the LVM so I can actually use all of the space? Any help would be appreciated; I'd hate to have a 1 TB disk that I can't fully use.

Here is my partition table as well:

Code:

fdisk -l | grep ^/dev

Partition 2 does not start on physical sector boundary.

/dev/nvme0n1p1 34 7900 7867 3.9M BIOS boot

/dev/nvme0n1p2 7901 1056476 1048576 512M EFI System

/dev/nvme0n1p3 1056477 1953525134 1952468658 931G Linux LVM

Here is my version table:

Code:

proxmox-ve: 6.3-1 (running kernel: 5.4.106-1-pve)

pve-manager: 6.3-6 (running version: 6.3-6/2184247e)

pve-kernel-5.4: 6.3-8

pve-kernel-helper: 6.3-8

pve-kernel-5.4.106-1-pve: 5.4.106-1

pve-kernel-5.4.78-2-pve: 5.4.78-2

pve-kernel-5.4.73-1-pve: 5.4.73-1

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.1.2-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.20-pve1

libproxmox-acme-perl: 1.0.8

libproxmox-backup-qemu0: 1.0.3-1

libpve-access-control: 6.1-3

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.3-5

libpve-guest-common-perl: 3.1-5

libpve-http-server-perl: 3.1-1

libpve-storage-perl: 6.3-9

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.6-2

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

proxmox-backup-client: 1.1.1-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.5-1

pve-cluster: 6.2-1

pve-container: 3.3-4

pve-docs: 6.3-1

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.2-2

pve-ha-manager: 3.1-1

pve-i18n: 2.3-1

pve-qemu-kvm: 5.2.0-5

pve-xtermjs: 4.7.0-3

qemu-server: 6.3-10

smartmontools: 7.2-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 2.0.4-pve1
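The fdisk output above shows /dev/nvme0n1p3 already at 931G while the LVM view still reports 237.97 GB, which would be consistent with the partition having been grown but the LVM physical volume on it not. A minimal sketch of the usual sequence for that case follows. Assumptions not confirmed anywhere in this post: the volume group is named `pve` and the logical volume to grow is the thin pool `pve/data` (the Proxmox installer defaults), and all of the free space should go to that one volume. The script only prints the commands unless `DRY_RUN=0` is set, so the plan can be compared against the real `pvs`, `vgs` and `lvs` output first.

```shell
#!/bin/sh
# Sketch: grow an LVM physical volume to fill an already-enlarged partition.
# PV is taken from the fdisk output in this post; the VG and LV names are
# ASSUMED (Proxmox installer defaults) and should be checked with vgs/lvs.
PV="${PV:-/dev/nvme0n1p3}"
VG="${VG:-pve}"          # assumption
LV="${LV:-data}"         # assumption: default thin pool
DRY_RUN="${DRY_RUN:-1}"  # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

plan() {
    run pvs "$PV"                        # current PV size and free space
    run pvresize "$PV"                   # grow the PV to the partition size
    run vgs "$VG"                        # VFree should now show the new space
    run lvextend -l +100%FREE "$VG/$LV"  # hand all free extents to the LV
}

plan
```

If the space should go to a volume that carries a filesystem (for example `pve/root`) rather than to the thin pool, the filesystem would also need to be grown after `lvextend`, e.g. with `resize2fs` for ext4.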
