Recently, the primary non-OS disk in my Proxmox install started showing as 100% free, even though it also shows several hundred GB of data on it. Any VM stored on that disk fails to boot, and creating a new VM on that disk also fails. I suspect a dead drive, but before replacing it I'd like to see whether it can be recovered so I can grab some data off it first.

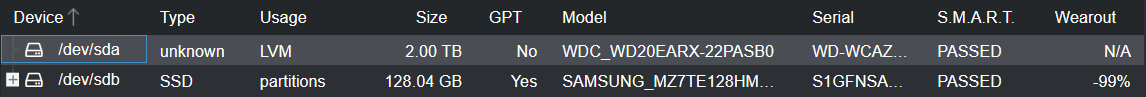

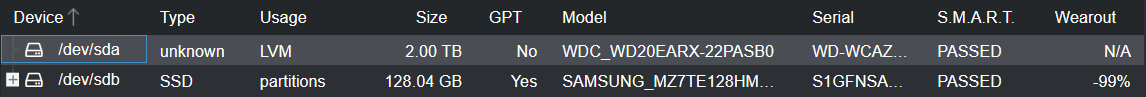

The disk in question is /dev/sda2. It is configured as LVM-thin with no RAID and contains only VM/LXC disks; the OS is on /dev/sdb.

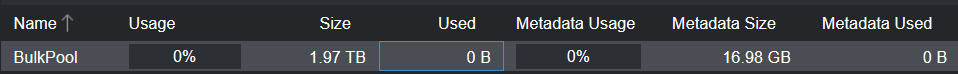

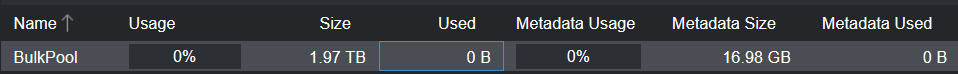

Looking at the LVM-Thin menu, it shows the disk as 0% used:

However, when looking at the disk under the storage view, I see:

I'm not sure where to go from here: is this a dead drive with no way to recover, or is there a chance of getting some of my data off it?
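A minimal sketch of read-only checks that could help tell a failing drive from a damaged LVM-thin pool. It assumes the whole-disk device behind /dev/sda2 is /dev/sda and that it runs as root on the Proxmox host; the `run` helper and the `DISK` variable are names made up for this sketch. It only uses tools from lvm2, smartmontools and util-linux, and none of the commands write to the disk.

```shell
#!/bin/sh
# Read-only diagnostics: nothing below modifies the disk or the LVM metadata.
# Assumption: /dev/sda is the whole-disk device that holds /dev/sda2.
DISK="${DISK:-/dev/sda}"

run() {
    # Echo the command, then run it; report missing tools and failures
    # instead of aborting, so the remaining checks still execute.
    echo "+ $*"
    if command -v "$1" >/dev/null 2>&1; then
        "$@" || echo "  (exit status $?)"
    else
        echo "  ($1 not found, skipped)"
    fi
}

run lsblk "$DISK"            # does the kernel still see the disk and its partitions?
run smartctl -H -A "$DISK"   # SMART health verdict and attribute table
run pvs                      # is /dev/sda2 still listed as a physical volume?
run vgs                      # volume group size and free space
# Thin pool and thin volumes, including data and metadata fill levels.
run lvs -a -o lv_name,vg_name,lv_attr,lv_size,data_percent,metadata_percent
```

If `smartctl` reports a failing health status or `lsblk` no longer lists the partition, that points towards the hardware; if the disk looks healthy but `lvs` shows the pool or its volumes as inactive, the problem is more likely in the thin pool itself.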

If it helps, the output of pveversion -v:

Code:

    root@proxmox:~# pveversion -v
    proxmox-ve: 7.0-2 (running kernel: 5.11.22-4-pve)
    pve-manager: 7.0-11 (running version: 7.0-11/63d82f4e)
    pve-kernel-5.11: 7.0-7
    pve-kernel-helper: 7.0-7
    pve-kernel-5.11.22-4-pve: 5.11.22-8
    ceph-fuse: 15.2.14-pve1
    corosync: 3.1.2-pve2
    criu: 3.15-1+pve-1
    glusterfs-client: 9.2-1
    ifupdown2: 3.1.0-1+pmx3
    ksm-control-daemon: 1.4-1
    libjs-extjs: 7.0.0-1
    libknet1: 1.21-pve1
    libproxmox-acme-perl: 1.3.0
    libproxmox-backup-qemu0: 1.2.0-1
    libpve-access-control: 7.0-4
    libpve-apiclient-perl: 3.2-1
    libpve-common-perl: 7.0-6
    libpve-guest-common-perl: 4.0-2
    libpve-http-server-perl: 4.0-2
    libpve-storage-perl: 7.0-10
    libspice-server1: 0.14.3-2.1
    lvm2: 2.03.11-2.1
    lxc-pve: 4.0.9-4
    lxcfs: 4.0.8-pve2
    novnc-pve: 1.2.0-3
    proxmox-backup-client: 2.0.9-2
    proxmox-backup-file-restore: 2.0.9-2
    proxmox-mini-journalreader: 1.2-1
    proxmox-widget-toolkit: 3.3-6
    pve-cluster: 7.0-3
    pve-container: 4.0-9
    pve-docs: 7.0-5
    pve-edk2-firmware: 3.20200531-1
    pve-firewall: 4.2-2
    pve-firmware: 3.3-1
    pve-ha-manager: 3.3-1
    pve-i18n: 2.5-1
    pve-qemu-kvm: 6.0.0-3
    pve-xtermjs: 4.12.0-1
    qemu-server: 7.0-13
    smartmontools: 7.2-1
    spiceterm: 3.2-2
    vncterm: 1.7-1
    zfsutils-linux: 2.0.5-pve1