This is my issue.

My Proxmox host has 2 SSDs. One is for the host itself, and the other holds the virtual disks for the VMs and containers.

If I run hdparm or dd directly on the host, I get around 370-390 MB/s on the VM SSD, which is what I would expect.

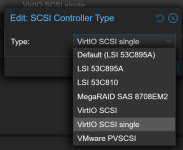

Inside a VM (it doesn't matter whether it's Windows or Ubuntu), write speeds drop to 60-70 MB/s with caching off, and to around 110-130 MB/s with write back enabled.

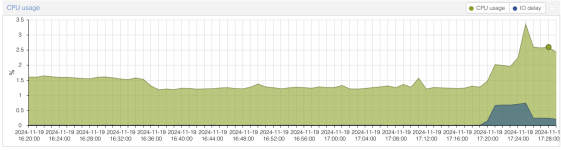
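Host and guest numbers are only comparable if both sides run the same test. Below is a minimal sketch of a sequential write test that can be run unchanged on the host and inside a VM. It assumes Python 3 on both sides. The function name `sequential_write_mbps` and its parameters are hypothetical, chosen for illustration. It calls `fsync` before stopping the timer, so the result includes flushing to disk, roughly like GNU dd's `conv=fdatasync`, rather than measuring only the page cache.

```python
import os
import time

def sequential_write_mbps(path, total_mb=1024, block_kb=1024):
    """Write total_mb of data to path in block_kb chunks and return MB/s.

    Hypothetical helper for illustration. The timer stops only after
    os.fsync, so cached-but-unwritten data is not counted as "written".
    """
    # One random block, reused for every write, so storage with
    # compression enabled cannot shrink the data to almost nothing.
    block = os.urandom(block_kb * 1024)
    blocks = (total_mb * 1024) // block_kb
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()             # push Python's buffer to the OS
        os.fsync(f.fileno())  # ask the OS to commit the data to the device
    elapsed = time.perf_counter() - start
    return (blocks * len(block)) / (1024 * 1024) / elapsed
```

For example, `sequential_write_mbps("/path/on/vm-disk/test.bin")` writes 1 GiB and returns the throughput. The path is a placeholder and should point at a file on the disk under test. Delete the file afterwards.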

With write back enabled, file transfers also pause for a few seconds when I copy large files (6-8 GB).

So why is write speed inside a VM so much slower than on the host itself?
