Charlie

Guest

Which disk type is faster: QCOW2, RAW, or VMDK? IDE or VirtIO? Cache or no cache?

To identify the best disk type and bus method, we needed some rudimentary benchmarks. We've previously posted some of this data in another thread; however, this post has additional information and details, and is specific to KVM (Proxmox). Additional benchmarks on CPU usage are coming soon.

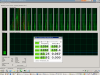

Here are the KVM disk types used by Proxmox VE - http://www.imagehousing.com/image/813009. In the right column for each disk type, you will see a NO CACHE option, which means the cache=none flag was used for that configuration. To our surprise, the VMDK format on VirtIO was the overall "fastest" setup in this test. This thread has more details on caching - http://arstechnica.com/civis/viewtopic.php?f=16&t=1143694
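As a rough sketch (not the exact setup used for these benchmarks), the combinations compared above can be written out as QEMU `-drive` options, using QEMU's `if=`, `format=`, and `cache=` drive parameters. It assumes every combination of the three formats, two buses, and two cache settings was tested, which gives 12 configurations. The image file names are hypothetical, and leaving `cache` unset stands in for the "default" column.

```python
from itertools import product

FORMATS = ["qcow2", "raw", "vmdk"]
BUSES = ["ide", "virtio"]
# None = leave the cache option unset (QEMU default); "none" = the NO CACHE column.
CACHE_MODES = [None, "none"]


def drive_option(fmt, bus, cache):
    """Build a QEMU -drive argument for one test configuration."""
    # Hypothetical image file name; real Proxmox storage paths differ.
    parts = [f"file=test-disk.{fmt}", f"if={bus}", f"format={fmt}"]
    if cache is not None:
        parts.append(f"cache={cache}")
    return "-drive " + ",".join(parts)


def test_matrix():
    """Return one -drive option string per format/bus/cache combination."""
    return [drive_option(f, b, c) for f, b, c in product(FORMATS, BUSES, CACHE_MODES)]


if __name__ == "__main__":
    for opt in test_matrix():
        print(opt)
```

Listing the matrix explicitly makes it easy to check that each disk type was run both with and without cache=none.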

The tests were performed on guest machines running Windows Server 2008 R2 SP1 x64. Each test configuration was run on a clean, unattended (standard configuration) install of the operating system. Each guest had 1256 MB of RAM allocated. The hardware was a low-end Dell Precision with a 2.0 GHz dual-core CPU and a 7200 RPM 500 GB Seagate Barracuda drive. We intend to repeat these tests on a newer Intel server-class machine when a spare becomes available. We would also like to run all of these tests concurrently; however, that requires more resources.

Optional Link to the tests - http://i51.tinypic.com/158bcl4.gif