Hey all,

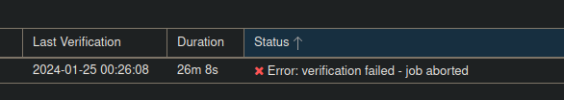

I'm seeing a weird behaviour on one of my PBS node. The GUI verify job which should run every day has been failing for a few days now. Upon further investigation, the verify job is trying to verify a non-existent (probably pruned) backup : (datastore name has been censored but isn't strictly relevant here)

Weirdly, launching a verify job from the CLI works perfectly.

Version :

proxmox-backup-server 3.1.2-1 running version: 3.1.2

The GC job runs everyday at 2100 with no issue.

Cheers

I'm seeing weird behaviour on one of my PBS nodes. The verify job configured in the GUI, which should run every day, has been failing for a few days now. Upon further investigation, the verify job is trying to verify a non-existent (probably pruned) backup (the datastore name has been censored, but isn't strictly relevant here):

Code:

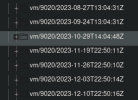

2024-01-24T12:47:18+01:00: verify hello-pbs2:vm/9020/2023-11-23T22:50:15Z

2024-01-24T12:47:18+01:00: check qemu-server.conf.blob

2024-01-24T12:47:18+01:00: check drive-scsi0.img.fidx

2024-01-24T12:48:19+01:00: verified 3681.25/21544.00 MiB in 60.41 seconds, speed 60.94/356.64 MiB/s (0 errors)

2024-01-24T12:48:19+01:00: TASK ERROR: verification failed - job aborted
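In case it helps anyone reproduce the check, here's a rough POSIX sh sketch (not a PBS tool, just an illustration) that pulls the snapshot path out of `verify <store>:<type>/<id>/<timestamp>` lines like the one above and tests whether that directory exists. It assumes the `<datastore-dir>/<type>/<id>/<timestamp>/` layout visible in the `ls` output below, and it takes the datastore directory as an argument because the mount path doesn't have to match the datastore name. The function names are made up.

```shell
#!/bin/sh
# Rough sketch, not a PBS tool: checks whether the snapshots named in a
# verify task log still exist on disk. Assumes log lines of the form
#   <time>: verify <store>:<type>/<id>/<timestamp>
# and the on-disk layout <datastore-dir>/<type>/<id>/<timestamp>/.

# Print "<type>/<id>/<timestamp>" from a "verify <store>:..." line;
# return 1 for any other line (e.g. "verified ..." or "check ...").
snapshot_from_log() {
  local snap
  snap=$(printf '%s\n' "$1" | sed -n 's/.*verify [^:][^:]*:\([^[:space:]]*\).*/\1/p')
  [ -n "$snap" ] || return 1
  printf '%s\n' "$snap"
}

# Report whether <datastore-dir>/<snapshot> is a directory; return 1 if not.
check_snapshot() {
  local datastore_dir="$1" snapshot="$2"
  if [ -d "$datastore_dir/$snapshot" ]; then
    printf 'present: %s\n' "$snapshot"
  else
    printf 'missing: %s\n' "$snapshot"
    return 1
  fi
}

# Read task-log lines on stdin and check every snapshot they mention.
# Returns non-zero if at least one snapshot directory is missing.
check_task_log() {
  local datastore_dir="$1" line snap status=0
  while IFS= read -r line; do
    snap=$(snapshot_from_log "$line") || continue
    check_snapshot "$datastore_dir" "$snap" || status=1
  done
  return "$status"
}
```

With the task log saved as `verify.log`, something like `. ./check-snapshots.sh && check_task_log /mnt/datastore/hellothere-pbs2 < verify.log` should report `missing: vm/9020/2023-11-23T22:50:15Z` if that directory really is gone, and return non-zero.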

Code:

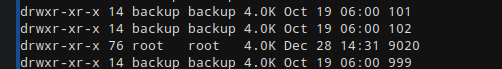

root@hellothere-PBS2:/mnt/datastore/hellothere-pbs2/vm/9020# ls -lah | grep 2023-11-2

drwxr-xr-x 2 backup backup 4.0K Jan 23 17:36 2023-11-26T22:50:10Z

root@hellothere-PBS2:/mnt/datastore/hellothere-pbs2/vm/9020#Weirdly, launching a verify job from the CLI works perfectly.

```
root@heya-PBS2:/mnt/datastore/wololo-pbs2/vm/9020# proxmox-backup-manager verify hellothere-pbs2
[...]
percentage done: 100.00% (47/47 groups)
TASK OK
```

Version: `proxmox-backup-server 3.1.2-1 running version: 3.1.2`

The GC job runs every day at 21:00 without any issues.

Cheers