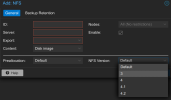

Hi, I am attempting to disable NFS server version 4 on my Proxmox VE 8.xx environment. I followed this guide:

Code:

https://unix.stackexchange.com/questions/205403/disable-nfsv4-server-on-debian-allow-nfsv3

and tried to update both

Code:

/etc/default/nfs-kernel-server

and

Code:

/etc/init.d/nfs-kernel-server

directly, but with no luck. After restarting and running

Code:

cat /proc/fs/nfsd/versions

I get

Code:

+3 +4 +4.1 +4.2

Also, when I look at the rpc.mountd process by running ps -ef |grep rpc.mount, it does not show any parameters next to it:

Code:

root 1883 1 0 15:43 ? 00:00:00 /usr/sbin/rpc.mountd
root 4069 2486 0 15:49 pts/0 00:00:00 grep rpc.mount

Does anyone have any idea why this is happening, and whether there is a way to disable NFSv4 on Proxmox 8.xx?

Thanks
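To make the /proc/fs/nfsd/versions check repeatable after each configuration change and restart, a small shell sketch along these lines might help. The function name nfs4_enabled_in is hypothetical, and the sketch assumes that enabled versions carry a + prefix (as in the output quoted in this post) and that disabled ones carry a - prefix.

```shell
#!/bin/sh
# Hypothetical helper: given a /proc/fs/nfsd/versions-style string,
# print "yes" if any NFSv4 version is listed as enabled ("+4", "+4.1", ...),
# otherwise print "no".
# Assumption: disabled versions are shown with a "-" prefix.
nfs4_enabled_in() {
    for v in $1; do
        case "$v" in
            +4|+4.*) echo "yes"; return 0 ;;
        esac
    done
    echo "no"
}

# Only read the live file when it is readable (nfsd loaded and running).
if [ -r /proc/fs/nfsd/versions ]; then
    echo "NFSv4 enabled: $(nfs4_enabled_in "$(cat /proc/fs/nfsd/versions)")"
fi
```

With the output quoted in this post, `nfs4_enabled_in "+3 +4 +4.1 +4.2"` prints `yes`. The sketch only reports the current state; it does not change any server setting.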